Research:Automated classification of edit quality

This page documents a research project in progress.

Information may be incomplete and change as the project progresses.

Please contact the project lead before formally citing or reusing results from this page.

Overall, most contributors to Wikimedia projects contribute in good faith[1]. By keeping our editing model open, we get to take advantage of this fact, but it also means that we have to make sure that edit review and curation work can keep up with the rate of new contributions.

One of the greatest advancements in building edit reviewing and curation capacity is the machine classifier. Machine classifiers substantially reduce the number of edits that *need* review. While these tools have been used extensively on English and German Wikipedia, they are not available on most other large wikis or on any smaller wikis. In the R:Revision scoring as a service project, we're working to bring useful machine learning models to any wiki community that will partner with us to do so.

Model types

In this project we are exploring effective strategies for classifying edits by their quality. There are several classes that we are actively trying to predict:

- reverted -- Will this edit need to be reverted (in a way that looks like it was damaging)?
- damaging -- Did this edit cause damage to the wiki? (Labels gathered with wiki labels)
- goodfaith -- Was this edit made in good faith? (Labels gathered with wiki labels)

Results

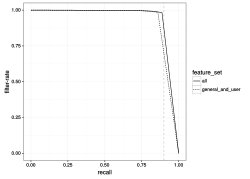

See ORES/reverted, ORES/damaging and ORES/goodfaith for the fitness statistics of the models.

Wikidata

See Research:Building automated vandalism detection tool for Wikidata. That work describes a machine classification strategy that catches 89% of vandalism while reducing patrollers' workload by 98%. It draws lightly on contextual features of an edit and heavily on the characteristics of the user making the edit.
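The two figures quoted above correspond to two quantities that can be computed from any scored set of edits: the share of vandalism that is caught (recall) and the share of edits that patrollers no longer need to look at (filter rate). The following is a minimal sketch of that arithmetic, not the method used in the Wikidata study. The function name `recall_and_filter_rate`, the threshold value, and the toy data are all hypothetical and chosen only for illustration.

```python
from typing import List, Tuple


def recall_and_filter_rate(
    scored_edits: List[Tuple[float, bool]], threshold: float
) -> Tuple[float, float]:
    """Return (recall, filter_rate) for a review threshold.

    scored_edits: (score, is_vandalism) pairs, where score is a classifier's
    estimated probability that the edit is vandalism (hypothetical input).
    Edits with score >= threshold are flagged for human review.
    """
    if not scored_edits:
        raise ValueError("scored_edits must not be empty")

    flagged = [(score, bad) for score, bad in scored_edits if score >= threshold]
    total_vandalism = sum(1 for _, bad in scored_edits if bad)
    caught = sum(1 for _, bad in flagged if bad)

    # Recall: fraction of all vandalism that ends up in the review queue.
    recall = caught / total_vandalism if total_vandalism else 0.0
    # Filter rate: fraction of all edits that patrollers can skip.
    filter_rate = 1 - len(flagged) / len(scored_edits)
    return recall, filter_rate


# Made-up toy data: ten edits, three of which are vandalism.
toy_edits = [
    (0.95, True), (0.90, True), (0.80, False), (0.40, True), (0.30, False),
    (0.20, False), (0.10, False), (0.05, False), (0.03, False), (0.01, False),
]
recall, filter_rate = recall_and_filter_rate(toy_edits, threshold=0.5)
print(f"recall={recall:.2f}, filter_rate={filter_rate:.2f}")
```

Lowering the threshold raises recall at the cost of a lower filter rate, so a figure such as "89% of vandalism caught at a 98% workload reduction" describes one point on that trade-off.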

References

- ↑ Basically the entire literature on vandalism fighting and the productivity of Wikipedians. For a good summary, see Research:Building_automated_vandalism_detection_tool_for_Wikidata