Research:Building automated vandalism detection tool for Wikidata

This page is an incomplete draft of a research project.

Information is incomplete and is likely to change substantially before the project starts.

Wikidata, like Wikipedia, is a knowledge base that anyone can edit. This open collaboration model is powerful in that it reduces barriers to participation and allows a large number of people to contribute. However, it exposes the knowledge base to the risk of vandalism and low-quality contributions. In this work, we build off of past work detecting vandalism in Wikipedia to detect vandalism in Wikidata. This work is novel in that identifying damaging changes in a structured knowledge-base requires substantially different feature engineering work than in a text-based wiki. We also discuss the utility of these classifiers for reducing the overall workload of recent changes vandalism patrollers in Wikidata. We describe a machine classification strategy that is able to catch 89% of vandalism while reducing patrollers' workload by 98% by drawing lightly from contextual features of an edit and heavily from the characteristics of the user making the edit.

Introduction

Wikidata (www.wikidata.org) is a free knowledge base that everyone can edit. It is a collaborative project aiming to produce a high quality, language-independent, open-licensed, structured knowledge base. Like Wikipedia, the project is open to contributions from anyone willing to contribute productively, but also open to potentially damaging/disruptive contributions. In order to combat such intentional damage, volunteer patrollers work to review changes to the database after they are saved. At a rate of about 80,000 human edits and 200,000 automated edits per day (as of February 2015), though, the task of reviewing every single edit would be daunting even for a very large pool of patrollers. Recently, substantial concerns have been raised about the quality and accuracy of Wikidata's statements[1], and therefore, the long-term viability of the project. These concerns call for the design of scalable quality control processes.

Similar concerns about quality control have been raised about Wikipedia in the past[2]. Studies of Wikipedia's quality have shown that, even at large scale and with open permissions, a high-quality information resource can be maintained[2][3]. One of the key technologies that let Wikipedia maintain quality efficiently at scale is the use of machine classifiers for detecting vandalism edits. These technologies allow the massive feed of daily changes to be filtered down to a small percentage that is most likely to actually be vandalism, substantially reducing the workload of patrollers[4][5]. These semi-automated support systems also substantially reduce the amount of time that an article in Wikipedia remains in a vandalized state. The study of vandalism detection in Wikipedia has seen substantial development as a field in the scholarly literature, to great benefit of the project[6][7][8][9].

In this study, we extend and adapt methods from the Wikipedia vandalism detection literature to Wikidata's structured knowledge base. In order to do so, we develop novel techniques for extracting signal from the types of changes that editors make to Wikidata's items. Unlike this past literature, however, we focus our evaluation on the key concern of Wikidata patrollers who are tasked with reviewing incoming edits for vandalism: reducing their workload. We show that our machine classifier can be used to reduce the number of edits that need review by up to 98% while still maintaining a recall of 89% using an off-the-shelf implementation of a Random Forest classifier[10][11].

Wikidata in a nutshell

Wikidata consists mainly of two types of entities: items and properties. Items represent definable things. Since Wikidata is intended to operate in a language-independent way, each item is uniquely identified by a number prefixed with the letter "Q". Properties describe a data value of a statement that can be predicated of an item. Like items, properties are uniquely identified by a number prefixed with the letter "P".

Each item in Wikidata consists of five sections.

- Labels: a name for the item (unique per language)

- Descriptions: a short description of the item (unique per language)

- Aliases: alternative names that could be used as a label for the item (multiple aliases can be specified per language)

- Statements: property and data value pairs such as country of citizenship, gender, nationality, image, etc. Statements can also include qualifiers (which include sub-statements like the date of a census for a population count) and sources (like Wikipedia, Wikidata demands reliable sources for its data)

- Site links: links to Wikipedia and other Wikimedia projects (such as Wikisource[12]) that reference the item.

For example, the item representing the city of San Francisco (Q62) contains the following statement: (P190, Q90). P190 is a property described in English as "sister city" and Q90 is the item for the city of "Paris, France". This statement represents the fact that San Francisco (Q62) has a sister city (P190) named Paris (Q90). Using so-called SPO triples (subject-predicate-object) as a means of storing knowledge is a common practice in knowledge bases such as Freebase.
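
The San Francisco example can be written down as a small data structure. The following is only an illustrative sketch: the `Statement` type, the `describe` helper, and the `LABELS` lookup are hypothetical names introduced here, not part of Wikidata's software.

```python
from typing import NamedTuple


class Statement(NamedTuple):
    """One subject-predicate-object triple (hypothetical helper type)."""
    subject: str    # item ID, e.g. "Q62"
    predicate: str  # property ID, e.g. "P190"
    obj: str        # value; in this example another item ID


# English labels copied from the example in the text. A real lookup would
# come from the knowledge base itself.
LABELS = {"Q62": "San Francisco", "P190": "sister city", "Q90": "Paris"}


def describe(stmt, labels):
    """Render a triple in readable form, falling back to the raw ID."""
    return " -> ".join(f"{labels.get(x, x)} ({x})" for x in stmt)


statement = Statement("Q62", "P190", "Q90")
print(describe(statement, LABELS))
```

Because the identifiers are language-independent, only the label lookup would change when rendering the same statement in another language.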

Related work

The subject of quality in open production has been extensively studied in open text editing contexts like Wikipedia, but comparatively little study has been done in open structured data editing contexts. In this section, we provide an overview of some of the most relevant work exploring quality in open contexts like Wikipedia and Wikidata.

Wikidata and Wikipedia operate in a common context: they are supported by the Wikimedia Foundation[13]; virtually all Wikidata users are also editors of Wikipedia and/or other Wikimedia wikis; and Wikidata, like Wikipedia, is powered by the MediaWiki software, although Wikidata uses the "wikibase" extension[14] to manage structured data. Thus, damage detection in Wikipedia is closely related to vandalism detection in Wikidata. However, there are also other open contribution structured data projects where quality and vandalism detection have been a focus of scholarly inquiry.

Quality in Wikipedia. Quality in Wikipedia has been studied so extensively that we can't give a fair overview of all related work, so here, we provide a limited overview of the work that is related to quality prediction and editing dynamics.

Stvilia et al. built the first automated quality prediction models for Wikipedia, which were able to distinguish between Featured (the highest quality classification) and non-Featured articles[15]. Warncke-Wang et al. extended this work by showing that the features used in prediction could be limited to actionable characteristics of articles in Wikipedia while maintaining a high level of fitness[16], and used these predictions in task routing.

Kittur et al. explored the process by which articles improve most efficiently and found that articles with a small group of highly active editors and a large group of less active editors were more likely to increase in quality than articles whose editors contributed more evenly[17]. They argued that this is due to the lower coordination cost when few people are primarily engaged in the construction of an article. Arazy et al. challenged the conclusions of Kittur et al. by showing a strong correlation between diversity of experience (global inequality) among active editors and positive changes in article quality[18]. The visibility of articles in Wikipedia seems to be critical to their development. Halfaker et al. showed that hiding newly created articles from Wikipedia readers in a drafting space substantially reduced the overall productivity of editors in Wikipedia[19].

Detecting vandalism in Wikipedia using machine learning classifiers has been an active genre since 2008[20]. There are generally two types of damage detection problems discussed in the literature: realtime and post-hoc. The realtime framing of damage detection imagines the classifier supporting patrollers by helping them find vandalism shortly after it has happened. The post-hoc framing of damage detection imagines the classifier being used long after an edit has been saved (and potentially reverted by patrollers). Since the post-hoc framing allows the model to take advantage of what happens to a contribution after it is saved (e.g. that it was reverted), these classifiers are able to attain a much higher level of fitness than realtime classifiers that must make judgements before a human has responded to an edit. However, the utility of a post-hoc classifier is at best hypothetical while realtime classifiers have become a critical infrastructure for Wikipedia patrollers[5][21]. Geiger et al. discussed the "distributed cognition" system that formed through the integration of counter-vandalism tools that use machine classification and social practices around quality control[22]. Geiger et al. showed in a follow-up work that, when systems that use automated vandalism detection go offline, vandalism is not reverted as quickly which results in twice as many views of vandalized articles[21].

Substantial effort has been put into developing high signal features for vandalism classifiers. Adler et al. were able to show substantial gains in model fitness when including user-reputation metrics as features[8]. Among several other metrics, they primarily evaluated the fitness of their classifier using the area under the receiver operating characteristic curve[23] (ROC-AUC) and were able to attain 93.4%. Other researchers explored the use of stylometric features and were able to attain an ROC-AUC of 92.9%[6][7]. West et al. explored spatio-temporal features and built and evaluated models predicting vandalism over only anonymous (not logged-in) user edits because those are the editors from whom most vandalism ("offending edits" in West et al.'s terms) originates[24]. Adler et al. continued this work by comparing all of these feature extraction strategies/models and combining them to attain an ROC-AUC of 96.3%[9]. They went on to call for a focus on the area under the precision-recall curve (PR-AUC) instead of the ROC-AUC, since it affords more discriminatory power between the overall fitness of models in the context of a low prevalence prediction problem (few positive examples, as is the case with vandalism in Wikipedia).
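
To illustrate why the two metrics can diverge at low prevalence, here is a minimal sketch in plain Python. The data is synthetic and purely illustrative (1,000 edits, 10 of them vandalism), and average precision is used as a common estimator of PR-AUC; neither the data nor the helper functions come from the cited work.

```python
def roc_auc(labels, scores):
    """Probability that a random positive outscores a random negative."""
    pos = [s for l, s in zip(labels, scores) if l]
    neg = [s for l, s in zip(labels, scores) if not l]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision values at the rank of each positive example."""
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            total += hits / rank
    return total / hits


# Synthetic data: 990 good edits with evenly spread scores and 10 vandalism
# edits that score higher than all but 39 of the good edits.
labels = [0] * 990 + [1] * 10
scores = [i / 1000 for i in range(990)] + [0.9505] * 10

print(f"ROC-AUC: {roc_auc(labels, scores):.3f}")
print(f"Average precision: {average_precision(labels, scores):.3f}")
```

On this toy data the ROC-AUC is about 0.96 while the average precision is only about 0.12: a few dozen false positives barely move the ROC curve when negatives number in the hundreds, but they dominate precision when positives are rare.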

Quality in Wikidata. Like Wikipedia, Wikidata is based on the MediaWiki software, which provides several means for tracking and reviewing changes to content. For example, watchlists[25] allow editors to be notified about changes made to items and properties that they are interested in. The recentchanges feed[26] provides an interface for reviewing all changes that have been made to the knowledge base. Wikidata also uses tools related to its own quality demands. Most notably, "Constraint violation reports"[27] is a dynamic list of possible errors in statements that is generated using predefined rules for properties. For example, if a cat is mentioned as the spouse of a human being, that statement is likely to need review. Other tools such as Kian[28] also expose possible errors in Wikidata by comparing data in Wikidata with values extracted from Wikipedia. Despite all of these efforts on quality control, concerns have still been raised regarding Wikidata's reliability. For example, Kolbe[29] calls into question whether Wikidata will ever be able to verify and source its statements.

Regarding vandalism detection, Heindorf et al. have studied the demography of vandalism in Wikidata[30], showing interesting dynamics in who vandalizes Wikidata. For example, most of the vandals in Wikidata have already vandalized at least one language edition of Wikipedia[30]. As far as we can tell, ours is the first published work on a vandalism detection classifier for Wikidata.

Quality in other structured data repositories. There have been several research projects on damage detection in knowledge bases. Most notably, Tan et al.[31] worked on detecting the correctness of data added to Freebase[32]. They assumed that, if a statement survives for four weeks, it is probably a good contribution. They also showed that the ratio of correct statements previously added by a user is not predictive of the correctness of that user's future statements, but that defining an area of expertise for each user makes proper predictions possible. As we show, this does not apply to Wikidata. The difference may stem from the different classification targets: they were assessing the correctness of data, while our aim is to detect vandalism. Nies et al. \cite{} studied vandalism in OpenStreetMap (OSM). OSM, like Wikidata, is an open structured database, but unlike our work, they did not draw from the substantial history of vandalism detection in Wikipedia, and they did not use machine learning. Their method is a sparsely described "rule-based" scoring system that would be difficult to reproduce. In our work, we draw extensively from past work building high fitness vandalism detection models for Wikipedia. We use training and testing strategies that are intended to be straightforward to replicate. We have adopted standard metrics from the Wikipedia vandalism detection literature and supplement them with our own intuitive evaluation metric (filter-rate), which corresponds to real effort saved for Wikidata patrollers.

Methods

Designing damage detection classifiers that can be effectively used by Wikidata patrollers requires two conditions. First, the classifier should be able to respond and classify edits in a timely manner, i.e. within a few seconds: reviewing large sets of edits would be infeasible with longer response times. On average, two edits are made by humans in Wikidata every second. Given this high edit rate, using post-hoc features (such as the time an edit stays without being reverted) is undesirable in a production environment. Second, two distinct use cases need to be supported: auto-reverting of edits by bots and triaging edits to be reviewed by humans. In the first use case, the classifier is expected to have a high level of confidence, for instance 99-99.9% precision. In this case, the classifier will mainly catch obvious vandalism (e.g. blanking of a statement), although higher recall would be helpful. In the second use case, the classifier is expected to have high recall: low precision can be tolerated, but it should not be so low that the classifier in practice flags all edits.
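
The two use cases amount to choosing two different thresholds on the same classifier score. The sketch below shows one way this could be done on a labeled sample; the function names and the toy data are hypothetical and are not taken from our implementation.

```python
def precision_recall_at(labels, scores, threshold):
    """Precision and recall when flagging every edit scored >= threshold."""
    flagged = [l for l, s in zip(labels, scores) if s >= threshold]
    true_pos = sum(flagged)
    total_pos = sum(labels)
    precision = true_pos / len(flagged) if flagged else 1.0
    recall = true_pos / total_pos if total_pos else 0.0
    return precision, recall


def auto_revert_threshold(labels, scores, min_precision):
    """Lowest threshold whose flagged set still meets min_precision."""
    best = None
    for t in sorted(set(scores), reverse=True):
        precision, _ = precision_recall_at(labels, scores, t)
        if precision >= min_precision:
            best = t  # keep going: a lower threshold may also qualify
    return best


def triage_threshold(labels, scores, min_recall):
    """Highest threshold that still catches min_recall of the vandalism."""
    for t in sorted(set(scores), reverse=True):
        _, recall = precision_recall_at(labels, scores, t)
        if recall >= min_recall:
            return t
    return None


# Toy sample: 1 = vandalism, 0 = good edit.
labels = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
scores = [0.99, 0.97, 0.95, 0.90, 0.60, 0.40, 0.30, 0.20, 0.10, 0.05]

print(auto_revert_threshold(labels, scores, min_precision=0.99))
print(triage_threshold(labels, scores, min_recall=1.0))
```

In this toy sample a bot could act on scores of 0.95 and above without touching a good edit, while patrollers would need to review everything scored 0.60 and above to see all of the vandalism.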

In order to build a classifier usable by Wikidata users, we leverage the Wikimedia Labs infrastructure hosted by the Wikimedia Foundation. We rely on a service called ORES (for Objective Revision Evaluation Service), which can host machine learning classifiers for all Wikimedia Foundation projects, including Wikipedia and Wikidata. ORES offers two modes for scoring edits: a single edit mode and a batch mode. We measured ORES response time by scoring 1,000 randomly sampled edits. Response time in single edit mode varies between 0.0076 and 14.6 seconds with a mean of 0.66 seconds and a median of 0.53 seconds. In batch mode with sets of 50 edits, response time falls between 0.56 and 13.9 seconds with a mean of 6.23 seconds and a median of 5.58 seconds. In single edit mode, the distribution of response times shows two noticeable peaks: the first around 0.3 seconds and the second around 0.55 seconds.
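
A timing measurement of this kind can be sketched as follows. This is not the script we used: `fake_score` is a hypothetical stand-in for a request to the scoring service, so the harness runs without network access.

```python
import statistics
import time


def time_calls(score_fn, rev_ids):
    """Return the wall-clock duration in seconds of each scoring call."""
    durations = []
    for rev_id in rev_ids:
        start = time.perf_counter()
        score_fn(rev_id)
        durations.append(time.perf_counter() - start)
    return durations


def summarize(durations):
    """Summary statistics of the kind reported in the text."""
    return {
        "min": min(durations),
        "max": max(durations),
        "mean": statistics.mean(durations),
        "median": statistics.median(durations),
    }


def fake_score(rev_id):
    # Hypothetical stand-in for a request to the scoring service.
    return {"rev_id": rev_id, "score": 0.5}


summary = summarize(time_calls(fake_score, range(1000)))
print(summary)
```

Reporting the median alongside the mean matters here because a few very slow requests can pull the mean well above the typical response time.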

Building a corpus

While there has been substantial work done in the past to build a high quality vandalism corpus for Wikipedia[33], no such work has been done for Wikidata. Research by Heindorf et al.[30] was intended to build such a corpus, but their method (matching edit comments for the use of specific tools) is not suitable for our purposes because it mislabels a substantial number of edits. They also assume that patrollers only use one of two methods available in the editing interface to revert vandalism: "rollback" and "restore". Their qualitative analysis shows that 86% of rollbacked edits and 62% of edits reverted using the restore feature were vandalism. Training and testing on such a corpus can go wrong in two ways. In the first scenario, the classifier learns to predict all cases of vandalism regardless of the reverting method; it would then appear to perform poorly in evaluation, because its correct predictions on true vandalism edits that were mislabeled in the corpus (i.e., reverted by means other than "rollback") would be counted as errors. In the second scenario, the classifier only learns to classify edits that are reverted using the "rollback" method. In this case, the classifier is substantially less useful in practice, but it would show high scores in the evaluation phase because it effectively ignores all mislabeled items in the corpus. Thus, training and testing a classifier solely based on "rollbacked" edits is problematic.

Rather than rely on this corpus, we applied our own strategy for identifying edits that are likely to be vandalism. First, we randomly sampled 500,000 edits saved by humans (non-bot editors) in Wikidata in the year 2015. Next, we labeled edits that were reverted during an "identity revert event" (see [34]). We then applied several filters to the dataset to examine cross sections of it. First, we filtered out edits that were performed by users who attained a high status in Wikidata by receiving advanced rights (including: sysop, checkuser, flood, ipblock-exempt, oversight, property-creator, rollbacker, steward, translationadmin, wikidata-staff). Second, we filtered out edits that originated from other wikis (known as "client edits") and edits that merged two Wikidata items. This left us with a set of regular edits by non-trusted users. Finally, we reviewed random samples of reverted and non-reverted edits in a few key subsets to get a sense for which of these filters could be applied when identifying vandalism.
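
An identity revert can be detected by comparing content checksums across an item's history: when a revision restores exactly the content of an earlier revision, the revisions in between are considered reverted. The following is a minimal sketch of that idea, not the implementation referenced in [34]; the input format and the window size of 15 revisions are assumptions made for illustration.

```python
def find_identity_reverts(revisions, radius=15):
    """Detect identity reverts in one item's history.

    revisions: list of (rev_id, checksum) tuples in chronological order.
    radius: how many revisions back to search (illustrative default).
    Returns (reverting_id, reverted_ids, reverted_to_id) tuples.
    """
    reverts = []
    for i, (rev_id, checksum) in enumerate(revisions):
        window_start = max(0, i - radius)
        # Search backwards, skipping the immediate predecessor: identical
        # neighbors are a null edit and leave nothing in between to revert.
        for j in range(i - 2, window_start - 1, -1):
            if revisions[j][1] == checksum:
                reverted = [r for r, _ in revisions[j + 1:i]]
                reverts.append((rev_id, reverted, revisions[j][0]))
                break
    return reverts


# Revision 4 restores the content of revision 1, reverting 2 and 3.
history = [(1, "a"), (2, "b"), (3, "c"), (4, "a")]
print(find_identity_reverts(history))  # [(4, [2, 3], 1)]
```

Unlike matching on edit comments, this approach does not depend on which tool the patroller used to perform the revert.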

| | edits | reverted |

|---|---|---|

| trusted user edit | 461176 | 1188 (0.26%) |

| merge edit | 8241 | 38 (0.46%) |

| client edit | 10099 | 109 (1.08%) |

| non-trusted regular edit | 22460 | 622 (2.77%) |

We can safely exclude client edits since, if they are vandalism, they are vandalism to the originating wiki. Edits by trusted users are reverted at an extremely low rate, but it's still worth reviewing them, and so is reviewing merge edits. Finally, non-trusted regular edits are reverted at a high rate of 2.77%, which is more in line with the rates seen for all edits in English Wikipedia[33]. To make sure that reverts catch most of the vandalism, we manually reviewed both the reverted and non-reverted regular edits by non-trusted users.

| | good | good-faith damaging | vandalism |

|---|---|---|---|

| reverted merge edits | 17 | 21 | 0 |

| reverted trusted user edits | 93 | 7 | 0 |

| reverted non-trusted regular edits | 8 | 24 | 68 |

| non-reverted non-trusted regular edits | 94 | 3 | 1 |

This analysis suggests that reverted edits by non-trusted users are highly likely to be intentional vandalism (68%) or at least damaging (92%) and that non-reverted edits by users in this group are unlikely to be vandalism (1%) or damaging (4%). Further, it appears that reverted merge edits and reverted edits by trusted users are very unlikely to be vandalism (0% observed) – though many merges are good-faith mistakes that violate some Wikidata policy. Based on this analysis, we built a corpus of edits based on this 500,000 sample and labeled reverted regular edits by non-trusted users as True (vandalism) and all other edits as False (not vandalism). From this 500,000 set, we randomly split 400,000 edits for training and hyper-parameter optimization and 100,000 edits for testing. All test statistics were drawn from this 100,000 test set.
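
The labeling rule and the train/test split described above can be sketched as follows. The field names on the edit records (`reverted`, `is_client_edit`, `is_merge`, `user_groups`) and the fixed random seed are hypothetical choices for this illustration.

```python
import random

# Groups treated as trusted, taken from the list given in the text.
TRUSTED_GROUPS = {
    "sysop", "checkuser", "flood", "ipblock-exempt", "oversight",
    "property-creator", "rollbacker", "steward", "translationadmin",
    "wikidata-staff",
}


def label_edit(edit):
    """True (vandalism) only for reverted regular edits by non-trusted users."""
    trusted = bool(TRUSTED_GROUPS & set(edit["user_groups"]))
    regular = not (edit["is_client_edit"] or edit["is_merge"])
    return bool(edit["reverted"]) and regular and not trusted


def split(edits, n_train, seed=0):
    """Shuffle reproducibly, then cut into training and test sets."""
    shuffled = list(edits)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


edits = [
    {"reverted": True, "is_client_edit": False, "is_merge": False, "user_groups": []},
    {"reverted": True, "is_client_edit": False, "is_merge": True, "user_groups": []},
    {"reverted": True, "is_client_edit": False, "is_merge": False, "user_groups": ["sysop"]},
    {"reverted": False, "is_client_edit": False, "is_merge": False, "user_groups": []},
]
print([label_edit(e) for e in edits])  # [True, False, False, False]
```

With the full sample, `split(edits, 400_000)` would produce the 400,000/100,000 division used for training and testing.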

Comparing our work to that of Heindorf et al.[30], we found that only 63% (439) of the edits we identified as vandalism were reverted using the "rollback" method, 15% (104) were reverted using "restore", and 22% (155) were reverted using other methods.

Feature engineering

Before starting to build the damage detection classifier, we launched a community consultation asking Wikidata users to provide examples of common patterns of vandalism. We received around thirty patterns and examples. This community feedback helped us build the initial model, which was launched on October 29, 2015. A second community consultation was launched for reporting possible mistakes of the initial model, and more than 20 cases of false positives and false negatives were reported, which helped the authors improve damage detection, mostly by adding proper features. In order to obtain accurately labeled data, a campaign was launched asking community members to hand-code 4,283 edits. At the time of this writing, this campaign is half-way through, and its data is used in this research to examine the accuracy of the models and of the automated labels of edits that are used in training them. The classifier is also accessible to everyone[35], which allows users and experts to comment on the algorithms and methods used.

Features

| General metrics | |

|---|---|

| Number of sitelinks added/removed/changed/current | Sitelinks are links from a Wikidata item to Wikipedia or other sister projects such as Wikiquote, etc. |

| Number of labels added/removed/changed/current | Items can have multiple labels, unique per language. |

| Number of descriptions added/removed/changed/current | Items can have multiple descriptions, unique per language. |

| Number of statements added/removed/changed/current | Statements are subject-predicate-object triplets. |

| Number of aliases added/removed/current | Items can have one or more aliases per language. |

| Number of badges added/removed/current | Badges determine when articles linked to an item are of a high quality ("featured articles"). |

| Number of qualifiers added/removed/current | Qualifiers restrict the validity of a statement to a specific range. For example, a statement about the population of a city can accept census year as a possible qualifier. |

| Number of references added/removed/current | Each statement can have zero or more references, used to represent the provenance of the data. |

| Number of changed identifiers | Identifiers are properties that allow mapping an item to a corresponding item in an external knowledge base. VIAF ids, ISBN or IMDb ids are examples of identifiers. |

| Typical vandalism patterns | |

| Proportion of Q-ids added | The addition of multiple statements to an item in a single edit. |

| If English label has changed | Changing the English label. |

| Proportion of language names added | Adding language names such as "English" as the values of a statement. |

| Proportion of external links added | Spamming Wikidata items by adding external links |

| Is gender changed | Changing value for the gender property |

| Is country of citizenship changed | Changing value for country of citizenship |

| Is member of sports team changed | Modifying statements about teams a sportsperson has played with |

| Is date of birth changed | Changing a person's date of birth |

| Is image changed | Changing the item image to an unrelated image |

| Is image of signature changed | Changing the signature image for people |

| Is category of this item at Wikimedia Commons changed | Changing the Commons category associated with an item |

| Is official website changed | Changing the official website property of an item |

| Is this item about a human | The item is an instance of human |

| Is this item about a living human | The item is an instance of human and living |

| Typical non-vandalism patterns | |

| Is it a client edit | When a user moves a page in Wikipedia (a client of Wikidata) or deletes the page, an edit is made in Wikidata to keep the link between the projects in sync. |

| Is it a merge | Merging (an action that is not enabled for new users) tends to drastically change an item's content. |

| Is it revert, rollback, or restore | These edits are actions performed by users trying to undo vandalism. |

| Is it creating a new item | The edit creates a new item |

| Editor characteristics | |

| Is the user a bot | The edit is performed by a bot, a very common practice in Wikidata. |

| Does the user have advanced rights | The user is a member of the 'checkuser', 'bureaucrat', or 'oversight' group and can perform advanced actions. |

| Is the user an administrator | Administrators are advanced users with a significant amount of contributions and trusted by the community of editors |

| Is the user a curator | The user is a member of the 'rollbacker', 'abusefilter', 'autopatrolled', or 'reviewer' group, privileges typically assigned to users with significant amounts of edits. |

| Is the user anonymous | The user is unregistered. |

| Age of editor | The time between the user account registration time and the timestamp of the edit, in seconds scaled using log(age + 1). |
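
Two of the features above can be sketched in a few lines. This is an illustration only: the function names and the input format (a mapping from language code to label text) are hypothetical, and treating a missing or negative age as zero is an assumption made here rather than a documented behavior of our classifier.

```python
import math


def scaled_age(registration_ts, edit_ts):
    """Editor age in seconds, scaled as log(age + 1).

    Assumption: ages that would be negative are clamped to zero.
    """
    age = max(0, edit_ts - registration_ts)
    return math.log(age + 1)


def count_changes(old, new):
    """Count added/removed/changed/current entries between two versions.

    old, new: dicts mapping language code -> label (or description) text.
    """
    return {
        "added": sum(1 for lang in new if lang not in old),
        "removed": sum(1 for lang in old if lang not in new),
        "changed": sum(1 for lang in new if lang in old and old[lang] != new[lang]),
        "current": len(new),
    }


old_labels = {"en": "San Francisco", "fr": "San Francisco"}
new_labels = {"en": "SF", "de": "San Francisco"}
print(count_changes(old_labels, new_labels))
print(scaled_age(registration_ts=0, edit_ts=86_400))
```

The logarithmic scaling compresses the difference between old accounts (one year versus five) while keeping the difference between a one-minute-old and a one-day-old account large.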

Evaluation

We use three metrics to evaluate the performance of our prediction model:

- ROC-AUC (which has been used historically in the vandalism detection literature) [8][6]

- PR-AUC (as called for by Adler et al. in their more recent work)[9]

- Filter-rate @ high recall (which measures the proportion of edits that do not need to be reviewed by Wikidata patrollers while a high percentage of all vandalism is still caught).

Our inclusion of "filter rates" in the evaluation of the performance of vandalism classifiers is intentional since our goal is to design classifiers that can be effectively used by Wikidata patrollers. As we improve fitness of the model, this filter-rate should increase and therefore the expected workload for patrollers should decrease: this metric directly measures theoretical changes in patroller workload.
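
As a concrete reading of this metric, the sketch below computes the filter-rate at a target recall by reviewing edits from the highest score downwards until enough vandalism has been seen. The function name and the toy data are hypothetical, and ties between scores are ignored for simplicity.

```python
def filter_rate_at_recall(labels, scores, min_recall):
    """Share of edits that can be skipped while still catching min_recall.

    labels: 1 for vandalism, 0 otherwise. scores: classifier output.
    """
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    total_pos = sum(labels)
    caught = 0
    for reviewed, (_, label) in enumerate(ranked, start=1):
        caught += label
        if caught / total_pos >= min_recall:
            return 1 - reviewed / len(ranked)
    return 0.0


# Toy data: 20 edits with distinct, descending scores; vandalism sits at
# ranks 1, 2, 4 and 10.
scores = [1 - i / 20 for i in range(20)]
labels = [1, 1, 0, 1, 0, 0, 0, 0, 0, 1] + [0] * 10

print(filter_rate_at_recall(labels, scores, min_recall=0.75))
print(filter_rate_at_recall(labels, scores, min_recall=1.0))
```

In the toy data, catching three of the four vandalism edits requires reviewing only the top 4 of 20 edits (a filter-rate of about 0.8), while catching all four requires reviewing the top 10 (a filter-rate of 0.5), which shows how the last few percent of recall can be disproportionately expensive.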

Results

In this section, we discuss the fitness of our model against the corpus and the real-time performance of the model as exposed via ORES, our live classification service for Wikidata patrollers.

Model fitness

All models were tested on the exact same set of 99,222 revisions withheld during hyper-parameter optimization and training. The table below shows general fitness metrics for models using different combinations of features. As the table suggests, we were only able to train marginally useful prediction models when excluding user features. These models attained extremely low PR-AUC values, and there was no threshold that could be set on the True probability that would allow for 75% of reverted edits to be identified to the exclusion of others, resulting in a zero filter-rate at that level of recall.

| features | ROC-AUC | PR-AUC | filter-rate |

|---|---|---|---|

| general | 0.777 | 0.01 | 0.936 at 0.62 recall |

| general and context | 0.803 | 0.013 | 0.937 at 0.67 recall |

| general, type, and context | 0.813 | 0.014 | 0.940 at 0.68 recall |

| general and user | 0.927 | 0.387 | 0.985 at 0.86 recall |

| all | 0.941 | 0.403 | 0.982 at 0.89 recall |

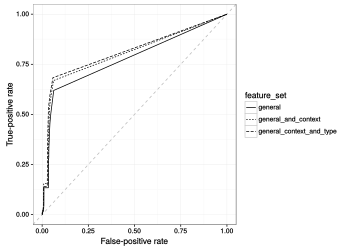

Figure #precision/recall (no user) and #precision/recall (user) plot precision-recall curves for the two sub-feature-sets. Figure #precision/recall (no user) visually confirms the very poor results of the classifier when no user features are included. At the scale of the graph it is difficult to confirm any meaningfully greater than zero precision anywhere on the recall spectrum. #precision/recall (user) shows a substantial difference. For the most part, the all features and general_and_user features classifiers seem to perform comparably well across the spectrum of recall. This suggests that the inclusion of context and edit type features on top of general and user-based features results in minor (if any) improvements.

Figures #filter-rate & recall (no user) and #filter-rate & recall (user) show the filter-rate of the classifiers across the spectrum of recall. The filter-rate quantifies the amount of effort saved for Wikidata patrollers (see #Methods). Here, we can see that models that don't include user features struggle to attain 70% recall at any filter-rate, while models that include user features are able to attain very high filter-rates up to 89% recall. This suggests a theoretical reduction in patrolling workload down to 1.8% of incoming human edits, assuming that it's tolerable to let 11% of potential vandalism be detected by other means.
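
To make the workload figure concrete, the arithmetic can be sketched as below. Combining the filter-rate with the roughly 80,000 human edits per day quoted in the introduction (a February 2015 figure) is an illustrative assumption, not a measurement of actual patroller workload.

```python
def daily_review_load(edits_per_day, filter_rate):
    """Edits per day left for human review after filtering."""
    return edits_per_day * (1 - filter_rate)


# 80,000 human edits per day and the 0.982 filter-rate of the "all" model.
load = daily_review_load(80_000, 0.982)
print(round(load))  # 1440
```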

Real-time prediction speed

Requests for a single revision typically respond in ~0.5 seconds, with rare cases taking 2-10 seconds. If the score has already been generated and cached, the system will generally respond in 0.01 seconds. This is a common use-case, since we run a pre-caching service that caches scores for edits as soon as they are saved. ORES also provides the ability to request scores in batch mode, which allows the service to gather basic data for feature extraction in batches as well. When requesting predictions in 50 revision batches, we found that the service responds in ~0.12 seconds per revision in the batch. As #ORES response timing (Wikidata-reverted) suggests, this timing varies quite widely, which is likely due to rare individual edits that take a long time to score and hold back the whole batch from finishing.

Limitations

A major limitation of our model building and analysis exercise is our approach to constructing our corpus. In our analysis of which edits and reverts are likely to represent vandalism in Wikidata, we used characteristics of the edit (e.g. is it a client edit? is it a merge edit?) and of the editor (e.g. is the user in a trusted group?) to identify vandalism. These characteristics are also included as features in our prediction model. If we applied these filters to our vandalism corpus inappropriately, we would have simply trained our classifier to match the arbitrary rules we put in place. We are confident that this is not the case in general, thanks to reports from Wikidata users who have been using our live classification service. These reports suggest that the classifier is effective at flagging edits that are vandalism. We also ran a follow-up qualitative analysis to help check whether our estimates of the filter-rate afforded by the "all features" model worked out in practice.

We randomly sampled 10,000 human edits and generated the corresponding vandalism prediction scores. We then manually labeled (1) the highest scored edits in the dataset (100 edits at more than 93% prediction), (2) all reverted edits in the dataset, and (3) a random sample of 100 edits from each 10% stratum of prediction weight (e.g. 30-40%, 40-50%, etc.). We found only 17 (0.17%) vandalism edits in the 10,000 set, and all of these vandalism observations scored 93% or more. Only 100 of the 10,000 edits were scored 93% or above, and by reviewing this 1% fraction of edits it was possible to catch all damaging edits. So, in this sample set, we were able to attain a 99% filter-rate with 100% recall by setting the threshold at 93%. This result looks substantially better on paper than the evaluation against the test set, and we think that is due to the inclusion of careful human annotation. It seems likely that many of the good edits that were mistakenly labeled as vandalism in our corpus show up as false negatives in our test set, pushing down our apparent filter-rate and recall. While this analysis is not as robust and easy to replicate as the formal analysis we described above, we feel that it helps show that our classifier may be more useful than it appears.

These concerns and limitations call for a PAN-like dataset for Wikidata that actually uses human judgement to identify vandalism edits rather than heuristics. Lacking such a dataset, the true filter-rates and the consequent reduction in workload for patrollers can only be discovered in the context of actual work performed by patrollers. The extremely low prevalence of vandalism edits in Wikidata means that we would need extremely large numbers of labeled observations to attain a representative set of vandalism edits -- probably in the order of 100,000-1,000,000. Furthermore, and unlike vandalism in Wikipedia, labeling vandalism in Wikidata requires reviewers who are both familiar with the structure of statements in Wikidata and are able to evaluate contributions across many languages.

Conclusion

In this paper we described a straightforward method for classifying Wikidata edits as vandalism in real time using a machine-learned classification model. We show that, using this model and our prediction service, it is possible to reduce the human labor involved in patrolling edits to Wikidata by nearly two orders of magnitude (98%). At the time of writing, several tools have adopted our service and are using the prediction model to patrol incoming edits. Our analysis and a substantial part of our feature set are informed by the real-world experience of patrollers who are using this classifier to do their work.

Future work should focus on two key areas: (1) the development of a high-quality vandalism test dataset for Wikidata and (2) the development of new features for Wikidata that draw from sources of signal other than a user's status as "untrusted". A high-quality vandalism dataset would provide a solid basis for comparing the performance of prediction models without the limitations we described above, and without relying on the qualitative intuitions we obtained by observing vandal fighting "in the wild". The development of high-signal features beyond a user's status is critical to designing quality control processes that are fair towards users of different categories. Our classification model is strongly weighted against edits by anonymous and new contributors to Wikidata, regardless of the quality of their work. While this may be an effective way to reduce patrollers' workload, it is likely not fair to these users that their edits be so carefully scrutinized. By increasing the fitness of this model and adding new, strong sources of signal, a classifier could help direct patrollers' attention away from good new/anonymous contributors and towards proper vandalism -- both reducing patroller workload and making Wikidata a more welcoming place for newcomers.

Acknowledgments

We would like to thank Lydia Pintscher and Abraham Taherivand from Wikimedia Deutschland, and Yuvaraj Pandian from the Wikimedia Foundation for operational support. We also thank Adam Wight, Helder Lima, Arthur Tilley and Gediz Aksit for their help, and the community of Wikidata editors for providing feedback and reporting mistakes.

References

- ↑ en:Wikipedia:Wikipedia Signpost/2015-12-02/Op-ed "Whither Wikidata"

- ↑ a b Giles, Jim. "Internet encyclopaedias go head to head". Nature 438 (7070): 900–901. doi:10.1038/438900a.

- ↑ Stvilia, Besiki; Twidale, Michael B.; Smith, Linda C.; Gasser, Les (2008-04-01). "Information quality work organization in Wikipedia". Journal of the American Society for Information Science and Technology 59 (6): 983–1001. ISSN 1532-2890. doi:10.1002/asi.20813.

- ↑ Geiger, R. Stuart; Halfaker, Aaron (2013-01-01). "When the Levee Breaks: Without Bots, What Happens to Wikipedia's Quality Control Processes?". Proceedings of the 9th International Symposium on Open Collaboration. WikiSym '13 (New York, NY, USA: ACM): 6:1–6:6. ISBN 9781450318525. doi:10.1145/2491055.2491061.

- ↑ a b Geiger, R. S., & Ribes, D. (2010, February). The work of sustaining order in wikipedia: the banning of a vandal. In Proceedings of the 2010 ACM conference on Computer supported cooperative work (pp. 117-126). ACM.

- ↑ a b c William Yang Wang and Kathleen R McKeown. Got you!: automatic vandalism detection in wikipedia with web-based shallow syntactic-semantic modeling. In Proceedings of the 23rd International Conference on Computational Linguistics, pages 1146–1154. Association for Computational Linguistics, 2010.

- ↑ a b Manoj Harpalani, Michael Hart, Sandesh Singh, Rob Johnson, and Yejin Choi. Language of vandalism: Improving wikipedia vandalism detection via stylometric analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies: short papers-Volume 2, pages 83–88. Association for Computational Linguistics, 2011.

- ↑ a b c B Adler, Luca de Alfaro, and Ian Pye. Detecting wikipedia vandalism using wikitrust. Notebook papers of CLEF, 1:22–23, 2010.

- ↑ a b c B Thomas Adler, Luca De Alfaro, Santiago M Mola-Velasco, Paolo Rosso, and Andrew G West. Wikipedia vandalism detection: Combining natural language, metadata, and reputation features. In Computational linguistics and intelligent text processing, pages 277–288. Springer, 2011.

- ↑ L. Breiman, “Random Forests”, Machine Learning, 45(1), 5-32, 2001.

- ↑ http://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html

- ↑ https://wikisource.org

- ↑ Footnote to some page about the WMF

- ↑ Footnote a link to some docs about wikibase

- ↑ Stvilia, B., Twidale, M. B., Smith, L. C., & Gasser, L. (2005, November). Assessing Information Quality of a Community-Based Encyclopedia. In IQ.

- ↑ Warncke-Wang, M., Cosley, D., & Riedl, J. (2013, August). Tell me more: An actionable quality model for wikipedia. In Proceedings of the 9th International Symposium on Open Collaboration (p. 8). ACM.

- ↑ Kittur, A., & Kraut, R. E. (2008, November). Harnessing the wisdom of crowds in wikipedia: quality through coordination. In Proceedings of the 2008 ACM conference on Computer supported cooperative work (pp. 37-46). ACM.

- ↑ Arazy, O., & Nov, O. (2010, February). Determinants of wikipedia quality: the roles of global and local contribution inequality. In Proceedings of the 2010 ACM conference on Computer supported cooperative work (pp. 233-236). ACM.

- ↑ Schneider, J., Gelley, B. S., & Halfaker, A. (2014, August). Accept, decline, postpone: How newcomer productivity is reduced in English Wikipedia by pre-publication review. In Proceedings of The International Symposium on Open Collaboration (p. 26). ACM.

- ↑ Smets, K., Goethals, B., & Verdonk, B. (2008, July). Automatic vandalism detection in Wikipedia: Towards a machine learning approach. In AAAI workshop on Wikipedia and artificial intelligence: An Evolving Synergy (pp. 43-48).

- ↑ a b Geiger, R. S., & Halfaker, A. (2013, August). When the levee breaks: without bots, what happens to Wikipedia's quality control processes? In Proceedings of the 9th International Symposium on Open Collaboration (p. 6). ACM.

- ↑ Geiger, R. S., & Ribes, D. (2010, February). The work of sustaining order in wikipedia: the banning of a vandal. In Proceedings of the 2010 ACM conference on Computer supported cooperative work (pp. 117-126). ACM.

- ↑ Footnote: https://en.wikipedia.org/wiki/Receiver_operating_characteristic#Area_under_the_curve

- ↑ West, A. G., Kannan, S., & Lee, I. (2010, April). Detecting wikipedia vandalism via spatio-temporal analysis of revision metadata. In Proceedings of the Third European Workshop on System Security (pp. 22-28). ACM.

- ↑ footnote: Link to a help about watchlists

- ↑ footnote: Link to RC itself

- ↑ footnote: Link to page in Wikidata

- ↑ footnote: Link to Kian github

- ↑ https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2015-12-02/Op-ed

- ↑ a b c d Stefan Heindorf, Martin Potthast, Benno Stein, and Gregor Engels. Towards vandalism detection in knowledge bases: Corpus construction and analysis. In Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, pages 831–834. ACM, 2015. SIGIR 2015 Poster

- ↑ Tan, Chun How; Agichtein, Eugene; Ipeirotis, Panos; Gabrilovich, Evgeniy (2014-01-01). "Trust, but Verify: Predicting Contribution Quality for Knowledge Base Construction and Curation". Proceedings of the 7th ACM International Conference on Web Search and Data Mining. WSDM '14 (New York, NY, USA: ACM): 553–562. ISBN 9781450323512. doi:10.1145/2556195.2556227.

- ↑ footnote: Freebase, Google's knowledge base, is currently being shut down in favor of Wikidata.

- ↑ a b Potthast, M. (2010, July). Crowdsourcing a Wikipedia vandalism corpus. In Proceedings of the 33rd international ACM SIGIR conference on Research and development in information retrieval (pp. 789-790). ACM.

- ↑ https://meta.wikimedia.org/wiki/Research:Revert#Identity_revert

- ↑ Link to wb-vandalism in github