Wiktionary future/gl

- see also wikidata:Wikidata:Wiktionary and Wikidata/Notes/Future

This page collects ideas for Wiktionary's future. The introduction of Wikidata opens up many possibilities, and the proposal to adopt OmegaWiki adds to the current activity around projects with overlapping goals.

The first goal is to set out what is specific to each project and where the projects overlap. Suggestions on how to distribute the non-overlapping goals without wasting resources on redundant work can then be discussed. Finally, this page should be used to present and debate long-term plans concerning Wiktionaries.

Organisation of this page

To help this page grow in a useful manner, a recommended structure has been proposed. You can of course share your own ideas on how to structure the page on the talk page.

Each proposal section should come under the Proposals in the following form:

- Proposal title

- a level 2 section (===) which gives a hint of the subject.

- Title Abstract

- a level 3 section (====) which provides a very short description of the proposal.

- Title Current Situation

- a level 3 section (====) describing the shortcomings of the current situation.

- Title Recommended improvement

- a level 3 section (====) describing them, perhaps using subtitles on level 4 (=====).

- Title Comments on proposal

- a level 3 section (====), initially empty, filled by others.

Here is a template:

=== Proposal title ===

==== Abstract ====

==== Current Situation ====

==== Recommended improvement ====

==== Comments on proposal ====

Proposals

Gathering information on the Wiktionary project

Abstract

To make relevant recommendations for Wiktionary's future, a survey of the current state of the art should be carried out first. A document should summarize, and provide references on:

- Wiktionary history: initial goals and implementation choices.

- Current state of the Wiktionaries: similarities and differences in how articles are structured in each language edition.

- Inventory of related projects such as OmegaWiki and Semantic MediaWiki: why did they emerge out of Wiktionary, and can their goals and Wiktionary's goals be merged into a single project?

- The expected pros and cons of a more structured Wiktionary. Structure would obviously help language editions feed data back to one another automatically. On the other hand, the most important part of MediaWiki projects is their community. With a structure shared across editions, contributors could become frustrated by a feeling of losing control, especially if existing local specificities become impossible within that structure. Ways to prevent this should be investigated: broad feedback campaigns, flexible structures, etc.

Current Situation

As far as it is known, no such report exists yet.

Recommended improvement

The report should be created; it could be suggested as a mentorship project idea.

Comments on proposal

Take an inventory of expected usage

Abstract

How data is structured makes some uses easier and others harder. A list of expected uses would therefore help in designing a structure that supports them. Of course, it is not possible to guess every future use that may be imagined, but taking into account all the ideas that can be gathered now would be helpful.

Current Situation

Extracting structured information from articles and database dumps can be very hard: their content is geared solely towards generating Wiktionary HTML pages and sometimes relies heavily on templates that are hard to interpret outside the MediaWiki engine.

Recommended improvement

List other use cases for Wiktionary content and work out the requirements needed to support them.

Comments on proposal

Improving Wiktionaries data model

Abstract

While the wiki way has largely proved its advantages, some drawbacks remain, which the community is willing to overcome by structuring some elements and centralizing common data, for example through the Wikidata initiative.

Current Situation

Each language version of Wiktionary has its own way of structuring data, making it harder to share common data such as translations, pronunciations and word relations like synonymy and antonymy.

Recommended improvement

Involve contributors from all Wiktionary versions in defining a global structure for Wiktionary entries, possibly with defined ways to extend the structured data, so that each community can continue to develop the specificities it needs while remaining a good player in the global cross-version collaboration.

- Defining usages which wiktionary should easily enable

- Defining what should be a wiktionary entry:

- what data should be mandatory

- how to indicate that a piece of data is lacking because

- it's irrelevant for the entry

- it needs a contribution

- (put other ideas here)

- (add other ideas)
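The distinction above between data that is lacking because it is irrelevant and data that still needs a contribution could be sketched as follows. This is only an illustration under assumptions: the names `FieldStatus`, `EntryField` and `open_tasks` are hypothetical and do not come from any existing Wiktionary or Wikidata code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FieldStatus(Enum):
    """Hypothetical states of one piece of data in an entry."""
    PRESENT = "present"                        # a value has been contributed
    NOT_APPLICABLE = "not applicable"          # irrelevant for this entry
    NEEDS_CONTRIBUTION = "needs contribution"  # relevant, but still missing


@dataclass(frozen=True)
class EntryField:
    name: str
    status: FieldStatus
    value: Optional[str] = None

    def __post_init__(self):
        # A value must be given exactly when the status is PRESENT.
        if (self.status is FieldStatus.PRESENT) != (self.value is not None):
            raise ValueError(f"{self.name}: value and status disagree")


def open_tasks(fields):
    """Return the names of the fields that still need a contribution."""
    return [f.name for f in fields if f.status is FieldStatus.NEEDS_CONTRIBUTION]


# Example: fields of an English entry for "house".
fields = [
    EntryField("pronunciation", FieldStatus.PRESENT, "/haʊs/"),
    EntryField("gender", FieldStatus.NOT_APPLICABLE),
    EntryField("etymology", FieldStatus.NEEDS_CONTRIBUTION),
]
print(open_tasks(fields))  # ['etymology']
```

With an explicit status, a tool can list what is genuinely missing without nagging contributors about fields that make no sense for the entry.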

Proposal ideas

Comments on proposal

Improving Wiktionary data model

Abstract

Current Situation

Recommended improvement

Comments on proposal

Work and discussions on wiktionary future

Wikimania 2013 Meeting about the support of Wiktionary in Wikidata

During Wikimania 2013, a meeting about the support of Wiktionary in Wikidata was organized. The following notes were taken during the event, and a cross-list discussion accompanied it online.

11:30-13:00, room Y520

Attendees: Micru, Aude, Nikerabbit, Duesentrieb, Jaing Bian, Denny, Chorobek, Linda Lin, Amgine (via Skype), Stepro, Vertigo (via Skype), Nirakka, GWicke, Max Klein

Micru presenting on several different data models:

- several Wiktionaries

- OmegaWiki

- WordNet

- Lemon model

- DBpedia Wiktionary

Denny says:

- The idea of this meeting is to discuss and move the discussion forward, not to decide anything. This is obviously not the right set of people to decide anything about Wiktionary.

- Our primary goal is not to create a lexical resource of our own, but to provide support for the Wiktionaries

Amgine says:

- Interwiki link setup of Wiktionary is rather complex

- Difference between words/lexemes and concepts

- Terminological questions around senses, lexemes, definitions...

Stepro says:

- To have simple form-based user interfaces that allow editing structured data

- The structured data to be available to all languages

- Maybe a Wiktionary conference, but how to start it?

- (Denny: afraid that if Wikidata invites to a conference it would not fly)

German Wiktionary would love to have this kind of structured data

- Teestube on German Wiktionary, Beer parlor and Grease Pit on English, and IRC channel for English on different times of day

Further: English Wiktionary has a common talking area called "Grease pit" for technically inclined Wiktionarians

Vertigo says: It would be great to try this stuff out beforehand

- (Denny) problem is that we need to know what to develop. Changing it later is expensive

- (Micru) maybe a mock up?

- (Vertigo) mock up is a very good idea, a sketch that displays things

Stepro says:

- First Wiktionary meeting was this year, next one probably in Spring

GWicke says:

- Parsoid does parsing of Wikitext

- Get Template and template blocks through JavaScript

- If Wiktionary has any special requirements to the Visual Editor, please contact Gabriel Wicke

- First demo available on parsoid.wmflabs.org

GWicke: Have you seen the editor special characters on en.WT? Is this an extension or gadget?

Contributions which need to be structured and merged into proposals

Denny's questions

For me, the proposals here are a bit advanced. I would like to gather first a bit of background, and I would very much appreciate it if we could gather it together.

The page below contains a mix of data model ideas, use cases, and requirements. I would suggest that this page get sorted out and divided into different points, discussing the use cases, other requirements, and the data model. For the data model I would very much like to see short descriptions of the existing data models of OmegaWiki, two to three successful Wiktionaries, WordNet, and maybe VerbNet and FrameNet. Such a background would be extremely helpful in building our data model.

Does anyone feel like starting to prepare this site appropriately and structure a discussion? --denny (talk) 10:28, 3 April 2013 (UTC)

Comments

- Good proposal, but I think we need a target clarification before thinking about the tools to reach them (Wikidata, OmegaWiki, WordNet, VerbNet etc.). I made a (sketch) proposal below, before adding the title "Targets, business-model of the proposals presented here?", not knowing WT Project's WT business-model. Does it have one? Where can I find it? Is it really open for long term developments reaching far into the future? [1][2] Knowing the long-term goals, we are better able to make good proposals and assess their (imagined) value. But this doesn’t answer your question how to structure the proposals. I propose short term, mid-term and long-term. Some mid- and long-term proposals may need a bunch of short term proposals as prerequisite. NoX (talk) 17:38, 3 April 2013 (UTC)

- ↑ E.g. Add voice recognition: Travelling in China and having an understanding problem, I take my mobile phone and ask SIRI in English: What does this mean in English?, holding the phone to my partner's mouth and my partner says: 朋友. I expect SIRI (or something better) to use the WT Project Database (because it is still simply the best), a WT function translates the sound into an IPA string, starts a search in the WT Database and delivers the feedback. SIRI responds: You are currently in China (my GPS position is activated) the word’s meaning could be friend in Mandarin. (Does anybody think this could be achieved using the current WT database structure?)

- ↑ The idea to have voice input "translated" into IPA is intriguing but currently not realistic. We would need an almost complete overhaul of IPA. A few obstacles among many: (1) How to transcribe utterances is language dependent in several ways. (2) Acoustic-only recognition and discrimination of periods of silence (pauses, glottal stops, plosives) is not possible. (3) Prosody conveys meaning but IPA provides too few means to write it down.

I do not regard the data model of OmegaWiki, the Wiktionaries, WordNet etc. as a tool. I regard them as clearly related work with some decades of experience flowing into them. Just making up a new data model without explicitly describing existing ones that already have gone through a few good reality tests seems like a potential waste of time, assuming one is not a genius or extremely experienced in the given field. So, I still stick to my request: please let us first describe the existing data models of a few Wiktionaries, of OmegaWiki, and of WordNet at least, before we introduce completely new ones. --denny (talk) 11:14, 4 April 2013 (UTC)

Targets, business-model of the proposals presented here?

Adding a proposal here needs to pose the question to oneself: Who will benefit from my proposal?

- Who are my potential customers, the final users of my proposal?

- What is the business model behind it?

- Will it lead to a win-win-situation

- for me (only for me?),

- for the worldwide WT Project,

- for the worldwide (hopefully expanding) user group: Reading users, contributing users or even (very essential) donating users?

Here the win-win-situation can exist between two parties: The WT Project itself and its users.

WT users

The WT project will prosper in the long term only if it satisfies the true needs of a broad spectrum of users, correctly assesses and continues to evolve in the direction of satisfying the needs of this user group (concerning language), currently and in the future.

This user group will only be willing to pay for it (in the form of contributions and, absolutely necessary for the WT infrastructure, donations, and hopefully not by enduring ads) if the WTs are of high and rising quality and growing ease of use (easy to understand AND easy to contribute to).

This leads to the question, posed in every business: Who are the WT Project users (world-wide)?

I, personally, see the following user groups:

- The monolingual WT user

- The bilingual WT user.

- WT data suckers: Competitors that suck data from WT.

Within the first two groups, you have to distinguish between common users (merely looking for a word; learning a foreign language) and language experts (translators, people who studied the language). Surely the common users are the bigger user base. A smaller part of the common users will be able to contribute. The easier WT can be used the more (mass) input can be produced by them. WT Project should also fulfill the needs of the language experts. Do the interests of language experts match the interests of common users? I think: No. But they can coexist in a good way. Do the language experts understand the real needs of the common users? Sometimes my feeling is: No.

What are the sizes of these user bases to estimate the real, general benefit of a good proposal?

Proposal of an improved Wiktionary data model

- NoX: Withdrawal of the proposition.

- Reasons:

- This proposal has been done from an average layman’s view of Wiktionary, especially Wiktionnaire, reflecting the usage needs of a common cross WT user (see Targets ... above), not knowing in detail all the things that run in parallel (e.g. OmegaWiki, Wikidata etc.).

- Improvement of Wiktionary data model is not the proper title. The gaps and deficiencies concerning translations and translation examples in current WTs were the main reason. These gaps are also clearly identified by OmegaWiki and treated in the way I propose (structured data), but the way it is realized does not seem reasonable to me (two different language worlds).

- It’s not a single proposal but a bunch of proposals targeting a one and only one WT world.

- It’s a bottom-up proposal, not knowing the top-down targets (see Targets ... above).

- So I still stand behind all my proposals (some from a slightly different view, plus some additional ones), searching for a way to address them better. NoX (talk) 20:31, 6 April 2013 (UTC)


Abstract: The following proposal aims at improving the internal Wiktionary data structures. The current structures, mostly based on text and mark-up, with more or less accidental quality of data content, should be moved, step by step, into a more suitable, more easily understandable and usable data model that represents the real long-term needs of the subject. The view is that of a cross-Wiktionary user. The target is described, not the road map.

Currently: One Wiktionary project contains m Wiktionaries (m = many). Each Wiktionary contains m Wikis. Each Wiki contains one (and only one) text block containing text intermixed with mark-up. Such a text block can contain 1 : m words of the same or different languages (not necessarily the language of the Wiktionary itself). Each word can contain the word-token itself (string of single or double byte characters), its type of word (noun, verb etc.), its gender and other characteristics (if it has any), 1 : m pronunciations, 1 : m word-meanings, 0 : m (word) expressions, and 0 : m references (links) to derived words. Each word-meaning has 1 : m translations into 1 : m languages. Each translation contains 2 links (French viewpoint): one into the same Wiktionary, to a Wiki containing a word of the language into which it is translated, and a second link to the Wiktionary of the language referenced, to a Wiki containing the translated word. So much for the well-known stuff. See also [WIKI, INTERWIKI].

Recommendations:

The Wiktionary project is an admirable worldwide project that greatly helps to improve worldwide communication. Therefore the recommendations presented are those of a cross-language-boundary-user, a user, that is not focused on only one language within one Wiktionary.

1. Use only ONE tag-standard, ONE model-standard and ONE model-sequence-standard across ALL Wiktionaries.

German Wiktionary, French Wiktionnaire and English Wiktionary use different tags and model structures.

e.g.:

- (German) Translation mark-up: *{{fr}}: [1] {{Ü|fr|maison}} {{f}}, ''Normannisch:'' maisoun

- (French) Translation mark-up: * {{T|de}} : {{trad+|de|Haus}} {{n}} or * {{T|de}} : {{trad+|de|Haus|n}}

- (English) Translation mark-up: * French: {{t+|fr|maison|f}}

Some mark-up tags exist in parallel.

- e.g.: the gender mark-up '|f' (feminine) at a defined place and {{f}} (anywhere).

At first glance this may seem a little narrow-minded.

But the creation of tools to update the tag-standard version would be facilitated, and such tools could be used broadly across Wiktionary boundaries. The lack of standardization prevents functions that were created once from being transferred, e.g. to automate data transfer into another Wiktionary in order to update translations automatically. In the same way, all automated functions could be used in one version for all Wiktionaries.
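To illustrate what a cross-Wiktionary tool has to cope with today, here is a minimal sketch that reads the three translation mark-up variants quoted above into one common record. It is an assumption-laden illustration, not an existing tool: the names `Translation` and `parse_translation` are hypothetical, and only the exact template forms shown in the examples above are handled (real templates accept more parameters).

```python
import re
from typing import NamedTuple, Optional


class Translation(NamedTuple):
    lang: str               # language code of the translation, e.g. "fr"
    word: str               # the translated word, e.g. "maison"
    gender: Optional[str]   # "m", "f", "n" or None if not given


# Matches {{Ü|..}}, {{trad|..}}/{{trad+|..}}/{{trad-|..}} and {{t|..}}/{{t+|..}}/{{t-|..}}
# with an optional gender, given either inline (|f) or as a following {{f}}.
_TRANSLATION = re.compile(
    r"\{\{(?:Ü|trad[+-]?|t[+-]?)"   # template name
    r"\|([^|{}]+)"                  # language code
    r"\|([^|{}]+)"                  # translated word
    r"(?:\|([mfn]))?"               # optional inline gender
    r"\}\}"
    r"(?:\s*\{\{([mfn])\}\})?"      # optional standalone gender template
)


def parse_translation(line: str) -> Optional[Translation]:
    """Extract the first translation item from one line of wikitext."""
    match = _TRANSLATION.search(line)
    if match is None:
        return None
    lang, word, inline_gender, standalone_gender = match.groups()
    return Translation(lang, word, inline_gender or standalone_gender)


examples = [
    "*{{fr}}: [1] {{Ü|fr|maison}} {{f}}, ''Normannisch:'' maisoun",  # German
    "* {{T|de}} : {{trad+|de|Haus}} {{n}}",                          # French
    "* {{T|de}} : {{trad+|de|Haus|n}}",                              # French
    "* French: {{t+|fr|maison|f}}",                                  # English
]
for line in examples:
    print(parse_translation(line))
```

Every additional Wiktionary with its own template names adds another branch to such a pattern, which is the maintenance cost a shared standard would avoid.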

I propose to use the English tag-, model- and model-sequence standard as general standard.

In this case the current English HELP description for the mark-up language should be the authoritative one. Those for other Wiktionaries should merely be translations of it. Currently (in the French Wiktionnaire) the partly poor, partly outdated and inaccessible model descriptions hamper broad usage by users who are willing to contribute but lack know-how.

2. Avoid duplicates in different Wiktionaries.

[NoX: Modified 28 March 2013.]

In my opinion, it’s an incredible waste of time and effort that the same word with the same language code exists in each Wiktionary (WT).

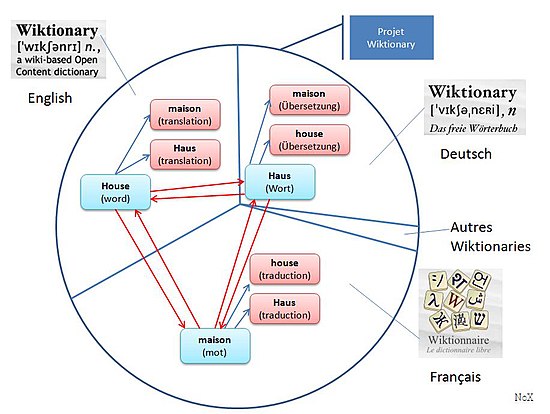

All words of a foreign language (other than the host language of the WT (e.g. non-French words in French WT)) should be eliminated. In image 1 I address all words in pink. In my opinion they are abused as best word representation in this language. They are redundant and in some respect their production is an unnecessary waste of time for contributing users. This effort could be used for better purposes: to improve bilingual translation examples.

In the 3-Wiktionary view of image 1 this means:

- Haus in the English [1] and French Wiktionary [2] should be eliminated.

- Maison in the German and English Wiktionary should be eliminated.

- house in the German and French Wiktionary should be eliminated

and substituted by another ENTITY as described in the next chapter (see the TransEx-Entity).

To explain this, let me first take the perspective of a (reading (opposite to contributing)) user, mother language English, strongly interested in French (cross WT view). If he looks for a French word representing house, not knowing maison, he could use English WT house, take the translation reference type 2 (see image 3), cross over to the French WT by clicking on that link and get full and best information concerning maison. I’m sure, the language information concerning maison he finds there is the best one he can find in ANY other WT of WT project. After having enriched his knowledge concerning maison, why should he add this word to English WT (if he is a contributing user)? The other way round (user mother language English interested in French) it works same way.

So my argument is:

- The best (language) Information concerning house you will find in the English WT.

- The best information concerning maison you will find in the French WT.

- The best information concerning Haus you will find in the German WT etc.

Generally best information concerning a word in a specific language you will find in its host WT (blue words in image 1). All representations of these words in non-host-WTs (those represented in pink in image 1) are generally of lower quality.

BUT: I know this is not the whole truth. In reality these pink WIKI words not only contain redundant information concerning this word itself (e.g. pronunciation, gender, declination, conjugation, etc.).

They also provide:

- A synonym reference to synonyms in host language.

Synonym references are currently of better quality within the pink words (image 1) than in the blue ones. E.g. a contributor with mother language English better knows the English synonyms for maison. But why should he add them to maison in the English WT as he does today? Wouldn’t it be better to improve the translations of maison in the French WT? This would make the blue words to be used as synonym references. (I know this touches the problem of differences in WT mark-up which hampers updating other WTs: different translation mark-up tags. See my recommendation 1: Use only ONE tag-standard across all WTs.)

- A bilingual representation of the usage of a word in a defined bilingual meaning/sense context (translation examples).

Today they are in my opinion the only strong reason for the existence of the words in pink (which I propose to remove). The true sense of these bilingual representations is that they give the reading user a hint, a helping hand, on how a word is typically and properly used in a (bilateral) language context: in the form of examples, translated into the other (!?!) language. This decisive information must be kept and survive. I propose to transfer it into the TransEx-Entity (Translation examples). Its content should be composed (in my example) from house in the French WT, and from maison in the English WT. Bilaterally.

3. Improve the data model. Introduce IDs and attributes.

The data model generally proposed for Wiktionaries is represented as an ERM diagram in crow's foot notation. Data modelling and data representation (as shown to the user or edited) are two different things.

Primary Entity-Type shown is Word. It should only contain the basic information representing one single word in a defined language (Wiktionary language code), and word type (verb, substantive etc.).

To understand the intention of the data model, it is necessary to define the identifier of Word. The unique identifier, the key of Word, should be composed of the following attributes:

- Word-token. String of single or double byte characters representing the written word in any typeface.

- Language code.

- Word-type. Defined permissible word-types in relation to language code. E.g. substantive, verb, adjective etc.

- Homonym-token. String of single or double byte characters in any typeface to discern homonyms (words with the same word-token, same language and same word-type). Normally empty.
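The four-part key listed above could be sketched as a small immutable record. This is a hedged illustration only: the class name `WordKey`, its field names and the stored values are hypothetical, and "noun" is used here where the text says "substantive".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WordKey:
    """Composite identifier of a Word (all names are illustrative)."""
    token: str         # word-token: the written word
    language: str      # language code, e.g. "de"
    word_type: str     # word-type, e.g. "noun" or "verb"
    homonym: str = ""  # homonym-token; normally empty


# A frozen dataclass is hashable, so the key can index a plain dict.
entries = {
    WordKey("Haus", "de", "noun"): "entry for German Haus",
    # Two homonyms: same token, language and word-type, told apart by the
    # homonym-token.
    WordKey("bank", "en", "noun", "1"): "entry for bank (financial institution)",
    WordKey("bank", "en", "noun", "2"): "entry for bank (edge of a river)",
}

print(entries[WordKey("Haus", "de", "noun")])
```

Because every attribute is part of the key, changing any one of them (for example correcting the word-type) means storing the entry under a new key, which matches the "database-move-process" mentioned in the comparison further down.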

Each Word has one or more Meanings. Meanings are currently represented in Wikis by

- (example DE:) :[1] [[Unterkunft]], [[Gebäude]],

- (example FR:) # {{architecture|fr}} [[bâtiment|Bâtiment]] [[servir|servant]] de [[logis]], d’habitation, de demeure.

- (example EN:) # {{senseid|en|abode}} A structure serving as an [[abode]] of human beings.

Each Meaning-Entity should contain one and only one meaning and one or more characteristic sentences (repetition is not shown in image 2), using the word under this specific meaning (as currently). Meanings should be ordered by relevance.

Each Meaning has 0 to n Translations. Each Translation has two references.

I propose to substitute reference type 1 (see image 3) to refer to TransEx-Entity (bi-lingual translation examples). This seems to me to be a decisive change. Reference type 2 should be kept. It needs to point to a word in the language referenced by the translation.

Establishing the TransEx-Entity (bi-lingual translation examples) avoids all redundancies currently met at foreign language words in one defined Wiktionary. In data-modelling terms, it is a relationship-type entity that resolves the many-to-many relationships between a specific word meaning in different languages. Its content is bi-lingual. It does not belong to one Wiktionary. It’s a bridge between two Wiktionaries.

What could its content look like? Example: TransEx between German first meaning of DE: Haus and French first meaning of FR: maison. (Examples taken from (DE: maison) and (FR : Haus).)

- Signification – Bedeutung

- DE: Haus im Sinne von: [1] Unterkunft, Gebäude

- FR: Maison en sens de: (Architecture) Bâtiment servant de logis, d’habitation, de demeure.

- Exemples – Beispiele

- FR: [1] Dans quelle maison est-ce que tu habites?

- DE: In welchem Haus wohnst du?

- FR: Sa maison se trouvait seule sur une colline. De là, on avait une vue sur les toits des autres maisons du village.

- DE: Sein Haus stand einsam auf einem Hügel. Von dort blickte man über die Dächer der anderen Häuser der Stadt.

All other information in TransEx should be avoided. E.g.: Word-type, gender, pronunciation, translation. See German example of (DE: maison). They are superfluous and redundant at this place. This information consists of attributes of other entities, mostly of the entity Word itself.
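The TransEx idea described above, a bridge record between two meanings that carries nothing but example pairs, could be sketched like this. It is a rough illustration under assumptions: `MeaningRef`, `TransEx`, the field names and the dictionary used as storage are all hypothetical, and the sentence pair is taken from the example above.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class MeaningRef:
    """Points at one meaning of one word (field names are hypothetical)."""
    language: str  # language code of the word, e.g. "de"
    word: str      # the word-token, e.g. "Haus"
    sense: int     # number of the meaning within that word, starting at 1


@dataclass
class TransEx:
    """Bilingual bridge between two meanings; holds only example pairs."""
    left: MeaningRef
    right: MeaningRef
    examples: List[Tuple[str, str]] = field(default_factory=list)

    @property
    def key(self) -> FrozenSet[MeaningRef]:
        # Unordered pair: DE->FR and FR->DE resolve to the same record.
        return frozenset((self.left, self.right))


haus = MeaningRef("de", "Haus", 1)
maison = MeaningRef("fr", "maison", 1)

bridge = TransEx(haus, maison)
bridge.examples.append(
    ("In welchem Haus wohnst du?", "Dans quelle maison est-ce que tu habites?")
)

store: Dict[FrozenSet[MeaningRef], TransEx] = {bridge.key: bridge}

# Looking the pair up from the French side finds the same record.
found = store[frozenset((maison, haus))]
print(found.examples[0][1])
```

Keying the record on an unordered pair reflects the point that a TransEx belongs to neither Wiktionary: both sides reach the same examples.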

Other entities: They seem to be self-explaining. Not all of them are detailed.

Comparison between the current data model and the proposed one.

ID of Word.

Currently the ID of a WIKI is only the character-string, representing a word.

The proposed ID of a Word consists of several attributes that should be put into separate (database) data-fields, not into text mark-up. (A change of one of these ID attributes would result in a database-move-process of the word.)

Other common attributes.

They could be put into a text-container, containing the well-known, hopefully standardised mark-up. Such a container could also contain (as currently) repeating groups, e.g. translated sentence pairs in TransEx. The same could be the case with entities like See, Expression, Derived word etc. Another possibility could be to put them into separate database elements.

TransEx entity.

As described, this entity represents the deep and broad Jordan River, which has to be crossed.

Future presentation and editing.

If you look at the English style of presentation I do not see big differences (besides the not-yet-extant TransEx entity).

One thing that really needs improvement in the presentation area is the display of translations. The currently usable roll-in roll-out mode seems to me to be simple-minded. An experienced cross-language user is interested in only two or three languages. It should be possible for users to define a roll-out mode that shows only the translations in the languages they have requested.
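The user-defined roll-out mode could be as simple as the following sketch. The function name `visible_translations` and the shape of the data (a mapping from language code to a list of words) are assumptions made for the illustration.

```python
def visible_translations(translations, preferred_languages):
    """Keep only the translations in the languages the user asked to see."""
    wanted = set(preferred_languages)
    return {lang: words for lang, words in translations.items() if lang in wanted}


# Translations of English "house"; the user is only interested in German and French.
translations = {"de": ["Haus"], "fr": ["maison"], "es": ["casa"], "it": ["casa"]}
print(visible_translations(translations, ["de", "fr"]))
# {'de': ['Haus'], 'fr': ['maison']}
```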

A big challenge seems to me to be the future editing process. It needs to be greatly improved. Preferably window based pop-up sequences, oriented at the entity structure, containing input fields that do not require the knowledge of the mark-up, except perhaps in an expert mode.

Advantages of the proposal.

- One general, commonly usable data structure.

- The limits between the Wiktionaries could be demolished. All Wiktionaries could be put into one worldwide Wiktionary-Project data pot.

- One general, worldwide usable mark-up language would be established.

- Functions need to be developed only once and can be used in all Wiktionaries. (I know this will kill the beloved babies of many a Wiktionary power user.)

- Automation processes (automated content controlling, automated Word-stub creation, automated translation transfer, mark-up upgrade etc.) could be greatly improved.

- No word redundancies, less editing effort.

Disadvantages of the proposal.

- Strong effort is needed. The TransEx entity is a broad and deep Jordan River to be crossed.

- The theme in its entirety is difficult to communicate between single language users, database experts focused on the WIKI Data Structure, language experts and those who need to create a Wiktionary-project data representation style-guide.

Your opinion?

Eirikr's comments (and replies to them)

- Wow. That's a lot to digest.

- Allow me to say for now that the underlying idea, of better data portability, is a good one.

- However, your proposal here calls for unifying many many things that really have no business being unified -- many of these variant aspects are different at least in part because they meet the needs of different user communities. For instance, the wikitext markup used in translation tables reflects the host language of each Wiktionary. The DE WT uses {{Ü}} to stand in for Übersetzung; the FR WT uses {{trad}} as shorthand for traduction, since {{t}} there is used to stand in for transitif; the EN WT uses {{t}} as shorthand for translation, since there wasn't the same name collision as on the FR WT. Requiring that all Wiktionaries use {{t}} for translation table items might make English speakers happy, but it would be a poor mnemonic for editors of languages where the relevant term for translation does not start with a "t". It would also require renaming any other existing templates already at {{t}}, and then going through all entries that referenced the previous name to update with the new name.

- This does not even begin to address the more complicated issue that different Wiktionaries employ sometimes very different entry structures because of the different ideas about grammar and linguistics held by the different user communities. If you are serious about moving forward with this proposal, I strongly recommend that you do some grass-roots building by addressing each Wiktionary user community directly. I'm pretty much never over here on Meta, and the only reason I learned of your proposal was thanks to another editor who posted on wiktionary:Wiktionary:Beer_Parlor about this. I suspect that I am not alone in having missed this post earlier. -- Eiríkr Útlendi │ Tala við mig 22:05, 19 March 2013 (UTC).

- I think your worry about the translated templates is not as much of a problem as you think. It wouldn't be difficult to have a "code-common" where templates have a name in English, with each local chapter offering a wrapper template which translates it (as well as the documentation). But I think that you are nonetheless right about the importance of doing such structuring with the community. We must communicate on this project and work with the whole community, so that every regular contributor will know about it and, hopefully, will be enthusiastic about getting involved, so that their current specificities can be preserved by making a structure flexible enough for every use case. --Psychoslave (talk) 13:57, 22 March 2013 (UTC).

- Re: localized labels for various features, your idea of a wrapper is probably a good one. Now that we have Lua, that might be less of a concern, though I have read that editors are running into possible performance concerns when a single Lua module is being called multiple times all at once. Collapsing all translation item templates for all Wiktionaries into a single Lua module might wind up creating an extremely limited bottleneck. -- Eiríkr Útlendi │ Tala við mig 21:52, 22 March 2013 (UTC).

- Well, I don't know the details of this specific load-balancing problem, but it seems resolvable to me. A simple solution would be to duplicate the code automatically, so you keep the central editable version, but the executed code is distributed. Now if there really is a load-balancing problem with Lua modules, there should be a serious investigation to resolve it. I just don't have a proper picture of the technical infrastructure to give an appropriate answer just like that, but I have no doubt this can be resolved. --Psychoslave (talk) 08:55, 23 March 2013 (UTC).


- @Lars, one difference in the treatment of translations is that the English Wiktionary, for instance, links straight through to the translated term entry pages. The full treatment is available there, but not right in the "Translations" table.

- @Nox, your rewrite quite concerns me, particularly this paragraph:

To explain this, let me first take the perspective of a (reading (opposite to contributing)) user, mother language English, strongly interested in French (cross WT view). If he looks for a French word representing house, not knowing maison, he could use English WT house, take the translation reference type 2 (see image 3), cross over to the French WT by clicking on that link and get full and best information concerning maison. I’m sure, the language information concerning maison he finds there is the best one he can find in ANY other WT of WT project. After having enriched his knowledge concerning maison, why should he add this word to English WT (if he is a contributing user)? The other way round (user mother language English interested in French) it works same way.

- You assume that this hypothetical English reader is also capable of fully understanding the French Wiktionary entry at wiktionary:fr:maison. This is a seriously flawed assumption. As Lars notes, each Wiktionary represents thousands of hours of work by host-language contributors, writing in the host language.

- I am also concerned about some of your operating assumptions about applicable data models. The only commonalities in entry structure and data that I have seen across all Wiktionaries are the presence of the lemma term itself, and possibly lists such as those for translations, derived terms, and descendant terms. It is not even safe to assume common parts of speech for term categorization, as not all host languages treat parts of speech in the same way. For instance, what English grammarians think of as an "adjective" roughly maps to at least three different parts of speech in Japanese (形容詞 [keiyōshi], 形容動詞 [keiyō dōshi], and 連体詞 [rentaishi]). What Japanese grammarians think of as a 語素 (goso) roughly maps to two different parts of speech in English ("prefix" or "suffix"). Meanwhile, it seems that the Russian Wiktionary forgoes such labeling entirely and instead uses running text to describe the morphology of each term. (NB: I'm not a Russian reader; this comes to me as second-hand information.)

- Since each Wiktionary describes each term using the host language, there is no guarantee at all that the labels used in the Russian Wiktionary match the labels used in the French Wiktionary match the labels used in the English Wiktionary match the labels used in the Japanese wiktionary... all for any single given term.

- I certainly wish you luck in your research. However, I think this problem is much more complicated, and much more intractable, than your description above suggests. -- Eiríkr Útlendi │ Tala við mig 23:59, 28 March 2013 (UTC)

- @Eirikr et al.: Since I have worked quite a lot in the Russian Wiktionary (Викисловарь), I can assure you that you can find that kind of labelling there too. The reason why you don't see it when you just look at a page like this is that you don't know where to look. I think this illustrates very well one of the problems if you want all Wiktionaries to function as one big Wiktionary (and if you want to be able to contribute to many Wiktionaries without starting from scratch every time): all the unnecessary differences. Here an initiative like this could make a difference.

- Lars Gardenius(diskurs) 09:14, 29 March 2013 (UTC)


Psychoslave's comments (and replies to them)

- Excellent work. Now to my mind the word entity should not have a single orthography, because that doesn't reflect reality: even if you restrict yourself to well-known and widely used spellings, there are words which have several accepted spellings. For example, in French you may write clef or clé to refer to a key. So orthography should be another entity, just like meaning, and one word may have one or more orthographies (for a given language). One orthography can correspond to one or more words. Moreover, an orthography should be categorizable, so you can say whether it's considered a correct orthography, a misspelled word, or a special case like the locution all your base are belong to us and the word l33t. Also, the proposal should be extended to include synonyms, hypernyms, and so on, as well as etymology. Etymology should have its own ERM part, I think, because we can surely establish a well-structured schema of how words shifted from one form to another (I'm not a specialist, but I know there is specific vocabulary for many supposed transformations, like an l shifting to r). --Psychoslave (talk) 10:41, 22 March 2013 (UTC).

- Also each spelling could be attached to examples, and examples could have 0 or m translations, as well as a well defined reference (url/document with isbn…). --Psychoslave (talk) 13:14, 22 March 2013 (UTC).

- Re: orthographies, different spellings often carry different connotations, sometimes different enough that they should be considered different entities in their own rights, even if the underlying concept referred to by the terms is the same thing. English thru and through are two different labels for one concept, but the labels themselves carry sufficiently different semantic information that dictionaries often treat these two different spellings as separate entries.

- Even with your French example, I see that clé has a secondary sense of "wrench, spanner", that seems to be missing from the clef entry. Assuming that this difference in meaning is valid and not just an accidental omission by Wiktionary editors, then these two spellings carry different semantic information, and deserve to be treated as different entries, at least for Wiktionary purposes.

- Japanese gets much more complicated due to the extremely visually rich nature of the written language. The hiragana spelling つく (tsuku) can mean "to arrive; to turn on; to stab", among other meanings. Meanwhile, the kanji spelling 着く (tsuku) is limited to "to arrive"; 付く (tsuku) is limited to "to turn on"; and 突く (tsuku) is limited to "to stab". (Simplified examples; all of these entries have additional senses.) Whether to use the more-specific kanji spellings is a matter of style and preference, not to mention clarity and disambiguation; which kanji spelling to use depends on semantic context. The hiragana spellings of many short verbs have similar one-to-many correlations to kanji spellings, where the kanji spellings are generally more specific than the hiragana spellings, and often the hiragana spellings are in common use right alongside the kanji spellings.

- The data model must ostensibly account for all of this variation. Separating the spelling from the concept, which I think is what @Psychoslave here is proposing, is probably necessary for this. Some commercial terminology management tools that I have used take the concept as the top level of the data structure. One concept may have multiple terms, and one term may point to multiple concepts. One serious potential shortfall of such software is clarity --

- how are concepts identified within the data model?

- how does one add a synonym (such as a new orthography) to a concept?

- when looking at a single term, how are different concepts identified for the user?

- is each individual sense of any given term (implemented now in Wiktionary as a numbered definition line) to be transformed into a "concept" in the data model?

- how does one manage different "concept" data objects, to do things such as find potential duplicates (possibly differing only by minor wording choices)?

- how does one manage "concept" data objects, for purposes of splitting a sense into multiple separate senses when more specific meanings are identified?

- etc., etc.

- This is an enormously complicated problem, even when limited to looking at just one language. Expanding the problem scope to include all languages is both insanely ambitious and deliciously challenging. Good luck to all! :) -- Eiríkr Útlendi │ Tala við mig 21:52, 22 March 2013 (UTC).
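The many-to-many relation between spellings and concepts discussed above can be sketched roughly as follows. This is only an illustration, not a proposed schema: the class names `Concept`, `Spelling`, and `Lexicon`, the `status` values, and the numeric concept ids are all hypothetical, and the sample data is the simplified つく example from this thread.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """One sense; a hypothetical stand-in for a numbered definition line."""
    concept_id: int
    gloss: str


@dataclass
class Spelling:
    """One written form in one language, linked to any number of concepts."""
    language: str
    form: str
    status: str = "standard"  # hypothetical labels: "standard", "variant", "misspelling"
    concept_ids: set = field(default_factory=set)


class Lexicon:
    """Toy store: one concept may have many spellings, and vice versa."""

    def __init__(self):
        self.concepts = {}
        self.spellings = {}

    def add_concept(self, concept_id, gloss):
        self.concepts[concept_id] = Concept(concept_id, gloss)

    def link(self, language, form, concept_id, status="standard"):
        # Create the spelling on first use, then attach the concept to it.
        key = (language, form)
        spelling = self.spellings.setdefault(key, Spelling(language, form, status))
        spelling.concept_ids.add(concept_id)

    def concepts_for(self, language, form):
        spelling = self.spellings.get((language, form))
        if spelling is None:
            return []
        return sorted(self.concepts[c].gloss for c in spelling.concept_ids)

    def spellings_for(self, concept_id):
        return {s.form for s in self.spellings.values() if concept_id in s.concept_ids}


lexicon = Lexicon()
lexicon.add_concept(1, "to arrive")
lexicon.add_concept(2, "to turn on")
lexicon.add_concept(3, "to stab")
for concept_id in (1, 2, 3):
    lexicon.link("ja", "つく", concept_id)  # the hiragana spelling covers all three senses
lexicon.link("ja", "着く", 1)
lexicon.link("ja", "付く", 2)
lexicon.link("ja", "突く", 3)
```

Even this toy version leaves the questions listed above open: the integer ids say nothing about how a human editor would recognise, merge, or split concepts.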

- OK, let me begin with the simplest point (from a French native speaker's point of view): clef and clé are exactly the same "word", orthography being the only difference. They have the same meaning, and you pronounce them in the same way. To understand why, you can begin with w:Rectifications orthographiques du français if you want to know more about it (equivalent articles are available in some other chapters). But to stay both on topic and on French specificities (or at least, linguistic phenomena which may not happen in all languages), there are words that you write in the same way but pronounce differently according to their meaning. See wikt:Catégorie:Homographes non homophones en français. I have no doubt each language has its own curiosities, so indeed, we are speaking here of a daunting task. Fortunately (and hopefully) this task can rely on a global community (or a global set of communities, if you prefer). Probably no single human could afford the time and experience needed to accomplish such a task, but I believe that together we can do it.

- As for how we can deal with identification and, more than that, which elements should form a key to a unique entry in our database, I would personally be interested in the OmegaWiki contributors' opinion, because they probably have interesting analysis to share, gained through their experience.

- Also, we surely have to gather information to make sure we can establish a model flexible enough to take account of the specificities of all languages and communities, but how do we decide we have gathered enough information to freeze a structure? Ideally, to my mind, we should come up with a solid, extensible basic structure. --Psychoslave (talk) 09:57, 23 March 2013 (UTC).

NoX: You (Psychoslave, Eirikr) are absolutely right. My ERM is only a sketch. Relevant entities and relationships are missing. In IT database projects it's a good idea to begin with a simple ERM. Its purpose is to initiate a discussion between IT experts and (in this case) language experts about the necessary and relevant things (entities) and their relationships to each other. Later in the database design process they are mapped, not at all 1:1, into specific (MS SQL, Oracle, DB2, wiki) databases and tables. In an IT project, your contribution, for example, would lead to (a discussion and) an extension of the ERM by adding entities (not seen by me, or left out in the discussion-provoking start-up process). A good ERM on language and language translation would reflect the long-lasting nature and the essence of all things (entities) and their relationships in this environment.

But our current problem is different. We have a multipurpose wiki database with shortcomings in the language area (WT project) and big advantages in other areas (e.g. Wikipedia). So my idea was to ask, looking at the current French WT (and knowing also the English, German and Italian WT), what its ERM could look like and what could be improved. I didn't write anything about HOW to do it. The change could be made in an evolutionary way (I'm not sure if this can work, because I'm not a wiki database expert), or it could be made in a revolutionary way. Simply put: 1. harmonize the mark-up; 2. export the current WT content (e.g. into an agreed XML structure); 3. reload it into a better-suited database (see the following proposals by others).
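To make step 2 a little more concrete, here is a minimal sketch of what exporting one entry into an XML structure might look like. No such structure has been agreed: the element and attribute names (`entry`, `lemma`, `senses`, `translations`, `host`, `lang`) and the function `entry_to_xml` are invented purely for illustration, and the sample gloss is made up.

```python
import xml.etree.ElementTree as ET


def entry_to_xml(host_language, lemma, lemma_language, senses, translations):
    """Serialize one entry to a hypothetical exchange format (not an agreed schema)."""
    entry = ET.Element("entry", {"host": host_language, "lang": lemma_language})
    ET.SubElement(entry, "lemma").text = lemma

    # Numbered senses, mirroring the numbered definition lines of a Wiktionary page.
    senses_el = ET.SubElement(entry, "senses")
    for number, gloss in enumerate(senses, start=1):
        ET.SubElement(senses_el, "sense", {"n": str(number)}).text = gloss

    # One element per (target language, term) pair from the translation table.
    translations_el = ET.SubElement(entry, "translations")
    for target_language, term in translations:
        ET.SubElement(translations_el, "translation", {"lang": target_language}).text = term

    return ET.tostring(entry, encoding="unicode")


xml_text = entry_to_xml(
    host_language="en",
    lemma="house",
    lemma_language="en",
    senses=["a building for living in"],
    translations=[("fr", "maison"), ("de", "Haus")],
)
```

The hard part is not the serialization but step 1: the fields can only be filled reliably once the mark-up of the source Wiktionaries has been harmonized enough to extract them.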

Lars Gardenius' comments (and replies to them)

@NoX?: Since I look upon all Wiktionaries as one big Wiktionary and also share your interest in cross-Wiktionary questions, I believe that your approach is basically praiseworthy; however, some of your suggestions above raise questions. Before I start criticizing the proposals too severely, I therefore want to ask you a question concerning the section "Avoid duplicates in different Wiktionaries". What exactly do you mean by that? To make a comparison: Do you want all Chinese users to throw away their French-Chinese dictionaries, all Swedes to throw away their French-Swedish dictionaries and so forth, and that they should all start using Larousse's French-French dictionary, to get the exact meaning of a French word? Is that the idea you have for Wiktionary, or have I completely misunderstood your vision? Lars Gardenius(diskurs) 13:21, 26 March 2013 (UTC)

- NoX: Hi Lars. I partly rewrote chap 2 concerning duplicates. I hope this answers your questions. If not, let me know. What remains unanswered is where (in which WT) to put the TransEx entity, if established. NoX (talk) 14:46, 28 March 2013 (UTC)

Thank You for the new and extended version of "Avoid duplicates in different Wiktionaries". However I am still very critical. I would like to stress again that I find Your initiative and approach praiseworthy, and I hope that You will not find what I write below as an attack on Your proposal as a whole. [1] However I believe that You have overlooked some very basic facts about languages in that specific section.

I have worked as a professional translator (from Chinese) during a short period of my life. I, as many others, recommend that you start using monolingual dictionaries as soon as possible, like Oxford Dictionary for English, Larousse for French or 新华字典 for Chinese. The reason is the one you give above, the best explanation you can find is probably in this kind of dictionary. Since it is very costly to produce a (paper) dictionary you have to limit the space given to explanations in bilingual dictionaries. [2]

However, this recommendation is easier to give than to follow. To be able to handle e.g. a monolingual Chinese dictionary, you have to study Chinese at least a couple of years. An effort that perhaps not everybody is ready to make. I don't think it is reasonable to believe that any average user can understand an explanation written in Chinese, however good it is.

You could of course propose a translation of the Chinese articles (on Chinese words) to all other languages but then you are back in the situation you wanted to avoid, and how many can translate from Chinese to Finnish, Romanian, Quechua etc., and keep them updated?

There is also another major reason why this is not a good approach.

Every monolingual dictionary is written in a social and linguistic context. A Chinese monolingual dictionary is written in a Chinese social and linguistic context, that you have to know to really understand the explanations. All languages also have different ways to solve different grammatical and linguistic problems. So what perhaps is not at all mentioned in a Chinese monolingual dictionary, because it is considered trivial to everybody having Chinese as their mother tongue, is perhaps very difficult to understand, and necessary to treat in a dictionary, if you e.g. just speak Portuguese.

These are some of the reasons why I think every serious translator uses all kinds of monolingual and bilingual (both ways) dictionaries when translating. If you are a Swedish translator you simply need a dictionary explaining the word from e.g. a Chinese point of view as well as from a Swedish point of view.

So both the professional translator as well as the average layman needs both monolingual and bilingual dictionaries, now and in the future.

Then it should also be said that it is obvious that the biggest problem in Wiktionary lies with these bilingual parts of the dictionary. If you for instance want to create a Chinese-Romanian Wiki, you will need at least ten people working on it for several years before it reaches a level of quality and usability that is acceptable. That number of people is obviously often lacking. But throwing these bilingual parts out doesn't solve the problem, it just hides it.

This problem is, I believe, also partly linked to the translation part of the articles. The space devoted to translations is very small (in all Wiktionaries). I at one time made a comparison with an ordinary (paper) dictionary. While it devoted 40 lines to translating a German word (into a certain language), Wiktionary devoted half a line, which is about as much as you can find in an ordinary cheap pocket dictionary!

I believe that Wiktionary has to find a whole new way to present translations and to link them to the articles.

Lars Gardenius(diskurs) 18:13, 28 March 2013 (UTC)

- ↑ I don’t have any problem with rational pros and cons. I have problems with some emotional ones (not yet found here). NoX

- ↑ I agree that the normal way of learning a language starts with a bilingual dictionary and ends with using a monolingual dictionary in the target language. The latter is particularly difficult in cases where the writing systems differ and cultural differences are big. But in my opinion this is no strong argument against my proposal to remove the pink (non WT host language) words. Though I'm an oldie, I'm still willing to learn. But before rewriting parts of my proposal, if I should, I want to listen to your voices. NoX

- I sometimes use Wiktionary in my poetry writing process, so I'm fully aware that you can't expect a few short definitions to give you all the keys to the overall meaning of a sentence. Meaning is context dependent, at least that's how I think about it currently. OK, but even if no dictionary will ever be able to give all the keys to understanding a sentence in its idiomatic context, I think that giving some hints is better than nothing. Wiktionary has no deadline, so to my mind time is not a real problem. Gathering more contributors is in my opinion a far more important issue. So we should aim at

- making editing as easy as possible for new contributors

- having a cross-chapter way to structure articles in a flexible manner which allows:

- feedback across all chapters: if I add a Shakespeare quotation as a usage example in the English version, it should be propagated to all chapters, with text requesting users to translate it into the current chapter's language if they know the original one. If relevant, textual explanation may for example be added in ref anchors.

- adaptation to locale specificities, let the chapter community decide how to integrate elements in their workflow. --13:01, 5 April 2013 (UTC)

-sche's comments (and replies to them)

- (-sche here:) I don't have time right now to respond to everything that has been said, but: it's true that many (e.g.) English Wikt entries for (e.g.) French words are currently smaller than French dictionaries' entries for those words, because Wiktionary is incomplete. However, because Wiktionary is not paper, it has the ability to cover all words in all languages in greater detail than any paper dictionary. wikt:de:life (as a result of my work) and wikt:de:be, for example, provide German-language coverage of the English words life and be that is as expansive and detailed as a monolingual English dictionary's. wikt:en:-ak provides English-language coverage of -ak more detailed than any Abenaki-language dictionary's, not that there are (m)any Abenaki-language dictionaries! That's what each Wiktionary can do at its best, and it's what would be lost or made more difficult by proposals to centralise foreign-language content either on Wikidata or on OmegaWiki (cf. my comments on the proposal to adopt OmegaWiki) -sche (talk) 23:34, 28 March 2013 (UTC).

- Currently, in my opinion, (very) rare high-quality pink examples like the cited German WT life (1st bilingual part) seem to be a strong argument for my proposal. I stay with your example and have a look at English WT Leben (2nd bilingual part). Why not merge them into one TransEx? 1st part meaning 1. Life, the state between birth and death is the same as 2nd part meaning [2] Leben: die Zeit, in der jemand lebt; persönliche Laufbahn, mit der Geburt beginnend und mit dem Tod endend. And so on with the other meanings. Why not take one of the two as the best representation of the word's meaning, translate it into the other language, and put both on top of the corresponding translation examples, as proposed (by me)? (By the way, in this special case the blue German WT Leben has fewer meanings than the pink German WT life (colors refer to image 1 above). The question arises: why did the contributing author not improve the meanings of the blue one, or take them from the blue one and put them in the pink one?) I think we are, in this respect, not far away from each other. I don't propose to throw away the content of the pink words. The idea is to transfer the bilingual representation of the usage of a word in a defined bilingual meaning/sense context (perhaps also other non-redundant language-relationship information that I have not yet identified, but that seems to be addressed) into the TransEx entity/database/table/WT. Link type 1 (see Image 3: Translation references above) would address these TransEx(es). German to English translation link type 1 of Leben would reference the identical TransEx Leben*life as English to German translation link type 1 of life. Thinking of an evolutionary expansion of the WT project: to which WT should Leben*life, and referential entity ("bridge") elements in general, belong in this case? Not to the German, not to the English, but to a common new WT, containing the elements of the relationship entity TransEx.
The integration should occur in a way that gives users the feeling of working with one WT alone: currently with the English, French, etc. WT, in the future with a single big pot. I think it's not a good idea to split up parts of current WTs and transfer them elsewhere. Political parties doing that generally lose. NoX
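The idea that both translation directions reference one and the same TransEx record (Leben*life) can be illustrated with an order-independent key. This is a rough sketch only; `transex_key`, `TransExStore`, and the record layout are hypothetical names, and the example sentences are made up.

```python
def transex_key(lang_a, word_a, lang_b, word_b):
    """Order-independent key: (de, Leben)*(en, life) equals (en, life)*(de, Leben)."""
    return frozenset({(lang_a, word_a), (lang_b, word_b)})


class TransExStore:
    """Toy shared store for bilingual translation examples."""

    def __init__(self):
        self._records = {}

    def add_example(self, lang_a, word_a, lang_b, word_b, sense, example_a, example_b):
        # Both directions of the word pair land in the same bucket.
        key = transex_key(lang_a, word_a, lang_b, word_b)
        self._records.setdefault(key, []).append(
            {"sense": sense, "examples": {lang_a: example_a, lang_b: example_b}}
        )

    def lookup(self, lang_a, word_a, lang_b, word_b):
        return self._records.get(transex_key(lang_a, word_a, lang_b, word_b), [])


store = TransExStore()
store.add_example(
    "de", "Leben", "en", "life",
    sense="the state between birth and death",
    example_a="Das Leben ist schön.",
    example_b="Life is beautiful.",
)

from_german = store.lookup("de", "Leben", "en", "life")
from_english = store.lookup("en", "life", "de", "Leben")
```

Here `from_german` and `from_english` are the same list, which is the "one TransEx for both link directions" property. The sketch says nothing about the language in which the `sense` label itself should be written.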

- Re "I think it's not a good idea to split up parts of current WTs and transfer them elsewhere": yet that is what this proposal does; it either splits foreign-language entries out of all the Wiktionaries, or duplicates content.

- Re "Why not merge them into one TransEx?": Many of the comments I made about OmegaWiki—which already exists as an 'all-in-one' Wiktionary that translates and transcludes 'consolidated' definitions into multiple languages—can be repeated here:

- Firstly, words which are denotatively similar enough to translate each other are rarely connotatively synonymous. It is linguistically unsound to presume that terms from different languages have exactly the same nuanced definitions. Freund and друг, for example, denote a closer companion than friend (Freund also denotes boyfriend); wikt:de:friend-vs-wikt:de:Freund and wikt:en:Freund-vs-wikt:en:friend should and can note this, even if (due to incompleteness) they do not note it yet. How would a consolidated 'TransEx' address it?

- Secondly, OmegaWiki already tries to do something similar to what is proposed here... and it fails. New contributors create dozens of translation-table entries and new entries on en.Wikt every day; in any given week, en.Wikt has 40+ regularly active users. Other Wiktionaries, laid out in their own languages, also have many active users. OmegaWiki has 10 regularly active users. I doubt this is without good reason: OmegaWiki's lingua franca is English, and its translation tables are based in English terms (it assigns translations to English senses: for translations of the German laufen, I am taken to DefinedMeaning:run (6323)). This makes it hard for people who do not speak English well to contribute translations or anything else, or to participate in discussions: such users are best served by Wiktionaries in their own languages. WikiData, with English as its lingua franca, has the same handicap as OmegaWiki, and I feel that an attempt to create a second 'consolidated' Wiktionary on WikiData is likely to fail to flourish just as the first consolidated Wiktionary (OmegaWiki) failed to flourish, and just like OmegaWiki, a WikiData 'TransEx project' will then be just another competing 'standard', its entries sure to fall out of sync with the other Wiktionaries. :/ -sche (talk) 07:15, 1 April 2013 (UTC)

- At the first glance, this seemed to me to be a strong argument. To get a feeling how the Germans would treat a problem like this, I added a question to the discussion page of wikt:de:Freund.

My interpretation of the (one opinion only) response is: Lack of quality. 1) Missing meaning at wikt:de:Freund. 2) Missing word wikt:de:Freundchen. 3) Wrong translation references at wikt:fr:ami (should refer to wikt:de:Leute) and wikt:en:friend (should refer to wikt:de:Freundchen).

Not only since Heisenberg we know that we are living in a world of uncertainty and we have to live with it. But we can reduce it. My not yet fully presented idea concerning TransEx is, that a presentation construct like

#* {{RQ:Schuster Hepaticae V|vii}} , see word sound

is needed, but without containing its data inline behind the mark-up. The editing process, using a pop-up window (data presentation differs from data editing and data storage), puts the data into a separate storage, unseen by readers and editors. The presentation process takes the data from there.

And this is completely different from a two-tier approach like that of OmegaWiki (which I don't appreciate). The users need to have the feeling of acting in one single environment. This can be achieved by separating data presentation from data storage. This is currently not the case. Its description needs more words than those currently contained in my proposal.

By the way, thank you for removing my {{sic}} provoking bugs. Hope you get a feeling of the idea behind my poor words. NoX (talk) 19:43, 1 April 2013 (UTC)


- Here is what your interesting comment inspires in me: we should not pretend to provide word/locution "translations", but try to list semantic proximity. For example, as far as I can tell, the French je and the English I are semantically equivalent. But I have no idea whether the Japanese わたし (watashi) is (I suspect it isn't, even though my knowledge of Japanese is close to nil). Of course, for Japanese people, having details on the semantics of watashi may not be as interesting as it can be for non-native speakers. We lack consistency:

- We should provide a way to propagate this kind of information in a more systematic way, with automation when possible. Typically, a word's etymology is something you can represent well in a form that a computer can manipulate. --Psychoslave (talk) 13:48, 5 April 2013 (UTC)

- Please note that the assertion of -sche that OmegaWiki's translation tables are based in English terms is wrong. In OmegaWiki we also have terms that have no English equivalent (e.g. safraner). The fact that the German "laufen", takes you to "DefinedMeaning:run (6323)" should not be interpreted as being English-based. In fact, it should take you to the "DefinedMeaning:6323", but it was decided to add a translation in the DefinedMeaning name to make the recentchanges page more readable. As it causes some confusion, it will be changed to number-only in the future, when the recentchanges page will be ajaxized. --Kip (talk) 13:40, 8 April 2013 (UTC)

Purodha's comments (and replies to them)

I am not at all commenting presentational matters here. I am strictly concerned with structure only.

The approach of OmegaWiki is to have a definition of a concept per expression, or per "word". Definitions are assumed to be expressed in all languages, yet to describe something which is not bound to a single language.

This has a technical disadvantage: it prohibits the mass import of most bilingual word lists and dictionaries, because they lack the required definitions. Remember, a definition must exist in OmegaWiki before another word or translation can be connected to it.

Is the idea of having (mostly) language-independent definitions thus a dead-end? I believe not so. For a really huge class of words such definitions exist, can easily be found, and they serve their purposes. But there are notable exceptions.

In more detail:

- Typical names, proper names, geographical designations, technical terms, scientific terms, and many more of these kinds are identical or almost identical between many languages, and doubtlessly have shareable definitions. They are, by the way, quite often additionally identifiable by pictures, drawings, maps, etc. They comprise something like 90% of the use cases of a dictionary of more than ten thousand or twenty thousand "words". Thus, it would be unwise, and a waste, if we were not willing to use this easily available opportunity.

However:

- The typical hundred most used words of a language (each language has its own set, of course) most often do not have good shareable definitions, and very often no useful definitions at all. Setting very common descriptive words aside, such as mother, rain, or one, you will find words that are best described by their usage rather than by their meanings. E.g. the English words many, much, and often share some aspects of their meanings and may even have a common translation in another language, but you cannot exchange them for one another in English sentences. Their use cases do not overlap. Somewhat simplified, you use many for countables, much for uncountables, and often for repetitions, which all exclude one another. Descriptions of the proper name type can be entirely on the object language level. The preceding "descriptions" of many, much, and often are on the metalanguage level (which uses language to speak about the language itself rather than about objects alone). Transferring them into another language may prove cumbersome when the target language does not have concepts like countables, uncountables, or repetitions. -- Purodha Blissenbach (talk) 05:49, 21 March 2014 (UTC)

- The Toki Pona word li has no direct translation in any language that I know of. It is a purely structural word. It separates verbs from objects. E.g. Purodha toki toki. means something like Purodha talks and talks. while Purodha toki li toki. tells us Purodha speaks a language. You see how li introduces the object part of the second sentence. It does not have a "meaning" on its own. It is there for a structural reason. It only indirectly influences the meanings of entire sentences. There are structural words in many languages. Some of them have close or remote look-alikes in some other languages. Generally, they are language specific, have no global translations, have little or no meaning on their own, and need to be explained using metalanguage. OmegaWiki's approach of having a common "definition" has drawbacks when it comes to purely structural words. The good news is that there are not many of them in any language, most often maybe a dozen or two. The bad news is that they are usually among the most frequently used ones. A vocabulary without them would be very incomplete.

(to be continued) -- Purodha Blissenbach (talk) 13:22, 21 March 2014 (UTC)

Thinking out of the classical online Wiktionary format and reading usage

Our goal is not only to build dictionaries that are as complete as possible; we also want the result to be as useful as possible, which means it should be easy to integrate them elsewhere and, more generally, to use them in innovative ways.

In this part, contributors are encouraged to describe what kinds of usage could be made easier if taken into account at the design step rather than as an afterthought.

Generating standard dictionary output.

Currently, the dumps which are generated are not directly usable in offline applications, for example GNOME Dictionary. As far as I know, we don't provide a standard way to consult it, such as through the w:DICT protocol. It would also be convenient to be able to download Wiktionary for e-ink devices. --Psychoslave (talk) 13:40, 22 March 2013 (UTC).
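For readers unfamiliar with DICT (RFC 2229), here is a small offline sketch of the client side of one exchange: building a `DEFINE` command and reading the definitions out of a reply. It is a simplified illustration, not a full client; it does not open any network connection, the helper names are made up, and the database name `wikt-fr` in the sample reply is purely hypothetical (no Wiktionary DICT service is assumed to exist).

```python
def build_define_command(word, database="*"):
    """Build a DICT DEFINE command; "*" asks the server to search all databases."""
    quoted = '"' + word.replace('"', '\\"') + '"'
    return f"DEFINE {database} {quoted}\r\n"


def parse_define_response(lines):
    """Collect definition texts from reply lines (already stripped of CRLF).

    Simplified: only the status codes 151 (definition follows), 250 (ok)
    and 552 (no match) are handled.
    """
    definitions = []
    current = None  # lines of the definition being read, or None between definitions
    for line in lines:
        if current is not None:
            if line == ".":
                # A lone dot ends the text of one definition.
                definitions.append("\n".join(current))
                current = None
            else:
                # Undo dot-stuffing: text lines starting with "." are sent doubled.
                current.append(line[1:] if line.startswith("..") else line)
        elif line.startswith("151"):
            current = []
        elif line.startswith("552"):
            return []
        elif line.startswith("250"):
            break
    return definitions


# A made-up server reply for illustration only.
sample_reply = [
    "150 1 definitions retrieved",
    '151 "maison" wikt-fr "hypothetical French Wiktionary database"',
    "maison",
    "  house",
    ".",
    "250 ok",
]
command = build_define_command("maison")
definitions = parse_define_response(sample_reply)
```

A generated dump would still have to be converted into whatever database format a given DICT server reads, which is the missing piece described above.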

Voice recognition.

One way one might want to access an entry in Wiktionary is to pronounce the word/locution. As smartphones become more common, people acquire a device which is able to take voice input. On the other hand, sometimes people will meet a word they can't spell. For example, two people from distant native cultures become friends, and they like to share their respective knowledge through their talk. So sometimes one will say a specific word of their native language, but the other person won't understand it and in fact won't even be able to pronounce it, because it contains sounds s/he doesn't know (or maybe it's a tone language while s/he doesn't know any tone language). So they take a smartphone, run the wikipronounce app, and voilà: the original spelling, an IPA transcription (possibly a roman transcription if relevant), and a definition in the user's native language. --08:45, 23 March 2013 (UTC).

- This is not as easy as one might expect it to be. We have three kinds of obstacles.

- There is no technical way to get from sound to IPA in general. While some pieces of sound recordings may be technically identifiable (mostly sonorants, but by far not all of them), other segments are not generally identifiable. Most notably, plosives are silent for a large part of their duration. There is no way to distinguish them based on their main parts (no frequencies, amplitude zero for each of them), but you can try from their coarticulation with neighboring segments. Since that is extremely language dependent, we are currently not capable of doing that in a language-independent way, and we cannot do it for the majority of the major languages either, due to lack of specific research on them, let alone so-called smaller languages.

- Current state-of-the-art voice recognition systems are either:

- restricted to a very limited predefined vocabulary. Good ones produce speaker-independent hit rates in the 60% to 75% range.

- less restricted vocabulary-wise, e.g. accepting all words recognized by a spellchecker of a certain language, but then they need to be trained to a specific speaker for a significant duration before they become usable. Their recognition rate for non-dictionary words is, politely said, limited.

- IPA use is both language and tradition dependent. If you have a single recorded utterance and give it to, say, a dozen people from different parts of the earth and from different traditions of using IPA, you will likely get a dozen mostly incompatible or contradictory transcripts. Most usually, IPA transcripts in dictionaries follow a single tradition and are made by native academics. Unless you know both the tradition and the customary language-specific deviations from the formal IPA standard, you cannot correctly pronounce IPA transcripts found in foreign language dictionaries.

- Thus I am sorry to say, your vision is - at the moment at least - limited to very few use cases and at best to words already existing in a Wiktionary. I wish it were otherwise, but it is certainly not in sight. -- Purodha Blissenbach (talk) 04:31, 21 March 2014 (UTC)

Speech synthesis.

Along with IPA (and X-SAMPA), Wiktionary also offers pronunciation samples. Currently these sounds need to be recorded and uploaded by contributors, one by one. This solution is better than nothing, and should probably even be kept to give real-world examples of word pronunciation. That said, it has many disadvantages. First of all, not all words have such a sample. Some nonetheless have IPA, but most people probably won't be able to read it easily, given that, as far as I know, no primary school in the world teaches it. So it would be very helpful for many readers to have speech synthesis using the IPA data (when present): not only would people have at least a minimal idea of how to pronounce the word, but they would also be able to learn IPA through habituation. Another advantage would be a unified pronunciation voice across all words (possibly customizable in preferences), whereas recordings vary and reflect the diversity of contributors. This last sentence should not be taken as a criticism of diversity: as previously said, recordings should be considered of great value because they provide real-world examples, and should stay as complementary data to speech synthesis. --Psychoslave (talk) 08:11, 25 March 2013 (UTC).

- For reasons outlined in the section on Voice recognition, the chances of getting correct or usable speech synthesis from IPA transcripts are very limited. Odds are that a majority of cases will simply be incorrect. Do not take this as an argument not to try it, but you must be aware that each language needs its own speech synthesis algorithm for the entire idea to function. --Purodha Blissenbach (talk) 04:46, 21 March 2014 (UTC)
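To make the discussion a bit more concrete, here is a small sketch of one preprocessing step a synthesis pipeline might need: transliterating an IPA transcription into X-SAMPA, the ASCII notation already mentioned above. The function name is hypothetical, and the table covers only a handful of well-known correspondences. As the caveat above stresses, this says nothing about how a given language actually realizes those symbols, so it is at best an input to a per-language synthesizer.

```python
# Minimal sketch: transliterate a few IPA symbols to X-SAMPA.
# The table is deliberately partial; unknown symbols are reported, not guessed.

IPA_TO_XSAMPA = {
    "ə": "@", "ʃ": "S", "ʒ": "Z", "θ": "T", "ð": "D", "ŋ": "N",
    "ɛ": "E", "ɔ": "O", "æ": "{", "ɑ": "A", "ɪ": "I", "ʊ": "U",
    "ɲ": "J", "ʁ": "R", "ɡ": "g",
    "ˈ": '"',  # primary stress
    "ː": ":",  # length mark
}

def ipa_to_xsampa(ipa):
    """Return (xsampa_string, sorted list of unmapped non-ASCII symbols)."""
    out = []
    unknown = set()
    for ch in ipa:
        if ch in IPA_TO_XSAMPA:
            out.append(IPA_TO_XSAMPA[ch])
        else:
            # Plain ASCII letters (p, t, k, a, ...) are kept as they are.
            if ord(ch) > 127:
                unknown.add(ch)
            out.append(ch)
    return "".join(out), sorted(unknown)

if __name__ == "__main__":
    print(ipa_to_xsampa("ˈθɪŋ"))
```

Reporting the unmapped symbols rather than dropping them keeps the limits of the table visible, which matters given how much transcription traditions differ between languages.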

Helping to avoid or create neologisms.

The primary purpose of language is to communicate and share ideas. Often people know no specific word to express what they are thinking and want to communicate. Usually, one may use a sentence whose words express, more or less accurately, what they think. But when a new concept is central to a thought, one may decide to create a new word to express it. Different strategies may be used to coin such a word, each having pros and cons:

- Use etymological knowledge of the given language to build a word which doesn't add new roots, and which will be both short and hopefully understandable to someone having a good knowledge of this language. The advantage here is that it extends the language in a way that is more or less familiar to speakers, possibly with a word that they will understand even if they have never heard it before. For many words of this kind, no advanced knowledge is really needed, as many (all?) languages have affixes which make it possible to coin such ad hoc words. But sometimes such a construction can require a high level of linguistic skill, especially in specific fields such as science, where people may be competent in their specialty but not in linguistics.

- Make an acronym. The clear advantage here is that you need no linguistic competence to coin a word. The evident con is that the coined word is completely opaque, and native speakers won't be able to use their lexical knowledge to deduce its meaning. An acronym is not necessarily used because no specific expression exists; it's often a matter of brevity. Thus DNA, which stands for deoxyribonucleic acid, trades ten syllables for three.

- Use a loanword. The advantages are that the word already exists, possibly needing only some pronunciation tuning, and that it probably has a known, meaningful etymology. The con is that the word may be opaque to native speakers of the target language, so they can't establish semantic relations based on the network of words and meanings they have already acquired.

Here Wiktionaries should help by:

- first, avoiding unwanted[1] redundant neologisms, by making it easy to find an existing expression for a given concept,

- making it easier to create neologisms that are as relevant as possible given the existing lexicon of the target language.

Add a user-friendly method for adding new pronunciations

Using for example Recorderjs (see demo).

Create a tool to help users batch-move their own pronunciations from Forvo to Wikimedia Commons

Forvo is a website that allows users to record pronunciations of words in many different languages. Unfortunately, the recorded sounds are licensed under the Creative Commons Attribution-NonCommercial-ShareAlike license, which Commons does not accept, so only the authors themselves can import their existing Forvo pronunciations to Commons.

Notes and references

- ↑ The goal is not to prevent people from creating new words or languages if they want to, just to let them know whether an existing expression is available in case they would prefer to avoid coining one.

See also

Certain Wiktionary bugs:

- 36881-Wiktionary needs usable API (GSOC proj desc)

- 46610-Pronunciation recording tool (GSOC prop draft)

- 22249-Sister projects search function isn't displayed on some search result pages.

- 11415-__EDITPARENTSECTION__

- Wikidata:Wiktionary

- OmegaWiki

- Beyond categories

- WikiLang aims to be a multilingual, interwiki bridge between the development processes of Wikipedia, Wiktionary, Wikiversity and Wikibooks, by providing a centralized source of documentation about all languages—both lexical and grammatical—that could be expanded on in many useful ways.