Wikimania05/Paper-CM1

Wikipedia – Anonymous Users as Good Users

- Author(s): Cathy Ma

- License: TBD

- Slides: Cma presentation.pdf

Abstract

Wikipedia has increasingly become an important portal for accumulating and disseminating knowledge. Many of its contributors (aka Wikipedians), however, are like the batmen of this project: Wikipedia records traces of their visits, yet they themselves remain largely invisible. To help us understand the Wikipedians systematically, this paper serves two purposes: (1) to capture a random sample of 100 Wikipedians' user pages, and (2) to create a scale that systematically measures the extent to which Wikipedians reveal their identities. By systematically examining how they reveal their identities on their user pages, we hope to explore whether the number of edits they make actually correlates with the extent to which they expose their identity. So far the findings of this paper suggest that this is not the case, i.e. users who reveal more of who they are do not necessarily edit more.

Paper

The Wiki-Batmen – who are the Wikipedians?

Wikipedia, as an open online project, aims to provide a platform for the collaborative building of an information archive that strives to become ‘the sum of all human knowledge’. Its Neutral Point of View policy helps to create a platform that offers equal opportunity for participation. Wikipedia's structure and design also serve this aim by setting a relatively low entry barrier for participation compared with other online projects: users do not need to log in to visit the site, or even to edit its contents. New edits become visible on the web as soon as users save their changes, and each edit is subject to the peer review sustained by Wikipedia's volunteers. In this respect, Wikipedia has far lower entry barriers than other online projects such as SourceForge and Slashdot, which both require a user login and a certain level of computer knowledge (check Reignhold). On the other hand, because this setup minimizes the information users need to supply, there are few, if any, statistics on the nature and identity of its users. In this research I therefore look for an empirical way to analyze users in terms of their level of disclosure and to see how that correlates with their patterns of editing.

Wikipedia – quantifying the articles

My particular interest lies in unraveling the organizational structure and related psychological phenomena of Wikipedia, and this paper focuses specifically on an empirical investigation of how the level of identity disclosure relates to patterns of editing. In this respect, the paper by Viégas et al. offers some insight into how statistics can be used to examine patterns of editing by different users. They designed a graphical-statistical method called history flow visualization, which visualizes edit patterns on Wikipedia by systematically mapping out the patterns of collaboration on the wiki (Viégas, Wattenberg, & Dave, 2004). By plotting the quantity of edits by different users as histogram-like bars, with colors indicating each user's share of the changes over time, their research shed light on key questions about Wikipedia in the areas of vandalism repair, the speed and efficiency of peer review, conflict resolution, and authorship.
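To make the idea behind history flow more concrete, here is a minimal Python sketch of the kind of data such a visualization draws: for each revision of a page, how much of the surviving text each author originally wrote. It is not Viégas et al.'s implementation. The `(author, text)` revision format, the word-level alignment with `difflib.SequenceMatcher`, and the name `author_shares` are assumptions made for illustration.

```python
from collections import Counter
from difflib import SequenceMatcher


def author_shares(revisions):
    """Return, for each (author, text) revision, a Counter of surviving words per original author.

    Simplified attribution (an assumption, not the published method): each
    revision is aligned word-by-word with the one before it; matched words
    keep their earlier author, and unmatched words are credited to the
    author of the current revision.
    """
    prev_tokens, prev_owners = [], []
    shares = []
    for author, text in revisions:
        tokens = text.split()
        owners = [author] * len(tokens)  # new text defaults to this revision's author
        matcher = SequenceMatcher(a=prev_tokens, b=tokens, autojunk=False)
        for block in matcher.get_matching_blocks():
            for k in range(block.size):
                owners[block.b + k] = prev_owners[block.a + k]
        shares.append(Counter(owners))
        prev_tokens, prev_owners = tokens, owners
    return shares


# Hypothetical page history: an addition, a mass deletion, then a restore.
history = [
    ("Alice", "the cat sat"),
    ("Bob", "the cat sat on the mat"),
    ("Vandal", ""),
    ("Carol", "the cat sat on the mat"),
]
for (author, _), share in zip(history, author_shares(history)):
    print(author, dict(share))
```

Each `Counter` corresponds to one bar in a history flow chart, split into colored segments by author. Because this sketch compares only adjacent revisions, text restored after the mass deletion is credited to Carol, who restored it; a fuller approach would match against earlier versions as well.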

Across the 70 Wikipedia page histories they sampled with history flow visualization, they found that a mass deletion (removal of all of a page's content) was repaired in 2.8 minutes on average. In other words, other users typically revert a mass-deleted article within about 2.8 minutes, and within only 1.7 minutes when obscene words are involved.
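Figures like these are summary statistics over many individual repair times. A minimal sketch, using hypothetical timestamps rather than Viégas et al.'s data, shows how such a figure could be computed and why it matters whether the mean or the median is reported: a single slow repair can pull the mean far above the typical case.

```python
from datetime import datetime
from statistics import mean, median


def minutes_to_revert(events):
    """Minutes between each mass deletion and the edit that reverted it."""
    return [(reverted - deleted).total_seconds() / 60 for deleted, reverted in events]


# Hypothetical (deleted_at, reverted_at) pairs, not data from the study.
events = [
    (datetime(2004, 5, 1, 12, 0), datetime(2004, 5, 1, 12, 1)),
    (datetime(2004, 5, 2, 9, 30), datetime(2004, 5, 2, 9, 32)),
    (datetime(2004, 5, 3, 18, 0), datetime(2004, 5, 3, 19, 0)),
]
gaps = minutes_to_revert(events)
print(gaps, mean(gaps), median(gaps))
```

Here two quick repairs and one hour-long one give a mean of 21 minutes but a median of 2 minutes.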

Spotting and undoing vandalism this quickly is impressive, given that it is done entirely by volunteers. Still, when it comes to empirically examining the credibility or effectiveness of Wikipedia, the speed of reverting vandalism is only one of several possible parameters for checking quality. Other research has also sought to measure the quality of edits on Wikipedia. For example, Lih (2004) proposed two constructs for assessing the reputation of Wikipedia articles: rigor and diversity. Rigor is the total number of edits to an article, whereas diversity is the total number of unique users who edited it. Lih adopted these two constructs as simple yet distinguishing measures for articles. Because the content of Wikipedia articles is cumulative (articles generally evolve as the number of edits increases), numerical indicators like rigor and diversity are useful for tracking the extent of their changes. Lih therefore used rigor and diversity to compare the impact of press reports on articles within a given time frame, where they proved especially useful for detecting changes over time.
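Lih's two metrics are simple counts over an article's edit history. The sketch below assumes the history is available as `(editor, edit_date)` pairs; the function name and the optional date window (useful for comparing periods, as in the press-report comparison) are illustrative choices, not taken from Lih's paper.

```python
from datetime import date


def rigor_and_diversity(history, start=None, end=None):
    """Return (rigor, diversity) for one article's edit history.

    history: iterable of (editor, edit_date) pairs.
    rigor = number of edits; diversity = number of distinct editors.
    If given, only edits with start <= edit_date < end are counted.
    """
    edits = [
        (editor, day)
        for editor, day in history
        if (start is None or day >= start) and (end is None or day < end)
    ]
    return len(edits), len({editor for editor, _ in edits})


# Hypothetical history for one article.
history = [
    ("Alice", date(2004, 1, 5)),
    ("Bob", date(2004, 1, 9)),
    ("Alice", date(2004, 2, 1)),
    ("203.0.113.7", date(2004, 2, 3)),
]
print(rigor_and_diversity(history))                          # whole history
print(rigor_and_diversity(history, start=date(2004, 2, 1)))  # from 1 Feb 2004 on
```

Over the whole history Alice's two edits raise rigor but not diversity, giving (4, 3); restricted to February the window yields (2, 2).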

Existing statistics and research thus tend to focus on dissecting the quality of Wikipedia's articles, and there are concise, representative tools and metrics that help us understand the epistemological side of Wikipedia. For example, the history flow visualization tool maps the quality and distribution of edits visually, whereas rigor and diversity yield hard data on how articles change over time. So far, however, little has been done to understand the users who create the articles and who are the essential building blocks of this online project. The aim of this research is therefore (1) to develop a tool that measures users' levels of disclosure on Wikipedia based on the information they provide, and (2) to run pilot statistical tests of how different levels of disclosure correlate with the number of edits.

Defining anonymity – spectrum of identity disclosure

Those familiar with the structure of Wikipedia will know that there are basically four types of users:

- Free riders: users who only browse the site and do not edit any of its content. They are excluded from this research because they do not contribute.

- IP users: users who do not create a user account and thus leave their IP address as the trace of their edits (e.g. User:207.142.131.236).

- Cloaked users: users who create an account but leave no information on their user pages.

- Disclosed users: users who not only create an account but also provide information on their user pages.

A screen capture of a Wikipedia history page shows the different types of users in its third column. Edits attributed only to a string of numbers (e.g. 196.216.3.5) were made by IP users. Edits followed by a user name in red were made by users who have registered but have not put anything on their user pages (i.e. cloaked users). Finally, edits followed by a user name in blue were made by users who have created some content on their user pages (i.e. disclosed users).
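The red/blue distinction on a history page amounts to a simple decision rule. A minimal sketch, assuming we already have the editor names from a history page and the set of registered names whose user pages have content (how those are fetched is left out); `classify_editor` and the name `ExampleNewcomer` are hypothetical.

```python
import ipaddress


def classify_editor(name, names_with_user_page):
    """Classify an editor listed in a page history into the user types above.

    names_with_user_page: set of registered user names whose user page has
    content (shown as a blue link); other registered names are red links.
    Free riders never appear in a history, so they are not handled here.
    """
    try:
        ipaddress.ip_address(name)
        return "IP user"
    except ValueError:
        pass  # not an IP address, so the edit came from a registered account
    if name in names_with_user_page:
        return "disclosed user"
    return "cloaked user"


blue_links = {"Adam Carr"}
for editor in ["196.216.3.5", "Adam Carr", "ExampleNewcomer"]:
    print(editor, "->", classify_editor(editor, blue_links))
```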

The focus of this research is on devising a tool to measure the levels of disclosure of the fourth kind of user, i.e. those who have registered an account and provided information on their user page. Beyond that, if we were to map out the relationship between levels of disclosure and the quality of edits, what would it look like? (elaborate, assumption of good faith, anonymity, identity disclosure, social disinhibitive effects)

Scale of disclosure

User pages were captured and saved for analysis. I sampled 100 of them and examined each manually in order to develop a tailor-made construct that best measures users' levels of disclosure on Wikipedia.

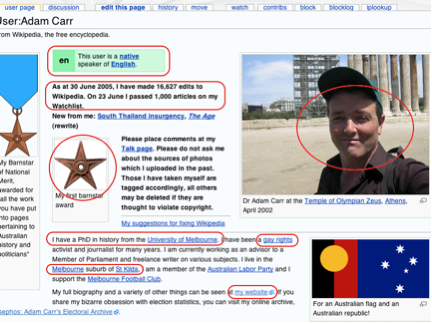

The picture illustrates the information a user provided, which served as a basis for developing the 24-construct checklist.

Adam Carr is one of the users sampled in this research. Mapping the information he revealed onto the three main categories gives: (1) demographic information: he has revealed his geographic location and occupation; (2) identity-related information: he has put his picture on his user page along with his political inclination and memberships; and (3) Wikipedia-related information: his page shows a barnstar (a token of recognition usually awarded by members of the Wikipedia community), his number of edits, and the articles he has been keenly working on (as shown by ‘1,000 article on my watchlist’), among other things. Having sampled 100 user pages, I built the construct to cover the different categories of information that users generally reveal on their user pages.

From the 100 pages I examined, 24 constructs were distilled as the yardstick for measuring the information that users generally give out on their user pages. Note that, within the scope of this research, there is no attempt to verify the validity of this information, although a Google search may help give an overview of a user who happens to give out their ‘real name’. As with other Internet research, the content given by users is difficult, if not impossible, to verify, so content validation is not part of this research.
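The scoring side of such a checklist can be sketched as counting which items appear on a page, per category and in total. The full list of 24 items is not reproduced in this section, so the eight item names below are hypothetical stand-ins drawn from the Adam Carr example; scoring by summing unweighted yes/no items is likewise an assumption.

```python
# Hypothetical subset of the 24-item checklist, grouped by the three categories.
CHECKLIST = {
    "demographic": ["location", "occupation"],
    "identity": ["photo", "political_views", "memberships"],
    "wikipedia": ["barnstar", "edit_count", "watchlist"],
}


def disclosure_scores(observed_items):
    """Count checklist items present on one user page, per category and in total."""
    scores = {
        category: sum(item in observed_items for item in items)
        for category, items in CHECKLIST.items()
    }
    scores["total"] = sum(scores.values())  # summed before "total" is added
    return scores


# Items noted on Adam Carr's page in the example above.
adam_carr = {"location", "occupation", "photo", "political_views",
             "memberships", "barnstar", "edit_count", "watchlist"}
print(disclosure_scores(adam_carr))
```

Keeping per-category subscores alongside the total is what allows each category to be correlated with edit counts separately.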

Results

Significant correlations are shown in brackets:

- ( )* Correlation is significant at the 0.05 level (2-tailed).

- ( )** Correlation is significant at the 0.01 level (2-tailed).

Items without brackets did not show a significant correlation with the number of edits.

Overall correlation between the 24 constructs and number of edits: (.265)**

Across the three main categories of disclosure, only the variables related to interaction with Wikipedia correlate significantly with the number of edits, whereas other variables, such as demographic or identity-related items, do not correlate significantly with the number of edits at all.
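The paper does not state which correlation coefficient was used. Assuming a Pearson correlation between each user's disclosure score and edit count, the sketch below computes the coefficient and the t statistic behind a two-tailed significance test. Plugging in the reported overall r = .265 and assuming n = 100 (the number of sampled user pages) gives a t statistic consistent with the ** marking.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for the null hypothesis rho = 0, with n - 2 degrees of freedom."""
    return r * sqrt((n - 2) / (1 - r * r))


# Reported overall correlation, assuming it was computed over 100 users.
t = t_statistic(0.265, 100)
print(round(t, 2))  # prints 2.72
```

With 98 degrees of freedom the two-tailed critical value at the 0.01 level is roughly 2.63, so t of about 2.72 clears it. Because edit counts are often highly skewed, a rank-based coefficient such as Spearman's may be worth checking as well; that is a suggestion, not what the paper reports.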

Limitations

The main limitation of this study is that the quality of edits has, for the time being, been left out of this paper.

Limited Scope

There are currently about two million pages and six hundred thousand articles on Wikipedia, and users have made 21,549,609 edits since July 2002. This research captured and analyzed only 20 minutes of edits, so further research with more representative samples is needed to gather more substantial data for analysis.

Edit count is not enough (Quality)

In this research the main variable is edit count, i.e. the number of edits contributed by a user. Edit count by itself does not reveal the quality of edits, and it can be confounded by vandalism or by activity unrelated to building Wikipedia, such as users discussing personal opinions on their community pages. Further research will therefore need to treat quality and relevance to the building of Wikipedia as important variables when studying the relationship between users and their contributions.

Confused by bots

Bots are robot-like machines or programs that take over repetitive tasks on Wikipedia. As Andre Engels, the inventor of the bots, puts it: ‘Bot is a machine that looks like a human […] A program that looks like a human visitor to a site’. Developers on Wikipedia have created bots to take care of repetitive tasks, so the number of edits contributed by some of these bots sometimes exceeds the highest number of edits by any human. In this research I tried to verify users by visiting their user pages and discarded those stamped as bots. For users without a user page, however, it is hard, if not impossible, to verify whether they are machines or humans.
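The screening step described above can be sketched as follows. How a page is "stamped" as a bot is an assumption here: the sketch treats any user page containing a marker string (by default `{{bot`, compared case-insensitively) as a bot page, and all user names are hypothetical. Users without a user page are kept but reported separately, since they cannot be verified either way.

```python
def screen_for_bots(edit_counts, user_pages, bot_marker="{{bot"):
    """Split users into kept users, discarded bots, and users that could not be checked.

    edit_counts: {user name: number of edits}
    user_pages: {user name: wikitext of the user page}, only for users who have one
    bot_marker: hypothetical text taken to mean the page is stamped as a bot
    """
    kept, bots, unverified = {}, [], []
    for user, edits in edit_counts.items():
        page = user_pages.get(user)
        if page is None:
            unverified.append(user)  # no user page: cannot tell bot from human
            kept[user] = edits       # kept, since there is no evidence either way
        elif bot_marker.lower() in page.lower():
            bots.append(user)        # stamped as a bot: discarded
        else:
            kept[user] = edits
    return kept, bots, unverified


counts = {"ExampleBot": 50000, "ExampleHuman": 1200, "NoPageUser": 300}
pages = {
    "ExampleBot": "{{Bot|ExampleOperator}} Fixes interwiki links.",
    "ExampleHuman": "I mostly edit history articles.",
}
kept, bots, unverified = screen_for_bots(counts, pages)
print(kept, bots, unverified)
```

A plain substring test is crude (it would also match a template whose name merely starts with "bot"), which is one more reason to report unverified and flagged users separately rather than silently dropping them.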

References

- Lih, A. (2004). Wikipedia as participatory journalism: Reliable sources? Metrics for evaluating collaborative media as a news resource. Paper presented at the 5th International Symposium on Online Journalism, Austin, USA.

- Mulgan, G., Steinberg, T., & Salem, O. (2004). Wide open: Open source methods and their future potential. Demos.

- Viégas, F. B., Wattenberg, M., & Dave, K. (2004). Studying cooperation and conflict between authors with history flow visualizations. Paper presented at CHI 2004, Vienna, Austria.