Template A/B testing/Results

This is a final report and summary of findings from more than six months of A/B testing user talk templates on Wikimedia projects. Conclusions are bolded below for easier skimming, but you're highly encouraged to compare the different versions of the templates and read our more detailed analysis.

Warnings about vandalism

- Further reading: Huggle data analysis notes

Huggle is a powerful vandal-fighting tool used by many editors on English, German, Portuguese, and other Wikipedias. The speed and ease of using Huggle mean that reverts and warnings can be issued in very high volumes, so it is not surprising that Huggle warnings account for a significant proportion of first messages to new editors.

Together with the original Huggle test run during the Summer of Research, we ran a total of six Huggle tests on two Wikipedias: English and Portuguese.

Example templates tested:

English

Our first experiment tested many different variables in the standard level one vandalism warning: image versus no image, and more teaching language, more personalization, or a mix of both (read). The results showed us that it is better to test a small number of variables at once, but there were some indications that increasing personalization led to a better outcome. This test reached 3241 registered and unregistered editors, of whom 1750 clicked through from the "new messages" banner. More details about our first analysis round are available.

First experiment templates:

- default

- "teaching" version

- "personalized" version

- personalization and teaching version combined

Iterating on the results from the first Huggle test, we tested the default level one vandalism warning against the personalized warning and against an even more personalized version, which also removed some of the "directives"-style language and links to general policy pages. There were 2451 users in this test, whom we hand-coded into the following subcategories:

- 420 were 'vandals' who obviously should have been reverted and may have merited an immediate block. Examples: 1, 2.

- 982 were 'bad faith editors', people committing vandalism for whom the simple level 1 warning they received was appropriate. Examples: 1, 2.

- 702 were 'test editors', people making test edits that should be reverted for quality reasons but who aren't obvious vandals. Examples: 1, 2.

- 347 were 'good faith editors', who were clearly trying to improve the encyclopedia in an unbiased, factual way. Examples: 1, 2.
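As a quick arithmetic check, the four hand-coded categories should account for the full sample of 2451 users. The short Python sketch below restates the counts from the list above, confirms the total, and computes each category's share of the sample. The variable names are illustrative only.

```python
# Hand-coded categories from the second Huggle experiment (counts from the text).
categories = {
    "vandals": 420,
    "bad faith editors": 982,
    "test editors": 702,
    "good faith editors": 347,
}

# Should equal the 2451 users in this test.
total = sum(categories.values())

# Share of the sample in each category, as a percentage rounded to one decimal.
shares = {name: round(100 * count / total, 1) for name, count in categories.items()}

if __name__ == "__main__":
    print(total)
    for name, share in shares.items():
        print(f"{name}: {share}%")
```

With these counts, good-faith editors make up about 14% of the warned users in this test.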

Our results confidently showed that, in the short term, the two new warning messages did better than the default at retaining good-faith users (those who were not subsequently blocked). However, as time passed (and, presumably, as those users received more default template messages on their talk pages that were not personalized for their other editing activities), retention rates for the control and test groups converged.

Second experiment templates:

- default

- "personalized" version

- "no directives" version

Read about an editor from this test.

In our last test of vandalism warnings, we tested the best-performing template message from the second test against the default and against a much shorter new message written by a volunteer. Results showed that both new templates did better than the default at encouraging good-faith editors to contribute to articles in the short term. The personalized/no-directives version was a little more successful than the short message at getting users to edit outside the article space (e.g., on user talk pages), due to its invitation to ask the warning editor questions.

Third experiment templates:

- default

- "no directives" version

- shorter version

Conclusions for all three tests:

As we hypothesized, changing the tone and language of the generic vandalism warning, especially by:

- increasing the personalization (active voice rather than passive, explicitly stating that the sender of the warning is also a volunteer editor, including an explicit invitation to contact them with questions);

- decreasing the number of directives and links (e.g., "use the sandbox," "provide an edit summary");

- and decreasing the length of the message;

...led to more users editing articles in the short term (i.e., more namespace 0 edits in the 0-3 days after receiving the warning). Editors who received the message that best conformed to this style went on to make, on average, a number of edits equal to 20% of their former contributions. This was despite the fact that they had been reverted and then warned, a form of rejection that is the single biggest predictor of a decrease in new editor contributions.
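The short-term outcome measure above (namespace 0 edits in the 0-3 days after a warning) can be expressed as a simple windowed count. The sketch below is only an illustration. The `Edit` record, the function name, and the boundary handling are all assumptions: it counts edits strictly after the warning, up to and including exactly 3 days later. None of this reflects the study's actual schema or code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Edit:
    """Hypothetical edit record: when the edit was made and its namespace number."""
    timestamp: datetime
    namespace: int  # 0 = article namespace, 3 = user talk, etc.


def article_edits_after_warning(edits, warned_at, days=3):
    """Count namespace 0 (article) edits made after the warning and within `days` days."""
    window_end = warned_at + timedelta(days=days)
    return sum(
        1
        for e in edits
        if e.namespace == 0 and warned_at < e.timestamp <= window_end
    )


if __name__ == "__main__":
    warned = datetime(2012, 1, 1, 12, 0)
    history = [
        Edit(datetime(2012, 1, 1, 11, 0), 0),  # before the warning: not counted
        Edit(datetime(2012, 1, 2, 9, 0), 0),   # article edit inside the window
        Edit(datetime(2012, 1, 3, 9, 0), 3),   # user talk edit: not an article edit
        Edit(datetime(2012, 1, 6, 9, 0), 0),   # after the 3-day window: not counted
    ]
    print(article_edits_after_warning(history, warned))  # prints 1
```

Comparing this count between the control and test groups, per warned editor, is one way to operationalize "more users editing articles in the short term."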

Portuguese

Working with community members from Portuguese Wikipedia, we designed a test similar to our second Huggle experiment. It compared the default level one vandalism warning with a personalized version and with a personalized, no-directives version.

Our total sample size in this test was 1857 editors. However, only a small number of registered editors were warned during the test (half as many as in the English Huggle 2 test), so the sample was too small to test for quantitative effects of the templates.

Additionally, both registered and unregistered editors who were warned were extremely unlikely to edit afterwards, which also made it difficult to see any effect from the change in templates. Qualitatively, this points to the impact of Huggle as the most common vandalism-fighting tool on Portuguese Wikipedia. It also points to Huggle's negative effect on new editor retention, which has limited the size of the lusophone Wikipedia community.

Templates:

Warnings about specific issues, such as blanking, lack of sources, etc.

- Further reading: Huggle data analysis notes

Based on our findings from previous experiments on the level one vandalism warning in Huggle, we decided to use our winning message strategy (a personalized message with no directives) to create new versions of all the other level one user warnings used by Huggle. These issue-specific warnings cover test edits, spam, non-neutral POV, unsourced content, and attack edits. They also cover unsourced edits to biographies of living people, deliberately inserted errors, and blanking articles or deleting large portions of text without explanation. Some examples:

Templates:


One of the key initial results of this test was that some issue-specific warnings are very rarely used. The most common issues encountered by Hugglers are unsourced additions, content removal without an edit summary, test edits, and spam. This suggests that the most common mistakes made by new or anonymous editors are not particularly malicious and should, for the most part, be treated in good faith.

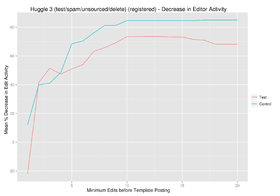

When we compared the outcome of the control group to the group that received personalized/no directives warnings, the templates had different effects on registered and unregistered users: for registered users who had made at least 5 edits before being warned, the new templates did better at getting people to edit articles. For unregistered editors, the effect was reversed, and the default version was more successful.

Our explanation is that the new template's style was effective only for registered editors who had shown a minimum of commitment. That style was extremely friendly, reminded editors of the community aspect of encyclopedia building, and encouraged them to edit again to fix their mistakes. Confidence in the result for registered editors also increased when looking at all namespaces. This supports the theory that the invitation to the warning editor's talk page, or the other links, are helpful.

- The new templates in this experiment were clearly more effective than the default for registered editors who'd made at least 5 edits before being warned.

- New versions of the templates in this test were not as effective as the default for unregistered editors. For editors with less experience, the results were muddled.
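The results above are reported separately for registered and unregistered editors, and by how active each editor was before the warning (the 5-edit threshold). The sketch below shows one way to bucket warned users into those segments. The tuple layout `(user_id, is_registered, prior_edits)` and the segment labels are hypothetical, and the study's actual segmentation may have differed.

```python
from collections import defaultdict

# Threshold from the text: "at least 5 edits before being warned".
PRIOR_EDIT_THRESHOLD = 5


def segment(is_registered: bool, prior_edits: int) -> str:
    """Assign a warned user to one of four hypothetical analysis segments."""
    kind = "registered" if is_registered else "unregistered"
    if prior_edits >= PRIOR_EDIT_THRESHOLD:
        activity = f"{PRIOR_EDIT_THRESHOLD}+ prior edits"
    else:
        activity = f"<{PRIOR_EDIT_THRESHOLD} prior edits"
    return f"{kind}, {activity}"


def group_by_segment(users):
    """Group (user_id, is_registered, prior_edits) tuples by segment label."""
    groups = defaultdict(list)
    for user_id, is_registered, prior_edits in users:
        groups[segment(is_registered, prior_edits)].append(user_id)
    return dict(groups)
```

Each segment's control and test outcomes can then be compared separately. The direction of the effect differed by segment in these tests, so this keeps opposing effects from cancelling out in a pooled comparison.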

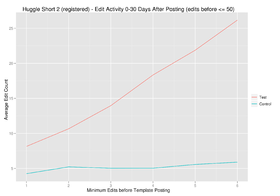

In our next test of issue-specific templates, we ran the default against a very short version of each message written by an experienced Wikipedian. This version was friendlier than the current default in some respects, but it did not begin by stating who the reverting editor was. It focused almost solely on the action the warned editor should take.

Templates:


In this experiment, we saw again that new versions of the templates did better at encouraging registered editors to edit overall. For unregistered editors, the control did better when looking only at edits to articles, but the results were flipped when looking at edits to any namespace.

- Both new templates, the longer and shorter versions, were far superior messages when directed at registered editors.

- The new templates were not more effective for unregistered editors, though results were unclear for IPs with fewer than 5 edits before being warned.

- Compared directly against the default, the shorter new template was clearly superior at encouraging edits.

Warnings about external links

In addition to the test of spam warnings via Huggle, we experimented with new messages for XLinkBot, which warns users who insert inappropriate external links into articles.

Example templates:

Warnings:

Welcome messages:

- default welcome left by the bot along with level 0 warnings

- new welcome

Read about an editor from this test.

Quantitatively, the results were inconclusive, with no significant retention-related effects among the 3121 editors in this test. We did note that the bot sends multiple warnings to IPs. These combine with Huggle- and Twinkle-issued spam warnings and pile up on IP talk pages, making the effects of individual messages hard to tease apart.

Though there was no statistically significant change in editor retention in the test, qualitative data suggests that:

- more boldly declaring that the reverting editor was a bot that sometimes makes mistakes may encourage editors to revert it more or otherwise contest its actions.

- the approach used for vandalism warnings in prior tests may not be effective for editors adding external links that are obviously unencyclopedic in some way (even if they are not always actually spam). These complex issues require very specific feedback to editors, and XLinkBot uses a complex cascading set of options to determine the most appropriate warning to deliver.

Warnings about test edits

Read about an editor from this test.

In addition to our experiment with test edit warnings in Huggle, we worked with 28bot, run by User:28bytes. 28bot is an English Wikipedia bot that reverts and warns editors who make test edits. It automatically identifies very common accidental or test edits, such as the default text added when clicking the bold or italics buttons in the edit toolbar.

Although 28bot is not an extremely high-volume bot, the users it reverts are of particular interest because they are not malicious at all. Improving the quality of its warnings could help encourage test editors to improve and stick around on Wikipedia.
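The sketch below illustrates the kind of check described above. It flags added wikitext that still contains placeholder text the classic MediaWiki edit toolbar inserts when a button is clicked without selecting anything (for example `'''Bold text'''`). The placeholder list and the function name are assumptions for illustration; 28bot's actual detection rules are not documented here.

```python
# Illustrative placeholder strings inserted by the classic MediaWiki edit toolbar
# when a button is clicked with no text selected. This list is an assumption and
# is not taken from 28bot's actual rules.
TOOLBAR_PLACEHOLDERS = (
    "'''Bold text'''",
    "''Italic text''",
    "[[Link title]]",
    "== Headline text ==",
)


def looks_like_toolbar_test(added_text: str) -> bool:
    """Return True if the added wikitext contains an unmodified toolbar placeholder."""
    return any(placeholder in added_text for placeholder in TOOLBAR_PLACEHOLDERS)
```

A plain substring match like this keeps false positives low, because these exact placeholder strings rarely appear in deliberate content. That property matters for a bot that reverts automatically.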

Templates:

- default template for registered editors

- new template for registered editors

- default template for IP editors

- new template for IP editors

In comparing the default and test versions of the templates used by 28bot, we found an unexpected bias in the sample. Almost all editors in the control group had received warnings before receiving the warning from 28bot, while editors who received test messages had almost no prior warnings. From previous tests, we know that prior warnings tend to dilute the impact of any new message. Additionally, the quantitative data from this test showed that prior warnings are a very significant predictor of future warnings and blocks. This undermined any attempt to compare the effectiveness of the templates against each other, but it may be useful for developing algorithmic methods of identifying problematic users.

Though comparing 28bot templates proved unsuccessful, we did have a valuable alternative: comparing the survival of editors reverted and warned by 28bot with that of editors reverted by RscprinterBot. This comparison is particularly interesting because RscprinterBot, for the most part, does not warn test editors, yet it targets almost exactly the same population of test editors as 28bot. We looked at a combined sample of 236 editors from these bots.

Comparing those reverted and warned by 28bot against those simply reverted by RscprinterBot, we saw that registered editors who received a template explaining why they were reverted and how to respond significantly outperformed editors who received no message. The proportion of editors who went on to make at least one edit after being reverted and warned by 28bot was 0.41, compared with 0.09 for those reverted by Rcsprinterbot.

This finding does not extend to unregistered editors, for whom we found no significant difference in subsequent editing activity. There was also no difference in the number of editors from each group who went on to be blocked. This is a positive result, considering that one group's subsequent activity was appreciably higher.

You can read more in our data analysis journal.

Warnings about files

Read about an editor from this test.

- Further reading: our data analysis

In this test, we worked with English Wikipedia's ImageTaggingBot to test warnings delivered to editors who upload files that are missing some or all of the vital information about licensing and source. We ran our test with 1133 editors who were given a warning by ImageTaggingBot.

Templates:


The only statistically significant result we found was between the two templates shown above: the default warning about a lack of any source or license information, and the new version tested. Only 9.6% of editors who received the new version edited in the file namespace at all afterwards, compared with 18.6% of those who received the default. Since correcting files is the desired result of these notifications, we infer that the urgency of the default message is more effective and should be retained.

Warnings about copyright

In this test, we worked with English Wikipedia's CorenSearchBot to test different kinds of warnings to editors whose articles were very likely copied, in part or wholesale, from another website. CorenSearchBot relied on a Yahoo! API and is currently no longer in operation. In its prime, however, it was English Wikipedia's number one identifier of copyright violations in new articles. Read more about our data analysis in our journal notes.

The templates we tested were the welcome template given by the bot, a warning about copy-pasted excerpts, and a warning about entire articles that appeared to be copy-pasted. We also separated these warnings by experience level: anyone who had made more than 100 edits before the warning received a special message for very experienced editors.

The results of this test were largely inconclusive, due in part to the bot breaking down in the midst of the test.

Deletion notifications


- Further reading: our data analysis notes and qualitative feedback from the users who had been affected by these tests.

In this test, we attempted to rewrite the language of two of the three kinds of deletion notices used on English Wikipedia, to test whether clearer, friendlier language was better at retaining users and helping them properly contest a deletion of the article they authored.

Articles for deletion (AFD)

The goal of the new version we wrote for the AFD notification was to gently explain what AFD was, and to encourage authors of articles to go and add their perspective to the discussion.

For a quantitative analysis, we looked at editing activity in the Wikipedia (or "project") namespace among the 693 editors in the test and control groups, and found no statistically significant difference in the amount of activity there.

Since AFD discussions take place in this namespace, we conclude that the new template likely did not significantly improve editors' motivation to participate in deletion discussions. AFD is a complicated and somewhat intimidating venue for someone unfamiliar with its norms, so the difficulty of motivating editors to participate there is not a surprise.

However, qualitative feedback we received from new editors suggested that simply reducing the number of excess links in the current AFD notification was an improvement. The default includes links to a list of all policies and guidelines and to documentation on all deletion policies.

Templates:

Proposed deletion (PROD)

Read about an editor from this test.

Since the primary method for objecting to a PROD is simply to remove the template, we used the API to search for edits by contributors in our test and control groups that removed it. We looked only at the period shortly after they received the message, rather than over all time.
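Detecting the objection itself comes down to checking whether an edit removed the PROD template from the article's wikitext. The sketch below makes several assumptions. It assumes the wikitext before and after each edit has already been retrieved; the API calls are not shown. It also assumes the tag appears as `{{Proposed deletion/dated|...}}`, `{{Proposed deletion|...}}`, or the `{{prod}}` shortcut. Other redirects, and BLP PROD tags, are deliberately not matched, so this is a simplification rather than the query the study actually ran.

```python
import re

# Assumed tag forms: {{Proposed deletion/dated|...}}, {{Proposed deletion|...}},
# and the {{prod}} shortcut. "{{Prod blp...}}" and other redirects are not matched.
PROD_PATTERN = re.compile(
    r"\{\{\s*(?:proposed deletion|prod)\s*(?:/dated)?\s*(?:\||\}\})",
    re.IGNORECASE,
)


def has_prod(wikitext: str) -> bool:
    """True if the wikitext contains one of the assumed PROD tag forms."""
    return PROD_PATTERN.search(wikitext) is not None


def removed_prod(before: str, after: str) -> bool:
    """True if a PROD tag is present before the edit and absent after it."""
    return has_prod(before) and not has_prod(after)
```

Counting, per group, the editors with at least one `removed_prod` edit in the chosen time window gives numbers of the kind reported below.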

For articles that were not deleted, there was no significant difference:

- 16 (2.8%) of the 557 editors who received the test message removed a PROD tag.

- 13 (2.2%) of the 579 editors who received the control message removed a PROD tag.

So while the test group removed slightly more PROD templates, the difference was not statistically significant.

However, when examining deleted revisions (i.e. articles that were successfully deleted via any method), we see a stronger suggestion that the new template more effectively told editors how and why to remove a PROD template:

- 96 of 550 users (17%) whose articles were deleted removed the PROD tag if they got the test message.

- 70 of 550 users (13%) removed the PROD tag if they got the standard message we used as a control.

Note that these rates are much higher than for non-deleted articles (where roughly 2% of each group removed the tag). This makes sense: most new articles on Wikipedia get deleted in one way or another.
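The report does not say which significance test was used. As one hedged illustration, the sketch below applies a standard pooled two-proportion z-test to the PROD counts above. This is a swapped-in technique and not necessarily the authors' method. It yields a result consistent with the text: the non-deleted comparison (16/557 vs. 13/579) is far from significant (z ≈ 0.67), while the deleted-article comparison (96/550 vs. 70/550) gives z ≈ 2.19, which clears the conventional two-sided 0.05 threshold.

```python
from math import erfc, sqrt


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided tail probability of a standard normal: 2 * (1 - Phi(|z|)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Articles not deleted: test 16/557 vs. control 13/579.
    print(two_proportion_z_test(16, 557, 13, 579))
    # Deleted articles: test 96/550 vs. control 70/550.
    print(two_proportion_z_test(96, 550, 70, 550))
```

The pooled form is the usual choice when the null hypothesis is that both groups share one underlying removal rate. For small counts like these, an exact test would be a reasonable alternative.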

These results match the qualitative feedback we received from Wikipedians about these templates. The new version was much clearer about how new editors could object to a PROD. However, it did not prepare them for the likelihood that their article would be nominated for deletion by another method if the PROD failed to stick.

Templates:

Speedy deletion (CSD)

Read about an editor from this test.

English Wikipedia's SDPatrolBot is a bot that warns editors for removing a speedy deletion tag from an article they started. We hypothesized that the main reason this happens is not a bad-faith action on the part of the article author, but because the author is new to Wikipedia and doesn't understand how to properly contest a speedy deletion.

To test this hypothesis, we created warnings that focused less on reproaching the user and more on inviting them to contest the deletion on the article's talk page, and tested the messages with a total of 858 editors.

The results showed that the new template did succeed in getting authors to edit the talk page rather than remove the speedy deletion tag, but only for those authors who had made many edits to their article before getting warned (i.e., those who had worked hard on the article and were probably more invested in contesting the deletion).

One unexpected effect of the test, however, was that, overall, the new warning made users more likely to be warned again by SDPatrolBot – i.e., to remove the speedy deletion tag a second time. This supports our results from the test with XLinkBot, which suggested that declaring that the reverting and warning editor was in fact a bot encouraged editors to take it less seriously.

Templates:

Welcome messages

Portuguese Wikipedia

In Portuguese, we tested a plain text welcome template very similar to the default welcome in English Wikipedia, as compared to the extremely graphical default welcome that is the current standard in the lusophone Wikipedia. Further reading: our data analysis.

Templates:

When looking at the contributions of all editors who received these two welcome templates, we initially did not see a significant difference in their editing activity, with 22-25% of the 429 editors making an edit after being welcomed.

However, when we limited our analysis to those who made at least one edit before being welcomed, we saw a small effect. 30.77% of those who received the test template edited after being welcomed, compared with 42.59% of those who received the standard Portuguese Wikipedia welcome. This result is only marginally significant statistically. If the Portuguese-speaking community is looking to implement any permanent change to these templates, further discussion about interpreting the results is highly recommended.

Some possible explanations for the result include:

- Simple bias in the groups of editors sampled. There were slightly more editors in the control sample and this seemed to minutely skew the results in favor of the control in a non-significant way, even before we filtered the analysis to only those who made edits before being welcomed.

- The test message was not read as much, as it was not graphical in any way.

- The current standard Portuguese welcome message begins with directives to Be Bold and collaborate with other editors, and only includes recommended reading ("Leituras recomendadas") at the bottom of the template. The test version presents a list of rules more directly and up front. This may be discouraging, even though the large, graphical style of the current welcome is quite overwhelming.

German Wikipedia

The German Wikipedia welcome test was designed to measure whether reducing the length and number of links in the standard welcome message would lead new users to edit more. Though the sample size was very small (about 90 users total), the results were encouraging:

- 64% of editors who received the standard welcome message went on to make at least one edit on German Wikipedia;

- 88% of those who received the same welcome message with fewer links went on to edit;

- 84% of those who received a very short, informal welcome message went on to edit.

Templates:

Overall, these results support our findings from other tests: Welcome messages that are relatively short, to the point, and which contain only a few important links are more effective at encouraging new editors to get involved in the project. Our recommendation is to change the default German welcome template to the short version.

General talk page-related findings

- Very experienced Wikipedians receive a lot of templates too. We began this testing project thinking in terms of new users. Over the course of many of these tests, however (especially Twinkle, ImageTaggingBot, and CorenSearchBot), it quickly became clear that user warnings and deletion notices affect a huge number of long-time Wikipedians, too. Yet despite the considerable difference in Wikipedia knowledge between a brand-new user and someone who has been editing for years, in most cases people get the same message regardless of their experience.

- The more warnings on a talk page, the less effective they are. Especially for anonymous editors, the presence of old, irrelevant warnings on a page strongly diluted the effectiveness of a new warning or other notification. We strongly encourage regular archiving of IP talk pages by default, since these editors generally cannot request it for themselves. We began testing this via SharedIPArchiveBot.

- When reverting registered editors, no explanation is even worse than a template. Quantitative data from comparing test editors reverted by 28bot and Rscprinterbot strongly backed up previous surveys suggesting that the most important thing to do when reverting someone is to explain why. In the 2011 Editor Survey, only 9% of respondents said that reverts with an explanation were demotivating, while 60% said that reverts with no explanation were demotivating.

Future work

Since the results of the various tests differed considerably, and some were inconclusive, we do not recommend any single blanket change to all user talk messages. However, we do recommend further or new tests in some areas, as well as permanent revisions to some templates.

Promising future tests include, but are not limited to:

- The speedy deletion (CSD) warnings left by Twinkle

- Block notices, especially username policy blocks which are in good faith

- More tests of welcome template content, especially outside of English

Templates we recommend be revised directly based on successful testing:

- Level one warnings in English Wikipedia, especially those used by Huggle and Twinkle

- Twinkle articles for deletion (AFD) and proposed deletion (PROD) notifications

Perhaps most importantly, none of these experiments tested whether handwritten messages were superior or inferior to templates of any kind. A test of whether asking for custom feedback in some cases (such as through Twinkle) produces better results is merited, and is part of the idea backlog for the Editor Engagement Experiments team.