Research:Wiki-Reliability: A Large Scale Dataset for Content Reliability on Wikipedia

This project builds on WikiProjectReliability, which is dedicated to improving the reliability of Wikipedia articles. The quality and reliability of Wikipedia content are maintained by a community of volunteer editors. Machine learning and information retrieval algorithms could help scale up editors’ manual efforts around Wikipedia content reliability. However, there is a lack of large-scale data to support research in this direction. To fill this gap, we release Wiki-Reliability, the first dataset of English Wikipedia articles annotated with a wide set of content reliability issues.

Background

On Wikipedia, the review and moderation of content is usually self-governed by Wikipedia’s volunteer community of editors, through collaboratively created policies and guidelines [1] [2]. However, despite the size of Wikipedia’s community (41k monthly active editors for English Wikipedia in 2020), maintaining Wikipedia’s content is labor-intensive: patrollers reviewing at a rate of 10 revisions per minute would still need 483 labor hours per day to review the 290k edits saved each day across all language editions of Wikipedia [3].
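The 483-hour figure follows directly from the cited rates:

```latex
\frac{290{,}000\ \text{edits/day}}{10\ \text{edits/min}} = 29{,}000\ \text{min/day} = \frac{29{,}000}{60}\ \text{h/day} \approx 483\ \text{h/day}
```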

Given the large labor costs of patrolling new edits, automated strategies can help Wikimedia’s community of maintainers avoid task overload, allowing them to focus on higher-value content moderation. This has been done successfully at scale for counter-vandalism, for example with the ORES service [4], an open-source algorithmic scoring service that scores Wikipedia edits in real time using multiple independent Machine Learning classifiers.

The goal of the project is to encourage automated strategies for the moderation of content reliability, by providing Machine Learning datasets for this purpose.

Citation and Verifiability Templates

One of the ways reliability is governed on Wikipedia is through templates, which appear as messages on the pages that include them and warn of gaps in the reliability of a page’s content. We rely on these templates to build this dataset. Templates are tags used by expert Wikipedia editors to indicate content issues, such as a “non-neutral point of view” or “contradictory articles”, and serve as a strong signal for detecting reliability issues in a revision.

WikiProjectReliability maintains a list of maintenance templates related to citation and verifiability issues. These serve not only as a warning for readers, but also as a maintenance tag telling editors that fixes are needed to improve the reliability of an article. Thus, the presence of these templates gives an indication of an article’s reliability.

We select the 10 most popular reliability-related templates on Wikipedia and propose an effective method to label almost 1M Wikipedia article revisions as positive or negative with respect to each template. Each positive/negative example in the dataset comes with the full article text and 20 features from the revision’s metadata.

Previous work

Previous works [5] [6] obtained positive and negative instances of a template by randomly sampling pages from a current snapshot, rather than by comparing consecutive revisions. Our approach captures multiple instances of template addition and removal over the full revision history of pages.

Bhosale et al. [6] constructed a negative set from Wikipedia’s Featured Articles and Good Articles. This ignores stylistic differences between those articles and the flagged ones, which a model could learn to predict instead of the reliability issue itself. In contrast, our approach accounts for differences in article category and quality, because we extract pairs of related, contrasting examples. We surmise that this improves a model’s ability to learn from the data. Additionally, our approach addresses the class imbalance issue in which positive examples outnumber negative ones.

Methodology

Selection of templates

We categorised the 41 WikiProjectReliability maintenance templates by level: article, section, and in-line. We then manually curated the maintenance templates based on their impact on Wikimedia, prioritising templates of interest to the community.

Parsing Wikipedia Dumps

The full history of Wikipedia articles is available through periodically updated XML dumps. We use the full English Wikipedia dump from September 2020, which is 1.1 TB compressed in bz2 format. We convert this data to AVRO and process it using PySpark. We apply a regular expression to extract all templates in each revision, and retain all articles that contain templates from our predefined list. Next, using the MediaWiki History dataset, we obtain additional metadata about each revision of these articles.
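The template-extraction step can be sketched in plain Python as below. The exact regular expression used in the pipeline is not given here, so the pattern, the lower-casing normalisation, and the hypothetical `RELIABILITY_TEMPLATES` subset are our assumptions; the sketch also does not resolve template redirects (alternative names for the same template), which a production pipeline would likely need to handle.

```python
import re

# Captures the name of each template transclusion, e.g. "{{Unreferenced|date=May 2020}}"
# yields "Unreferenced". Templates nested inside parameters are matched too; parser
# functions and magic words are not filtered out in this simplified sketch.
TEMPLATE_NAME = re.compile(r"\{\{\s*([^{}|]+?)\s*(?:\||\}\})")

# Hypothetical subset of the predefined template list (assumption).
RELIABILITY_TEMPLATES = {"unreferenced", "one source", "original research", "hoax"}


def extract_templates(wikitext: str) -> set[str]:
    """Return the normalised (lower-case, underscores as spaces) template names in a revision."""
    names = set()
    for match in TEMPLATE_NAME.finditer(wikitext):
        names.add(match.group(1).replace("_", " ").strip().lower())
    return names


def reliability_templates(wikitext: str) -> set[str]:
    """Templates from the predefined list that appear in this revision."""
    return extract_templates(wikitext) & RELIABILITY_TEMPLATES
```

An article is retained if `reliability_templates` is non-empty for at least one of its revisions.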

Handling instances of vandalism

Detecting true positive and negative cases is further complicated by vandalism, where a template has been maliciously or wrongly added or removed. To handle this, we rely on the wisdom of the crowd and ignore revisions that were later reverted by editors. Research by Kittur et al. [7] suggests that 94% of reverts can be detected by matching MD5 checksums of revision content against those of earlier revisions. We therefore use the same checksum-matching approach, with SHA checksums, as a reliable method of detecting whether a revision was later reverted.

However, comparing SHA checksums across revisions is computationally expensive, as it requires processing the entire revision history. Fortunately, the MediaWiki History dataset provides monthly dumps of all events with pre-computed fields for computationally expensive features, to facilitate analyses. Of particular interest is the revision_is_identity_reverted field of revision events, which marks whether a revision was reverted by a later revision; we use it to exclude all reverted revisions from the articles’ edit history.
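To illustrate what the pre-computed field captures, a simplified identity-revert check can be written with content checksums: when a revision's SHA-1 matches that of an earlier, non-adjacent revision, every revision in between is marked as reverted. This is our own simplification; the exact rules behind revision_is_identity_reverted in MediaWiki History may differ, and `identity_reverted` is a hypothetical helper.

```python
import hashlib


def sha1(text: str) -> str:
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def identity_reverted(texts: list[str]) -> list[bool]:
    """Flag the revisions (given in chronological order) undone by an identity revert.

    An identity revert is a revision whose content checksum equals that of an
    earlier revision, restoring it and discarding every revision in between.
    """
    reverted = [False] * len(texts)
    last_seen = {}  # checksum -> index of the most recent revision with that content
    for j, text in enumerate(texts):
        digest = sha1(text)
        i = last_seen.get(digest)
        if i is not None and i < j - 1:
            # Revision j restores revision i, so revisions i+1 .. j-1 are reverted.
            for k in range(i + 1, j):
                reverted[k] = True
        last_seen[digest] = j
    return reverted
```

For example, in the history `["a", "a b", "vandal", "a b"]` the third revision is flagged, because the fourth restores the second.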

Obtaining positive and negative examples

For each citation and verifiability maintenance template, we construct a dataset consisting of positive and negative examples of the template’s presence. We iterate through all consecutive revisions in a page’s revision history to extract positive/negative pairs. We define a positive example as the revision in which a template was added. The presence of a template serves as a strong signal that a reliability issue exists in that revision.

Labeling negative samples in this context is non-trivial. While the presence of a template is a strong signal of a content reliability issue, the absence of a template does not necessarily imply the converse: a revision may contain a reliability issue that has not yet been reviewed by expert editors and flagged with a maintenance template. Therefore, we iterate through the article history following the positive revision and label as negative the first revision in which the template does not appear, i.e. the revision where the template was removed. The negative example then acts as a contrasting class, as it represents the same article after the reliability issue flagged in the positive example has been fixed.
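The pairing logic described above can be sketched as a single pass over a page's revisions in chronological order. The `Revision` record and the `template_pairs` function are hypothetical; only the exclusion of reverted revisions and the add/remove definitions come from the text.

```python
from typing import NamedTuple


class Revision(NamedTuple):
    revision_id: int
    templates: frozenset[str]  # reliability templates present in this revision
    reverted: bool             # e.g. taken from revision_is_identity_reverted


def template_pairs(revisions: list[Revision], template: str) -> list[tuple[int, int]]:
    """Return (positive_id, negative_id) pairs for one template on one page.

    Positive: a revision in which the template is added (absent before, present now).
    Negative: the next revision in which the template no longer appears.
    Reverted revisions are skipped, so vandalised additions/removals are ignored,
    and an addition that is never removed yields no pair.
    """
    pairs = []
    positive = None
    previous_has = False
    for rev in revisions:  # chronological order
        if rev.reverted:
            continue
        has = template in rev.templates
        if has and not previous_has:
            positive = rev.revision_id  # template added
        elif previous_has and not has:
            pairs.append((positive, rev.revision_id))  # template removed
            positive = None
        previous_has = has
    return pairs
```

Because the loop keeps scanning after each removal, a page whose template is added and removed several times contributes several pairs.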

Final pipeline

1. Obtain Wikipedia edit history

We download the September 2020 Wikipedia dump and load it in AVRO format for PySpark processing.

2. Obtain the “reverted” status of each revision

We query the MediaWiki History dataset to extract the “reverted” status of each revision.

3. Check if the revision contains a template

For each template in the template list, we loop over all non-reverted revisions to find the first revision in which the template has been added. We mark that revision as positive to indicate that it contains the template.

4. Process positive/negative pairs

We iterate through the revisions following each positive example to find the next non-reverted revision in which the template has been removed, and mark it as negative.

We share all processing code on our Github page.

Data

After processing all reliability-related templates for positive/negative pairs, we filter out templates with fewer than 1,000 pairs. This leaves us with the following top 10 templates:

| Template | Type | Pair count |
|---|---|---|
| unreferenced | article | 389966 |
| one source | article | 25085 |
| original research | article | 19360 |
| more citations needed | article | 13707 |
| unreliable sources | article | 7147 |
| disputed | article | 6946 |
| pov | article or section | 5214 |
| third-party | article | 4952 |
| contradict | article or section | 2268 |
| hoax | article | 1398 |
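As a quick consistency check, the pair counts in the table account for the “almost 1M” labelled revisions, assuming each pair contributes exactly one positive and one negative revision to its template's dataset:

```python
# Pair counts for the ten retained templates, copied from the table.
PAIR_COUNTS = {
    "unreferenced": 389966,
    "one source": 25085,
    "original research": 19360,
    "more citations needed": 13707,
    "unreliable sources": 7147,
    "disputed": 6946,
    "pov": 5214,
    "third-party": 4952,
    "contradict": 2268,
    "hoax": 1398,
}
MIN_PAIRS = 1000  # templates with fewer pairs are filtered out

kept = {name: n for name, n in PAIR_COUNTS.items() if n >= MIN_PAIRS}
total_pairs = sum(kept.values())
# Each pair contributes one positive and one negative revision.
total_samples = 2 * total_pairs
print(total_pairs, total_samples)  # 476043 952086
```

The set is heavily skewed: unreferenced alone accounts for about 82% of all pairs.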

Following that, we compute both metadata and text-level features for our data.

Metadata features

We extract metadata features for each revision in our data by querying the ORES API’s Article Quality model, resulting in 26 metadata features in total.

For our final released datasets, we narrow down the feature list by removing the least important features according to our benchmark binary classifiers. To analyse the performance of different features across all templates, we obtained feature importance scores from our benchmark XGBoost models, which achieved the best performance on our metadata features. We trained XGBoost models on subsets of features ordered by importance and recorded the accuracy of each. We then identified the features that most commonly ranked among the least important across all templates and removed them from our final dataset, reducing the feature set from 26 to 20. We confirmed that models trained on the reduced feature subset achieve comparable (and sometimes improved) accuracy relative to the full set. The schema for our released datasets for each template is as follows:

| Field | Description |
|---|---|
| page_id | Page ID of the revision |
| revision_id | ID of the revision |
| revision_id.key | ID of the corresponding pos/neg revision |
| revision_text_bytes | Change in bytes of revision text |
| stems_length | Average length of stemmed text |
| images_in_tags | Count of images in tags |
| infobox_templates | Count of infobox templates |
| paragraphs_without_refs | Total length of paragraphs without references |
| shortened_footnote_templates | Number of shortened footnotes (i.e., citations with page numbers linking to the full citation for a source) |
| words_to_watch_matches | Count of matches from Wikipedia's words to watch: words that are flattering, vague or endorsing a viewpoint |
| revision_words | Count of words for the revision |
| revision_chars | Number of characters in the full article |
| revision_content_chars | Number of characters in the content section of an article |
| external_links | Count of external links not in Wikipedia |
| headings_by_level(2) | Count of level-2 headings |
| ref_tags | Count of reference tags, indicating the presence of a citation |
| revision_wikilinks | Count of links to pages on Wikipedia |
| article_quality_score | Letter grade of article quality prediction |
| cite_templates | Count of templates that come up on a citation link |
| cn_templates | Count of citation needed templates |
| who_templates | Number of who templates, signaling vague "authorities", i.e., "historians say", "some researchers" |
| revision_templates | Total count of all transcluded templates |
| category_links | Count of categories an article has |
| has_template | Binary label indicating presence or absence of a reliability template in our dataset |
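The final step of the feature reduction described before the schema, counting which features most often rank among the least important across templates, can be sketched as below. The `bottom_k` cutoff and the function name are our assumptions, the importance values in the usage check are made up, and the accuracy comparisons on feature subsets are omitted; going from 26 features to 20 corresponds to `n_drop=6`.

```python
from collections import Counter


def features_to_drop(importances_by_template, bottom_k, n_drop):
    """Return the n_drop features that most often rank among the bottom_k
    least important features across the per-template models.

    importances_by_template: {template: {feature: importance_score}}
    """
    counts = Counter()
    for importances in importances_by_template.values():
        ranked = sorted(importances, key=importances.get)  # least important first
        counts.update(ranked[:bottom_k])
    # Most frequent first; ties broken alphabetically so the result is deterministic.
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [feature for feature, _ in ordered[:n_drop]]
```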

Text features

While certain citation-related templates can be distinguished by metadata features, some reliability templates are distinguished by differences in their content text. Thus, we also create text-based datasets for the purpose of text classification.

For each revision in our dataset, we query the API for its wikitext, which we parse to obtain only its plain-text content, filtering out all wikilinks and non-content sections (references, external links, etc.). Finally, we compute the diff between the texts of each positive/negative pair to obtain the changed sections of text for each revision. We produce two versions of our text datasets: one composed of the diff text, and another of the full article text.
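A minimal sketch of the diff step using Python's standard `difflib`, assuming the plain text is split into paragraphs on blank lines. The project's actual diff granularity and tooling are not specified here, so `changed_text` is a hypothetical helper.

```python
import difflib


def changed_text(pos_text: str, neg_text: str) -> tuple[str, str]:
    """Return (pos_diff, neg_diff): paragraphs that occur only in the positive
    revision and only in the negative revision, each joined in document order."""
    pos_paras = [p for p in pos_text.split("\n\n") if p.strip()]
    neg_paras = [p for p in neg_text.split("\n\n") if p.strip()]
    pos_only, neg_only = [], []
    for line in difflib.ndiff(pos_paras, neg_paras):
        if line.startswith("- "):    # present in the positive revision only
            pos_only.append(line[2:])
        elif line.startswith("+ "):  # present in the negative revision only
            neg_only.append(line[2:])
        # "  " (unchanged) and "? " (intraline hint) lines are ignored
    return "\n\n".join(pos_only), "\n\n".join(neg_only)
```

The two returned strings correspond to the diff versions of txt_pos and txt_neg; the full-text versions are simply the parsed article texts.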

The schema for our released text datasets is as follows:

| Field | Description |
|---|---|
| page_id | Page ID of the revision |
| revision_id_pos | Revision ID of the positive example |
| revision_id_neg | Revision ID of the corresponding negative example |
| txt_pos | Full/Diff text of the positive example |
| txt_neg | Full/Diff text of the corresponding negative example |

We release all our final datasets on Figshare.

Multilingual Wiki-Reliability

We expanded the Wiki-Reliability dataset beyond English to other language editions of Wikipedia, building datasets for the top 10 reliability-related templates.

Template Coverage Analysis

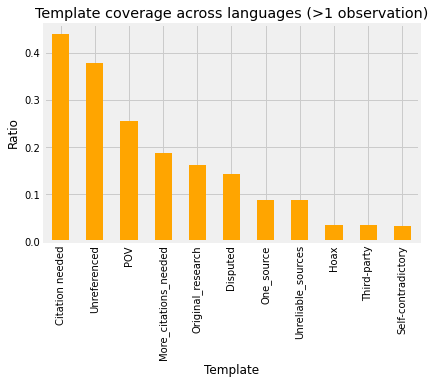

Each language project has its own localised versions of templates. We obtain these through the interlanguage links of the equivalent reliability templates across language projects.

We analyse template transclusion coverage at the article level across the 295 language wikiprojects, using the 2021-04 snapshot. Figure:Template Coverage across Languages plots template coverage across the 295 language wikis, i.e. the proportion of language wikis in which a template occurs in at least one article.

Figure:Language Coverage across Templates plots language coverage across the top 10 reliability templates, i.e. for each language wikiproject, the proportion of templates with at least 100 transclusions. Chinese, Japanese, and Turkish have at least 100 observations for 6 of the 10 templates.
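Both coverage measures can be computed from per-language transclusion counts as sketched below. The input format (article counts per template per language) and the function names are hypothetical; the thresholds of one article and 100 transclusions come from the text.

```python
def template_coverage(counts, templates, min_articles=1):
    """Fraction of language wikis in which each template occurs in >= min_articles articles.

    counts: {language: {template: number_of_articles_transcluding_it}} (hypothetical format)
    """
    n_langs = len(counts)
    return {
        t: sum(1 for per_lang in counts.values() if per_lang.get(t, 0) >= min_articles) / n_langs
        for t in templates
    }


def language_coverage(counts, templates, min_transclusions=100):
    """For each language wiki, the fraction of templates with >= min_transclusions."""
    return {
        lang: sum(1 for t in templates if per_lang.get(t, 0) >= min_transclusions) / len(templates)
        for lang, per_lang in counts.items()
    }
```

With the top 10 templates, a language reaching 6 of 10 templates, as Chinese, Japanese, and Turkish do, would get a language coverage of 0.6.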

Dataset Construction

We apply the same methodology to construct the reliability template datasets for each language wikiproject. For each language wikiproject, we obtain datasets of template addition/removal pairs for the language-linked version of each of the top 10 reliability templates, if it exists in that language wiki.

We observed that all 295 language projects also contained transclusions of English-language versions of a template. Figure:WikiReliability Template Paircounts (ruwiki) shows an example of the final template pair counts for the language-linked and English versions of the top 10 reliability templates for the Russian language project.

In general, the counts for the English template versions are far lower than for the language-linked versions. However, some language projects may directly use the English version of a template, such as POV, instead of a translated version. Thus, we also produce separate versions of the datasets in which the English-language version of the template is transcluded.

Datasets

We plan to release the multilingual datasets soon. The processing pipeline for multilingual Wiki-Reliability (rewritten in Spark) is available in our Github project.

Analysis

Downstream Task

To measure the informativeness of our dataset, we establish baseline models on the English Wiki-Reliability dataset for future work to compare against. For each template, we train baseline classifiers to predict the label has_template, which indicates the presence of a citation and verifiability issue. We train two kinds of baseline models: metadata feature-based models and text-based models.

Metadata-based Models

We train benchmark models for the metadata feature datasets across all templates. We benchmark each template on the following models: Logistic Regression, SVM, Decision Tree, Random Forest, K-Nearest Neighbours, Naive Bayes, XGBoost, and finally, an ensemble of all the aforementioned classifiers.

Each classifier is trained using 5-fold cross-validation, with GroupKFold grouping samples by page ID so that no page appears in both the train and test split of a fold: all revisions of a page are either in the train set or in the test set. We ensure that the train/test splits have balanced label distributions.
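A dependency-free sketch of the grouped split, assuming page IDs as the groups. It balances test-fold sizes by assigning groups largest-first to the fold that currently holds the fewest samples, similar in spirit to scikit-learn's `GroupKFold`; the helper name `group_k_fold` is ours. Since each positive revision and its paired negative come from the same page, grouping by page ID also keeps every pair within a single split.

```python
from collections import Counter


def group_k_fold(groups, n_splits=5):
    """Yield (train_indices, test_indices) pairs in which no group appears on both sides.

    groups[i] is the group (here: page ID) of sample i.
    """
    sizes = Counter(groups)
    if len(sizes) < n_splits:
        raise ValueError("need at least n_splits distinct groups")
    fold_of = {}
    fold_sizes = [0] * n_splits
    # Largest groups first; ties broken by str(group) for determinism.
    for group, size in sorted(sizes.items(), key=lambda kv: (-kv[1], str(kv[0]))):
        fold = min(range(n_splits), key=lambda f: fold_sizes[f])  # smallest fold so far
        fold_of[group] = fold
        fold_sizes[fold] += size
    for fold in range(n_splits):
        test = [i for i, g in enumerate(groups) if fold_of[g] == fold]
        train = [i for i, g in enumerate(groups) if fold_of[g] != fold]
        yield train, test
```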

For all experiments, we recorded the overall classification accuracy as well as the precision, recall, F1-score, and Area Under the ROC Curve.
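In the metadata results table for original research, the Accuracy and Recall columns are identical for every model, which is what support-weighted averaging over the two classes produces (weighted recall always equals accuracy). Assuming that averaging, the metrics can be sketched in plain Python as follows; the function names are ours, and AUC-ROC is computed through its rank interpretation rather than by tracing the curve.

```python
def weighted_scores(y_true, y_pred):
    """Accuracy plus support-weighted precision, recall and F1 over the classes in y_true."""
    n = len(y_true)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    precision = recall = f1 = 0.0
    for c in set(y_true):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        predicted = sum(p == c for p in y_pred)
        support = sum(t == c for t in y_true)
        prec = tp / predicted if predicted else 0.0
        rec = tp / support
        f = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        weight = support / n  # each class weighted by its share of true labels
        precision += weight * prec
        recall += weight * rec
        f1 += weight * f
    return accuracy, precision, recall, f1


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative (ties count 1/2).

    O(n_pos * n_neg), which is fine for a sketch.
    """
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))
```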

Text-based Models

We train text classification models on our text data across all templates. We convert the raw text into vector representations in two ways: by computing TF-IDF features, and by encoding the text with pre-trained word embeddings.

TF-IDF

We compute TF-IDF features from our raw text data with a maximum vocabulary size of 1000, after verifying that this yields minimal performance difference compared to training on the full vocabulary.
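The TF-IDF step with a capped vocabulary can be sketched without dependencies as follows. It follows what we understand to be scikit-learn's `TfidfVectorizer` defaults (vocabulary capped to the `max_features` most frequent terms across the corpus, smoothed IDF, L2 normalisation) but tokenises on whitespace for simplicity; the actual vectoriser and any settings beyond the 1000-term cap are assumptions.

```python
import math
from collections import Counter


def tfidf(docs: list[str], max_features: int = 1000):
    """Return (vocab, vectors) for a corpus, keeping only the max_features terms
    with the highest total count across all documents."""
    tokenised = [doc.lower().split() for doc in docs]  # naive whitespace tokeniser
    corpus_counts = Counter(tok for toks in tokenised for tok in toks)
    ranked = sorted(corpus_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    vocab = [term for term, _ in ranked[:max_features]]
    n_docs = len(docs)
    doc_freq = Counter(tok for toks in tokenised for tok in set(toks))
    # Smoothed inverse document frequency: ln((1 + n) / (1 + df)) + 1.
    idf = {t: math.log((1 + n_docs) / (1 + doc_freq[t])) + 1 for t in vocab}
    vectors = []
    for toks in tokenised:
        tf = Counter(toks)
        vec = [tf[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # L2 normalisation
        vectors.append([v / norm for v in vec])
    return vocab, vectors
```

Capping the vocabulary keeps every vector at a fixed length of at most 1000, which is what the downstream classifiers consume.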

We then benchmark the following models on our TF-IDF text features: Logistic Regression, SVM, Decision Tree, Random Forest, K-Nearest Neighbours, XGBoost.

Word Embeddings

Finally, we train simple binary text classifiers on pre-trained word embeddings. We use transfer learning, training the classifiers on top of pre-trained text embedding modules from TF-Hub. We evaluate the following TF-Hub embedding modules:

- nnlm-en-dim128: encodes each individual word into a 128-dimensional embedding vector and then averages these across a sentence for a final 128-dimensional sentence embedding.

- random-nnlm-en-dim128: a text embedding module with the same vocabulary and network as nnlm-en-dim128, but with randomly initialised weights

- universal-sentence-encoder: takes variable-length English text and outputs a 512-dimensional vector

We add a DNNClassifier classification layer on top of each text embedding module and train in two modes:

- Without module training: training only the classifier (i.e. freezing the module), and

- With module training: training the classifier together with the module

Each model is trained over 25 epochs.

Results

Metadata-based Models

Across all templates, the XGBoost model achieves the highest performance scores among the individual classifiers. The ensemble StackingCVClassifier model achieves improved or comparable performance relative to XGBoost on some templates, although on original research it trails XGBoost.

Below, we show the scores for the original research template:

| Model | Accuracy | Precision | Recall | F1 score | AUC-ROC |
|---|---|---|---|---|---|
| Logistic Regression | 0.554029 | 0.556082 | 0.554029 | 0.549863 | 0.575842 |
| Support Vector Machine | 0.580475 | 0.5814 | 0.580475 | 0.57928 | 0.609065 |
| Decision Tree | 0.561312 | 0.561323 | 0.561312 | 0.561293 | 0.561292 |
| Random Forest | 0.5789 | 0.580497 | 0.5789 | 0.576799 | 0.620721 |
| K-Nearest Neighbours | 0.558703 | 0.558993 | 0.558703 | 0.558158 | 0.583732 |
| Naive Bayes | 0.527143 | 0.540403 | 0.527143 | 0.479102 | 0.552176 |
| XGBoost | 0.605191 | 0.607226 | 0.605191 | 0.603311 | 0.654589 |
| Ensemble | 0.573011 | 0.573641 | 0.573011 | 0.57217 | 0.597178 |

We also computed the feature importances from our classifier, plotted below:

We release a notebook of the benchmarking models and scores for all other templates on PAWS.

Text-based Models

TF-IDF

For the original research template, we obtain the following results:

| Model | Accuracy | Precision | Recall | F1 score | AUC-ROC |
|---|---|---|---|---|---|
| Logistic Regression | 0.571408 | 0.571676 | 0.570918 | 0.570273 | 0.599031 |
| Support Vector Machine | 0.504947 | 0.252473 | 0.500000 | 0.338844 | 0.560614 |
| Decision Tree | 0.531903 | 0.532120 | 0.532075 | 0.531737 | 0.524847 |
| Random Forest | 0.539470 | 0.540924 | 0.540140 | 0.537245 | 0.564506 |
| K-Nearest Neighbours | 0.530411 | 0.530288 | 0.530261 | 0.530298 | 0.543235 |
| XGBoost | 0.565724 | 0.567247 | 0.564831 | 0.561859 | 0.597537 |

Models trained on our text-based features do not perform as well as those trained on metadata features. This is unsurprising, as the text data is harder to learn from. Because our data is at the article/diff level rather than the sentence level, the observed performance could be attributed to multiple factors, such as the TF-IDF features not being expressive enough.

We release a notebook of the benchmarking models and scores for all other templates on PAWS.

Word Embeddings

Finally, we also trained some simple binary text classifiers on our text data using pre-trained word embeddings from TF-Hub. The results for the original research template are presented below:

| Model | Accuracy | Precision | Recall | F1 score | AUC-ROC |
|---|---|---|---|---|---|
| nnlm-en-dim128 | 0.517463 | 0.519591 | 0.534816 | 0.527094 | 0.512551 |
| nnlm-en-dim128-module-training | 0.526909 | 0.525261 | 0.614616 | 0.566436 | 0.513625 |
| random-nnlm-en-dim128 | 0.476038 | 0.483633 | 0.621337 | 0.543904 | 0.462589 |
| random-nnlm-en-dim128-module-training | 0.479678 | 0.486417 | 0.623406 | 0.546457 | 0.460638 |
| universal-sentence-encoder | 0.528729 | 0.524502 | 0.671493 | 0.588965 | 0.525615 |
| universal-sentence-encoder-module-training | 0.521536 | 0.509312 | 0.840239 | 0.634202 | 0.516086 |

The best-performing model in our experiments is universal-sentence-encoder, which obtains an accuracy of 52.87%. However, the embedding-based approach does not outperform the simple TF-IDF logistic regression model (57.14% accuracy). We surmise that this is because the simple model architecture is not expressive enough for the task. For example, due to computational constraints, we use pre-trained sentence-level embedding modules even though our data is at the article level. Representing document-length text with a limited number of dimensions may dilute the signal.

Due to computational constraints, we were also unable to train our data on more complex models such as BERT. We believe that such models could lead to greater improvements on the task and hope that the final released datasets encourage future work on this task.


References

- [1] Ivan Beschastnikh, Travis Kriplean, and David W. McDonald. 2008. Wikipedian Self-Governance in Action: Motivating the Policy Lens. In International AAAI Conference on Web and Social Media (ICWSM).

- [2] Andrea Forte, Vanesa Larco, and Amy Bruckman. 2009. Decentralization in Wikipedia Governance. Journal of Management Information Systems 26, 1 (2009), 49–72.

- [3] Dan Cosley, Dan Frankowski, Loren Terveen, and John Riedl. 2007. SuggestBot: using intelligent task routing to help people find work in wikipedia. In Proceedings of the 12th international conference on Intelligent user interfaces. ACM, 32–41.

- [4] Aaron Halfaker and R. Stuart Geiger. 2020. ORES: Lowering Barriers with Participatory Machine Learning in Wikipedia. Proc. ACM Human Computer Interaction. 4, CSCW2, Article 148 (October 2020), 37 pages.

- [5] Maik Anderka, Benno Stein, and Nedim Lipka. 2012. Predicting quality flaws in user-generated content: the case of wikipedia. In Proceedings of the 35th international ACM SIGIR conference on Research and development in information retrieval (SIGIR '12). Association for Computing Machinery, New York, NY, USA, 981–990.

- [6] Shruti Bhosale, Heath Vinicombe, and Raymond Mooney. 2013. Detecting promotional content in wikipedia. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, 1851–1857.

- [7] Aniket Kittur, Bongwon Suh, Bryan A. Pendleton, and Ed H. Chi. 2007. He says, she says: conflict and coordination in Wikipedia. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI '07). Association for Computing Machinery, New York, NY, USA, 453–462.