Research:Post-edit feedback/PEF-2

| This page in a nutshell: This page will host the results from the second iteration of R:PEF |

This page documents the results of the second iteration of the Post-edit feedback experiment. The goal of the experiment was to determine whether receiving feedback had any significant desirable or undesirable effect on the volume and quality of contributions by new registered users, compared to the control group.

We measured the effects on volume by analyzing the number of edits, contribution size and time to threshold for participants in each experimental condition; we measured the impact of the experiment on quality by looking at the rate of reverts and blocks in each experimental condition.

Prior to performing the analysis we generated a clean dataset from the entire population of participants in the experiment to filter out known outliers and focus on genuinely new registered users.

Unless otherwise noted, all analyses refer to a 2-week interval from registration time, which adds a supplementary week after the 1-week treatment period. We report any significant differences that emerge between the in-treatment and post-treatment periods.

Research questions

- RQ1. Does receiving feedback increase the number of edits?

- RQ2. Does feedback lead to larger contributions?

- RQ3. Does receiving feedback shorten the time to the second contribution?

- RQ4. Does feedback affect the rate at which newcomers are blocked?

- RQ5. Does feedback affect the success rate of newcomers?

| Treatment | 1 edit | 5 edits | 10 edits | 25 edits | 50 edits | 100 edits |
|---|---|---|---|---|---|---|
| Control | 3535 | 843 | 389 | 118 | 42 | 13 |
| Historical | 3607 | 853 | 371 | 99 | 33 | 11 |

Edit volume - Edit Count & Bytes Added

The following analysis addresses the question:

- RQ1. Does receiving feedback increase the number of edits?

Edit count is the most direct measure of editor activity. We measured the total number of edits made by new editors in each experimental condition during the first 14 days since registration. New users did not receive the treatment message until after completing their first edit; therefore, the first contribution of each editor included in the experiment was omitted.
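The counting rule above can be sketched in a few lines of Python. This is only an illustration: the function name, the input shapes (a registration datetime and a list of edit datetimes for one editor) and the example dates are assumptions, not part of the original analysis scripts.

```python
from datetime import datetime, timedelta

WINDOW = timedelta(days=14)  # analysis interval stated in the text


def edit_count(registration, edit_times):
    """Count edits within 14 days of registration, omitting the first edit.

    `registration` and `edit_times` are hypothetical inputs: a datetime and
    a list of datetimes for one editor's saved revisions.
    """
    in_window = sorted(t for t in edit_times
                       if registration <= t < registration + WINDOW)
    # The first contribution precedes the treatment message, so drop it.
    return max(len(in_window) - 1, 0)


# Made-up example: four edits, the last one falls outside the 14-day window.
reg = datetime(2012, 8, 1, 12, 0)
edits = [reg + timedelta(hours=h) for h in (1, 5, 30, 400)]
count = edit_count(reg, edits)
```

Here three edits fall inside the window and the first is discarded, so `count` reflects only post-treatment activity.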

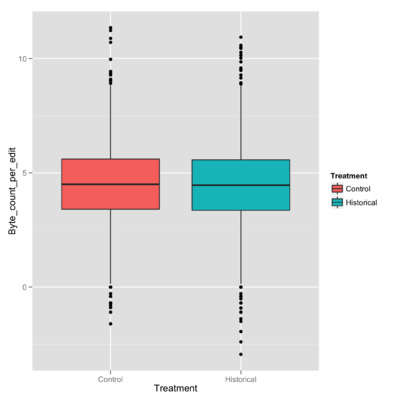

- RQ2. Does feedback lead to larger contributions?

The bytes added are computed in four ways for each editor; the two reported on this page are:

- Net - the net sum of bytes added or removed

- Positive - the sum of bytes added

Below are the means of bytes changed, normalized by edit count, for each group. We applied a logarithmic transformation to the bytes changed in order to work with normally distributed data. To perform the log operation on the "Net" byte count distribution, the net negative samples were omitted (about 15% of total samples). The samples for total bytes added for any given editor were normalized by edit count; for example, if an editor had made five edits contributing 100, 200, 300, 50, and 50 bytes, the sample for this editor would be $(100 + 200 + 300 + 50 + 50) / 5 = 140$. Furthermore, the byte count data was verified to be log-normal under the Shapiro–Wilk test for each treatment and bytes added metric and, given rejection of the null hypothesis (), t-tests were performed over the transformed data sets.
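As a minimal sketch of the normalization and log transformation described above, assuming natural logarithms (which is consistent with the reported log means but is not stated explicitly) and hypothetical function names:

```python
import math


def bytes_per_edit(byte_changes):
    """Bytes changed by one editor, normalized by that editor's edit count."""
    return sum(byte_changes) / len(byte_changes)


def log_samples(per_editor_values):
    """Log-transform per-editor samples, omitting non-positive values.

    Net byte counts can be negative and the log is undefined there, so those
    samples are dropped, as the text describes for the "Net" metric.
    """
    return [math.log(v) for v in per_editor_values if v > 0]


example = bytes_per_edit([100, 200, 300, 50, 50])  # the editor from the text
logged = log_samples([example, -35.0, 12.5])       # -35.0 is a made-up net-negative sample
```

The negative sample is silently excluded from `logged`, which is why the "Net" sample sizes in the output are smaller than the "Positive" ones.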

Finally, the sample group was sub-sampled based on the milestones reached and analysis was executed separately for each of these groups.
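The milestone sub-sampling might look like the sketch below. The mapping of editor id to total edits is a hypothetical input, and the groups are assumed to be nested ("at least N edits"), as the section labels that follow suggest.

```python
MILESTONES = (1, 5, 10, 25, 50, 100)  # thresholds used on this page


def subsample_by_milestone(edit_counts):
    """Group editors by each milestone they reached.

    `edit_counts` is a hypothetical mapping of editor id -> total edits.
    An editor appears in every group whose threshold they met, so the
    groups are nested rather than disjoint.
    """
    return {m: sorted(e for e, n in edit_counts.items() if n >= m)
            for m in MILESTONES}


groups = subsample_by_milestone({"a": 1, "b": 7, "c": 120})
```

Each group would then be analyzed separately with the same tests.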

At least one edit:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 5067"
    [1] "Mean: 5.73810933491218"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 5106"
    [1] "Mean: 4.76615746180964"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.332, df = 6475.449, p-value = 0.1829
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -2.4024405 0.4585367
    sample estimates:
    mean of x mean of y
    4.766157 5.738109

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 4172"
    [1] "Mean: 675.897552233935"
    [1] "Log Mean: 4.84501234490462"
    [1] "Log SD: 1.80334700944111"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 4189"
    [1] "Mean: 631.088538597629"
    [1] "Log Mean: 4.8046557700368"
    [1] "Log SD: 1.83063245067292"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.0154, df = 8357.998, p-value = 0.3099
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.11826257 0.03754942
    sample estimates:
    mean of x mean of y
    4.804656 4.845012
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 4521"
    [1] "Mean: 650.379701700953"
    [1] "Log Mean: 4.76932470827655"
    [1] "Log SD: 1.86205220432389"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 4517"
    [1] "Mean: 662.897631197254"
    [1] "Log Mean: 4.77219397012983"
    [1] "Log SD: 1.84844301028586"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = 0.0735, df = 9035.624, p-value = 0.9414
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.07363828 0.07937680
    sample estimates:
    mean of x mean of y
    4.772194 4.769325

At least five edits:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 1159"
    [1] "Mean: 19.2950819672131"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 1141"
    [1] "Mean: 15.4005258545136"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.2374, df = 1469.371, p-value = 0.2161
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -10.068340 2.279228
    sample estimates:
    mean of x mean of y
    15.40053 19.29508

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 1028"
    [1] "Mean: 335.308856601793"
    [1] "Log Mean: 4.95773516964509"
    [1] "Log SD: 1.47819614339751"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 993"
    [1] "Mean: 430.754503040083"
    [1] "Log Mean: 4.91251031795925"
    [1] "Log SD: 1.58892759783986"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.6619, df = 1996.286, p-value = 0.5081
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.17921677 0.08876707
    sample estimates:
    mean of x mean of y
    4.912510 4.957735
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 1145"
    [1] "Mean: 354.219910966528"
    [1] "Log Mean: 4.96745394691687"
    [1] "Log SD: 1.52978642175134"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 1129"
    [1] "Mean: 583.61043706938"
    [1] "Log Mean: 4.93766493534276"
    [1] "Log SD: 1.60921251009533"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.4523, df = 2262.548, p-value = 0.6511
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.15894169 0.09936366
    sample estimates:
    mean of x mean of y
    4.937665 4.967454

At least ten edits:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 552"
    [1] "Mean: 33.4420289855072"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 519"
    [1] "Mean: 26.1599229287091"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.1091, df = 703.703, p-value = 0.2678
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -20.172774 5.608562
    sample estimates:
    mean of x mean of y
    26.15992 33.44203

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 505"
    [1] "Mean: 313.728768315528"
    [1] "Log Mean: 5.00375381388526"
    [1] "Log SD: 1.38156556001068"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 460"
    [1] "Mean: 262.417910824173"
    [1] "Log Mean: 4.90365018609712"
    [1] "Log SD: 1.38545229416708"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.1225, df = 954.157, p-value = 0.2619
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.27510813 0.07490088
    sample estimates:
    mean of x mean of y
    4.903650 5.003754
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 552"
    [1] "Mean: 345.986279404488"
    [1] "Log Mean: 5.0894331845964"
    [1] "Log SD: 1.39942519838873"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 517"
    [1] "Mean: 598.114833976045"
    [1] "Log Mean: 5.01104570988809"
    [1] "Log SD: 1.35577471269409"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.9301, df = 1065.776, p-value = 0.3525
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.24376173 0.08698678
    sample estimates:
    mean of x mean of y
    5.011046 5.089433

At least twenty-five edits:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 159"
    [1] "Mean: 79.6352201257862"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 130"
    [1] "Mean: 59.8307692307692"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.8755, df = 208.599, p-value = 0.3823
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -64.39786 24.78896
    sample estimates:
    mean of x mean of y
    59.83077 79.63522

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 145"
    [1] "Mean: 226.068984205479"
    [1] "Log Mean: 4.96643026742924"
    [1] "Log SD: 1.06034302486461"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 114"
    [1] "Mean: 209.51882457994"
    [1] "Log Mean: 4.89118138165821"
    [1] "Log SD: 1.0920944340629"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.5575, df = 239.385, p-value = 0.5777
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.3411229 0.1906251
    sample estimates:
    mean of x mean of y
    4.891181 4.966430
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 159"
    [1] "Mean: 274.915579020194"
    [1] "Log Mean: 5.08194732006123"
    [1] "Log SD: 1.26429886569131"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 130"
    [1] "Mean: 235.993573979585"
    [1] "Log Mean: 5.02020573080006"
    [1] "Log SD: 1.03040697199858"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.4574, df = 286.998, p-value = 0.6477
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.3274235 0.2039403
    sample estimates:
    mean of x mean of y
    5.020206 5.081947

At least fifty edits:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 45"
    [1] "Mean: 195.955555555556"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 41"
    [1] "Mean: 116.560975609756"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.0463, df = 54.669, p-value = 0.3
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -231.48240 72.69324
    sample estimates:
    mean of x mean of y
    116.5610 195.9556

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 40"
    [1] "Mean: 239.267255195948"
    [1] "Log Mean: 4.95482642279293"
    [1] "Log SD: 1.16683813935989"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 40"
    [1] "Mean: 187.267537169432"
    [1] "Log Mean: 4.71418715169494"
    [1] "Log SD: 1.06172502152303"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.9647, df = 77.315, p-value = 0.3377
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.7373014 0.2560228
    sample estimates:
    mean of x mean of y
    4.714187 4.954826
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 45"
    [1] "Mean: 277.629441771944"
    [1] "Log Mean: 5.22840919178115"
    [1] "Log SD: 0.931856342633689"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 41"
    [1] "Mean: 229.291974855973"
    [1] "Log Mean: 4.94473999286836"
    [1] "Log SD: 1.00534667958695"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.3531, df = 81.65, p-value = 0.1797
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.7007350 0.1333966
    sample estimates:
    mean of x mean of y
    4.944740 5.228409

At least one hundred edits:

R Output Edit Count

    [1] "Processing Metric edit_count ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 14"
    [1] "Mean: 484.285714285714"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 12"
    [1] "Mean: 247.666666666667"
    [1] "T-test for treatment1 historical under edit_count"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -1.037, df = 16.079, p-value = 0.3151
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -720.1616 246.9235
    sample estimates:
    mean of x mean of y
    247.6667 484.2857

R Output Bytes Added (Net & Positive)

    [1] "Processing Metric bytes_added_net ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 11"
    [1] "Mean: 229.406669680937"
    [1] "Log Mean: 4.91198268865895"
    [1] "Log SD: 1.1065715024704"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 11"
    [1] "Mean: 195.969411899016"
    [1] "Log Mean: 4.58418080579895"
    [1] "Log SD: 1.32018683152677"
    [1] "T-test for treatment1 historical under bytes_added_net"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.6311, df = 19.408, p-value = 0.5353
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -1.4133482 0.7577444
    sample estimates:
    mean of x mean of y
    4.584181 4.911983
    [1] "Processing Metric bytes_added_pos ..."
    [1] "Processing treatment control ..."
    [1] "Sample Size: 14"
    [1] "Mean: 296.674331844149"
    [1] "Log Mean: 5.15561020776912"
    [1] "Log SD: 1.06501799606155"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 12"
    [1] "Mean: 259.162261249939"
    [1] "Log Mean: 4.84816581571695"
    [1] "Log SD: 1.27657700824566"
    [1] "T-test for treatment1 historical under bytes_added_pos"
    Welch Two Sample t-test
    data: t1 and ctrl
    t = -0.6603, df = 21.55, p-value = 0.5161
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -1.2742982 0.6594094
    sample estimates:
    mean of x mean of y
    4.848166 5.155610

There were no significant differences in bytes added per edit for either the net or the positive metric, nor was there a significant difference in edit counts. However, the control group had consistently higher mean edit counts at every milestone threshold. Given more data, the observed effect size may prove to be significant.
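The reported Welch statistics can be cross-checked from the printed summary values alone. The sketch below recomputes the t statistic and the Welch–Satterthwaite degrees of freedom for the log-scale net bytes added in the "at least one edit" group. The function name is hypothetical, and the p-value uses a normal approximation, which is an assumption that is reasonable only because the degrees of freedom are large here; it would not be appropriate for the small high-milestone groups.

```python
import math
from statistics import NormalDist


def welch_from_summary(mean_x, sd_x, n_x, mean_y, sd_y, n_y):
    """Welch t statistic and Welch-Satterthwaite df from summary statistics."""
    vx, vy = sd_x ** 2 / n_x, sd_y ** 2 / n_y
    t = (mean_x - mean_y) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (n_x - 1) + vy ** 2 / (n_y - 1))
    return t, df


# Log mean, log SD and sample size taken from the R output above.
t, df = welch_from_summary(4.8046557700368, 1.83063245067292, 4189,   # historical (x)
                           4.84501234490462, 1.80334700944111, 4172)  # control (y)

# Normal approximation to the two-sided p-value; valid only for large df.
p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
```

The recomputed values agree with the R output (t = -1.0154, df = 8357.998, p-value = 0.3099) to the printed precision.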

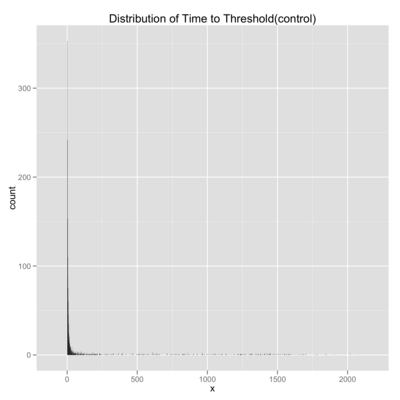

Time to threshold

We measured the time to threshold as the number of minutes between historical milestones. Only editors that reached successive milestones were included in this analysis. Using this metric helps us address the following:

- RQ3. Does receiving milestone feedback shorten the time to reach the next milestone?
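A minimal sketch of the time-to-threshold metric, assuming each editor's edits are available as a list of timestamps. The function name, the input shape and the example timestamps are illustrative assumptions.

```python
from datetime import datetime, timedelta


def minutes_between_milestones(edit_times, start, end):
    """Minutes between an editor's `start`-th and `end`-th edits (1-indexed).

    Returns None when the editor never reached the `end`-th edit, mirroring
    the rule that only editors reaching successive milestones are included.
    """
    ordered = sorted(edit_times)
    if len(ordered) < end:
        return None
    return (ordered[end - 1] - ordered[start - 1]).total_seconds() / 60


# Made-up editor with six edits.
t0 = datetime(2012, 8, 1, 9, 0)
times = [t0 + timedelta(minutes=m) for m in (0, 10, 25, 60, 90, 300)]
first_to_fifth = minutes_between_milestones(times, 1, 5)
fifth_to_tenth = minutes_between_milestones(times, 5, 10)
```

Editors for whom the function returns `None` would simply be left out of the sample for that milestone interval, which is why the sample sizes shrink at higher milestones.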

Milestone: 1st Edit - 5th Edit

R Output

    [1] "Processing treatment control ..."
    [1] "Sample Size: 1328"
    [1] "Mean: 428.634789156626"
    [1] "SD: 552.596301197372"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 1303"
    [1] "Mean: 404.027628549501"
    [1] "SD: 535.706250327613"
    [1] "T-test for treatment1 historical under time_diff"
    Welch Two Sample t-test
    data: ctrl and t1
    t = 1.1598, df = 2628.62, p-value = 0.2463
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -16.99781 66.21213
    sample estimates:
    mean of x mean of y
    428.6348 404.0276

Milestone: 5th Edit - 10th Edit

R Output

    [1] "Processing treatment control ..."
    [1] "Sample Size: 682"
    [1] "Mean: 450.475073313783"
    [1] "SD: 547.879891974263"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 647"
    [1] "Mean: 411.343122102009"
    [1] "SD: 533.742223682875"
    [1] "T-test for treatment1 historical under time_diff"
    Welch Two Sample t-test
    data: ctrl and t1
    t = 1.3188, df = 1326.063, p-value = 0.1875
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -19.07783 97.34173
    sample estimates:
    mean of x mean of y
    450.4751 411.3431

Milestone: 10th Edit - 25th Edit

R Output

    [1] "Processing treatment control ..."
    [1] "Sample Size: 241"
    [1] "Mean: 694.435684647303"
    [1] "SD: 557.765681286775"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 203"
    [1] "Mean: 668.704433497537"
    [1] "SD: 557.733693265889"
    [1] "T-test for treatment1 historical under time_diff"
    Welch Two Sample t-test
    data: ctrl and t1
    t = 0.4843, df = 429.28, p-value = 0.6284
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -78.70409 130.16659
    sample estimates:
    mean of x mean of y
    694.4357 668.7044

Milestone: 25th Edit - 50th Edit

R Output

    [1] "Processing treatment control ..."
    [1] "Sample Size: 78"
    [1] "Mean: 764.538461538462"
    [1] "SD: 506.461693076038"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 67"
    [1] "Mean: 697.328358208955"
    [1] "SD: 533.078747609886"
    [1] "T-test for treatment1 historical under time_diff"
    Welch Two Sample t-test
    data: ctrl and t1
    t = 0.7745, df = 137.283, p-value = 0.4399
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -104.3783 238.7985
    sample estimates:
    mean of x mean of y
    764.5385 697.3284

Milestone: 50th Edit - 100th Edit

R Output

    [1] "Processing treatment control ..."
    [1] "Sample Size: 30"
    [1] "Mean: 631.8"
    [1] "SD: 518.036638299541"
    [1] "Processing treatment historical ..."
    [1] "Sample Size: 17"
    [1] "Mean: 976.647058823529"
    [1] "SD: 581.519877258773"
    [1] "T-test for treatment1 historical under time_diff"
    Welch Two Sample t-test
    data: ctrl and t1
    t = -2.0307, df = 30.251, p-value = 0.05115
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -691.53713 1.84301
    sample estimates:
    mean of x mean of y
    631.8000 976.6471

The table below contains the mean values and sample sizes of time-to-threshold for each milestone event:

| Measure | 1st - 5th Edit | 5th - 10th Edit | 10th - 25th Edit | 25th - 50th Edit | 50th - 100th Edit (.) |
|---|---|---|---|---|---|
| Control sample size | 1328 | 682 | 241 | 78 | 30 |
| Historical sample size | 1303 | 647 | 203 | 67 | 17 |
| Control mean (minutes) | 428.63 | 450.48 | 694.44 | 764.54 | 631.80 |
| Historical mean (minutes) | 404.03 | 411.34 | 668.70 | 697.33 | 976.65 |

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

The only milestone interval that came close to a significant result was the 50th-100th edit interval (p = 0.05115). However, this result is marginal at best, and the sample sizes are small (30 control and 17 historical editors). This may motivate a greater interest in investigating more targeted responses among more active early editors. Note also that in this interval the time to threshold was shorter for the control group, which received no feedback (631.8 vs. 976.65 minutes), whereas in every earlier interval the historical group was slightly faster on average.

Quality

While feedback may increase the volume of newcomer edits, it might do so at the cost of decreased quality. This is concerning, since increasing the number of edits that will need to be reverted is counterproductive. Similarly, increasing the number of newcomers who eventually need to be blocked would increase the burden on en:WP:AIV.

To explore whether the changes in the volume of newcomer work were coming at the cost of decreased quality, we examined the work that newcomers performed in their first two weeks of editing. We identified two aspects of newcomers and the work that they perform: the proportion of newcomers who were eventually blocked from editing and the rate at which newcomers' contributions were rejected (reverted or deleted). We used these metrics to answer the following questions:

- RQ4. Does feedback affect the rate at which newcomers are blocked?

- RQ5. Does feedback affect the success rate of newcomers?

Block rate

To determine which newcomers were blocked, we processed the logging table of the enwiki database to look for block events for newcomers in the experimental conditions. We considered a newcomer blocked if there was any event for them with log_type="block" AND log_action="block" between the beginning of the experimental period and midnight GMT Sept. 5th. The Blocked newcomers figure plots the proportion of newcomers who were blocked in each experimental condition. As the plot suggests, the proportions differ insignificantly (around 0.072), which suggests that the experimental treatment had no meaningful effect on the rate at which newcomers were blocked from editing.

To make sure that this result wasn't due to blocks of editors who hadn't earned them through a series of bad-faith edits, we examined the relationship between the number of edits these newcomers saved and the proportion of them that were blocked. Blocked newcomers by revisions shows a steady increase in the proportion of newcomers that were blocked between 1 and 4 revisions. This seems likely due to the 4 levels of warnings that are used on the English Wikipedia.
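The block detection rule described above can be sketched as a filter over log rows. Only the `log_type` and `log_action` fields and their values come from the text; the row layout, the `target` and `timestamp` keys, the user names and the dates are made up for illustration.

```python
from datetime import datetime


def was_blocked(log_rows, user, start, end):
    """True if `user` has a block event between `start` and `end`, inclusive.

    `log_rows` is a hypothetical list of dicts loosely modeled on rows of the
    logging table; the "target" and "timestamp" keys are illustrative names.
    """
    return any(row["log_type"] == "block"
               and row["log_action"] == "block"
               and row["target"] == user
               and start <= row["timestamp"] <= end
               for row in log_rows)


rows = [
    {"log_type": "block", "log_action": "block", "target": "UserA",
     "timestamp": datetime(2012, 8, 20)},
    # An unblock event shares log_type but must not count as a block.
    {"log_type": "block", "log_action": "unblock", "target": "UserB",
     "timestamp": datetime(2012, 8, 21)},
]
period = (datetime(2012, 8, 1), datetime(2012, 9, 5))  # illustrative dates
blocked = {u: was_blocked(rows, u, *period) for u in ("UserA", "UserB")}
```

Checking both fields matters because other actions, such as unblocks, are logged under the same `log_type`.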

R Output Block Rates

    [1] "T-test for treatment1 historical under block_count"
    Welch Two Sample t-test
    data: ctrl and t1
    t = 0.4718, df = 42322.56, p-value = 0.6371
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.0004647933 0.0007594753
    sample estimates:
    mean of x mean of y
    0.0009875657 0.0008402246

The observed block rates for the two groups were extremely small: control = 0.099% and historical = 0.084%. Although the block rate for the historical group was smaller, the difference was not significant.

Success rate

We examined the en:SHA1 checksum associated with the content of revisions to determine which revisions were reverted (see Research:Revert detection) by other editors. By comparing the number of revisions saved with the number of revisions reverted, we can build a proportion of reverted revisions (see Research:Metrics/revert_rate) and the success rate (the proportion of revisions saved by an editor that were not reverted). We use the success rate of an editor as a proxy for the quality of their work and a direct measure of the additional work their activities necessitate from Wikipedians.
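The following sketch illustrates checksum-based revert detection and the resulting success rate for one page history. It is a simplified illustration, not the procedure documented at Research:Revert detection: the look-back window (`radius=15`), the function names and the example history are assumptions, and self-reverts are not filtered out.

```python
import hashlib


def sha1(text):
    """SHA1 hex digest of a revision's content."""
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def reverted_flags(checksums, radius=15):
    """Flag revisions undone by a later identity revert.

    Revision i is an identity revert when its checksum equals that of an
    earlier revision j within `radius` revisions; every revision strictly
    between j and i is then marked as reverted. `radius` is an assumed
    window, not a value taken from this page.
    """
    flags = [False] * len(checksums)
    for i, digest in enumerate(checksums):
        for j in range(i - 2, max(i - radius, 0) - 1, -1):
            if checksums[j] == digest:
                for k in range(j + 1, i):
                    flags[k] = True
                break
    return flags


def success_rate(users, flags, user):
    """Proportion of `user`'s revisions that were not reverted."""
    own = [f for u, f in zip(users, flags) if u == user]
    return 1 - sum(own) / len(own)


# Made-up page history: the third revision is undone by the fourth.
texts = ["stub", "stub + fact", "VANDALISM", "stub + fact", "stub + fact + ref"]
users = ["veteran", "newcomer", "newcomer", "veteran", "newcomer"]
flags = reverted_flags([sha1(t) for t in texts])
rate = success_rate(users, flags, "newcomer")
```

In this example the newcomer saved three revisions and one of them was reverted, so the success rate is 2/3 and the revert rate is 1/3.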

To look for evidence of a causal relationship between PEF and the quality of newcomer work, we calculated the mean success rate of newcomers in each experimental condition.

R Output Revert Rates

    [1] "Treatment: control"
    [1] "Samples: 5235"
    [1] "Mean: 0.0245267195984868"
    [1] "Treatment: historical"
    [1] "Samples: 5279"
    [1] "Mean: 0.0292926869221453"
    [1] "T-test for treatment1 historical under revert_rate"
    Welch Two Sample t-test
    data: ctrl and t1
    t = -1.625, df = 10420.69, p-value = 0.1042
    alternative hypothesis: true difference in means is not equal to 0
    95 percent confidence interval:
    -0.0105149549 0.0009830203
    sample estimates:
    mean of x mean of y
    0.02452672 0.02929269

The revert rate for the control group was actually lower (2.45% vs. 2.93% for the historical group); however, the difference is not significant (p = 0.1042).