Research:Measuring edit productivity

One critical measure of wiki editing activity is productivity. Such measures are commonly used as outcome measures in intervention experiments (cf. [1] and [2]). Yet measures like R:productive edit are simple booleans: they don't differentiate between major contributions that survive the review of many other editors and minor changes that are edited out in the next refactoring. In this project, we'll apply a content persistence strategy to measuring the article-writing productivity of editors. We identify meaningful survival thresholds and propose a measurement strategy for approximating "productivity" that is more robust and meaningful than past measures.

Methods

We use the behavior of editors around contributed content to learn about the quality and productivity of a submission. This rests on a simple pair of assumptions: content contributed to an article that is not removed by other editors is high quality, and contributing content that is not removed is a productive activity. These assumptions have been stated and used for such measurements in recent work (e.g. [3][4][5]).

Measurement strategies

We seek to experiment with two strategies for identifying high quality content and measuring productivity: thresholding and log-persistence measures.

- Thresholding

  - Assumption: Newly contributed content that survives some minimum amount of time or number of future edits by other editors is high quality. This assumption works well if high quality content is just as likely as moderate quality content to be removed in some future rewrite of the page. Wikipedia's primary review mechanisms operate at the boundary, as new contributions are made[6][7], so this assumption seems reasonable.

- Log-persistence

  - Assumption: Newly contributed content that survives longer does so for a reason, but with diminishing returns. Recent work has shown a diminishing-returns pattern of content quality vs. revisions survived for small numbers of edits[8]. That work shows what appears to be a roughly linear relationship between log(revisions persisted) and the quality of a contribution.
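Here is a minimal Python sketch that makes the contrast between the two strategies concrete. It assumes each added token already has a count of the follow-up revisions it survived. The function names, the threshold of 5 revisions, and the log(1 + n) weighting are illustrative assumptions, not the calibrated choices of this study.

```python
import math
from typing import Iterable


def threshold_score(revisions_persisted: Iterable[int], threshold: int = 5) -> int:
    """Thresholding: count tokens that survived at least `threshold` follow-up revisions."""
    return sum(1 for n in revisions_persisted if n >= threshold)


def log_persistence_score(revisions_persisted: Iterable[int]) -> float:
    """Log-persistence: weight each token by log(1 + n).

    Longer survival always adds value, but with diminishing returns.
    A token removed in the very next revision (n = 0) contributes 0.
    """
    return sum(math.log1p(n) for n in revisions_persisted)


# Toy example: one edit adding six tokens with different survival counts.
survival = [0, 1, 2, 5, 20, 100]
print(threshold_score(survival))                  # 3
print(round(log_persistence_score(survival), 2))  # 11.24
```

Thresholding treats the tokens that survived 5 and 100 revisions identically. The log weighting still rewards the longer-lived token, but far less than linearly.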

In this project, we'll compare measurements using both strategies.

Temporal concerns

A small proportion of Wikipedia articles are constantly being edited, while the vast majority rarely change. Our measurement strategy assumes that the way other Wikipedians react to a contribution indicates its quality. As a result, we may need to wait an excessively long time to judge the quality and productivity of contributions to articles that are seldom edited. So we use the time a contribution remains visible to supplement revisions persisted as an indicator of quality.
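The following minimal sketch computes both signals for a single token. It assumes we already know the revision that added the token and the revision, if any, that removed it. The `Revision` record and `persistence_stats` function are hypothetical. The python-mwpersistence utilities listed under See also do this kind of token tracking at scale, but this is not their API. The sketch counts only revisions by editors other than the token's author, and it flags tokens that are still present as censored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass
class Revision:  # hypothetical minimal revision record
    timestamp: datetime
    user: str


def persistence_stats(
    revisions: List[Revision],
    added_at: int,
    removed_at: Optional[int],
    now: datetime,
) -> Tuple[int, timedelta, bool]:
    """Return (revisions persisted, time visible, censored) for one token.

    `revisions` is a page's history in chronological order. The token was
    added in revisions[added_at] and removed in revisions[removed_at], or
    removed_at is None if it is still present.
    """
    author = revisions[added_at].user
    end = removed_at if removed_at is not None else len(revisions)
    # Count only follow-up revisions by *other* editors (non-self persistence).
    persisted = sum(1 for r in revisions[added_at + 1:end] if r.user != author)
    # Time visible runs until removal, or until `now` for surviving tokens.
    end_time = revisions[removed_at].timestamp if removed_at is not None else now
    visible = end_time - revisions[added_at].timestamp
    # Surviving tokens are right-censored: we have not yet seen them removed.
    return persisted, visible, removed_at is None


t0 = datetime(2015, 1, 1)
history = [
    Revision(t0, "A"),
    Revision(t0 + timedelta(hours=1), "A"),
    Revision(t0 + timedelta(days=2), "B"),
    Revision(t0 + timedelta(days=30), "C"),
]
print(persistence_stats(history, 0, None, now=t0 + timedelta(days=60)))
# (2, datetime.timedelta(days=60), True)
```

On a seldom-edited page like this toy history, a token can stay visible for 60 days while persisting through only 2 revisions by others. That gap is why time visible is a useful supplement.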

Calibration

For simple thresholding and log-persistence measures based on revisions persisted, we can rely on previous work[8] to calibrate. However, we'll need to perform our own analysis to learn how long a contributed word must be visible for us to consider it high quality (thresholding), or what function we should use to weight time visible (log-persistence). This analysis compares time visible and revisions persisted on relatively inactive articles.

Results

Calibration

First, we look at the persistence of content tokens through revisions and over time. The graphs below show the hazard of permanent removal from the article.

#Revisions persisted (hazard) shows that the hazard of removal drops below 5% after 2 follow-up revisions, but it continues to fall with additional revisions persisted. So a good threshold for filtering out damage would likely be set between 2 and 5 revisions. However, if the hazard decay reflects some quality aspect of the contribution, more information can be captured by measuring and log-weighting long-term revisions persisted.
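A hazard curve like this one can be estimated with a simple life table. The sketch below summarizes each token as (revisions persisted, permanently removed?) and treats tokens that are still present as right-censored. It then picks the first step whose hazard drops below 5% as one candidate threshold. The function names and the toy data are illustrative assumptions, not the study's code or results.

```python
from typing import Dict, Iterable, Optional, Tuple


def discrete_hazard(
    observations: Iterable[Tuple[int, bool]], max_k: int
) -> Dict[int, float]:
    """Life-table hazard of permanent removal by follow-up revisions survived.

    Each observation is (revisions_persisted, removed) for one token.
    A removed token with n revisions persisted was at risk at steps 0..n
    and was removed at step n. A still-present (censored) token with n
    revisions persisted was at risk only at steps 0..n-1.
    """
    obs = list(observations)
    hazard: Dict[int, float] = {}
    for k in range(max_k + 1):
        at_risk = sum(1 for n, removed in obs if n > k or (removed and n == k))
        events = sum(1 for n, removed in obs if removed and n == k)
        if at_risk:
            hazard[k] = events / at_risk
    return hazard


def first_step_below(hazard: Dict[int, float], cutoff: float = 0.05) -> Optional[int]:
    """Smallest step whose hazard falls below `cutoff`: one candidate threshold."""
    return next((k for k in sorted(hazard) if hazard[k] < cutoff), None)


# Toy data: (revisions persisted, permanently removed?)
tokens = [(0, True), (0, True), (1, True), (2, False),
          (4, False), (6, True), (9, False), (12, False)]
h = discrete_hazard(tokens, max_k=5)
print(first_step_below(h))  # 2
```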

#Time visible (hazard) shows that the hazard of removal drops below 5% after about an hour, but that could be dominated by highly active pages. There's also an interesting trend of declining steps every 24 hours, which could indicate the review patterns employed by Wikipedians.

Measurements

Anonymous vs registered editors

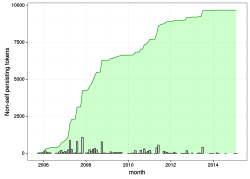

We analyzed the English Wikipedia to look at overall productive contributions over time. We focused on two key cross-sections of Wikipedia editors: registered editors and anonymous editors (aka IP editors). #Persistent words by user registration shows a striking stability in the overall persistent words added to Wikipedia since mid-2006, at about 130 million non-self-persisting tokens added per month by registered editors. This is surprising given the well-studied decline since 2007 in the overall number of editors working on Wikipedia[9]. Still, we can see a clearly declining trend in the number of persisting words contributed by anonymous editors: anons added about 30 million persisting words per month in 2007, declining to about 23 million per month by 2015. #Unregisted persistent words prop shows the proportion of persisting words added by anonymous editors. This proportion clearly drops over time, from more than 25% in 2005 to about 13% in 2015.
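The following minimal sketch shows the monthly aggregation behind a proportion like this one. It assumes each edit has already been reduced to a (month, is_registered, persisting_tokens) record, for example by counting its tokens that pass a persistence threshold. The record layout, function name, and toy values are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def anon_share_by_month(
    records: Iterable[Tuple[str, bool, int]]
) -> Dict[str, float]:
    """Share of persisting tokens added by anonymous editors, per month.

    Each record is (month "YYYY-MM", is_registered, persisting_tokens) for one edit.
    """
    totals: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # [anon, registered]
    for month, is_registered, tokens in records:
        totals[month][1 if is_registered else 0] += tokens
    return {
        month: anon / (anon + reg)
        for month, (anon, reg) in sorted(totals.items())
        if anon + reg > 0
    }


edits = [("2015-01", True, 80), ("2015-01", False, 20),
         ("2015-02", True, 90), ("2015-02", False, 10)]
print(anon_share_by_month(edits))  # {'2015-01': 0.2, '2015-02': 0.1}
```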

Discussion

These trends show two very interesting things:

- Registered editors in English Wikipedia are not getting less productive despite a dramatic reduction in the active population of editors.

- Anonymous editors contribute substantially to overall productivity; however, their proportion of the overall contribution has been steadily declining since the beginning of 2006.

Productive efficiency

Given the decline of the editor population[9], and past work suggesting a corresponding decline in the total labor hours contributed to Wikipedia[10], we found the stable level of productivity curious. So we performed a direct comparison between the monthly labor hours approximation and the observed rate of persisting tokens added. #Monthly persisting tokens (smoothed) shows a time-limited (post-2004[11]) plot of the total number of persisting tokens added by non-bots, combining the anonymous and registered productivity trends above. #Monthly labor hours (smoothed) shows a clear decline in the total number of labor hours over the same period in which #Monthly persisting tokens (smoothed) is stable. Finally, #Monthly persisting tokens per labor hours (smoothed) shows the underlying rate of persistent tokens added per labor hour. We can see a clear crash as Wikipedia becomes very popular and the editing population grows exponentially. But after the crash, and with the changes since 2007, there's a clear recovery as Wikipedians approach roughly 2004 levels of productive efficiency.
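Geiger and Halfaker[10] approximate labor hours by grouping an editor's edits into sessions. The sketch below follows that general idea and then divides persisting tokens by the estimated hours to get productive efficiency. The one-hour inactivity cutoff, the fixed 10-minute padding per session, and the function names are assumptions for illustration, not the exact parameters used in this analysis.

```python
from datetime import datetime, timedelta
from typing import List


def labor_hours(
    edit_times: List[datetime],
    cutoff: timedelta = timedelta(hours=1),      # assumed inactivity cutoff
    padding: timedelta = timedelta(minutes=10),  # assumed extra time per session
) -> float:
    """Approximate one editor's labor hours from their edit timestamps.

    Consecutive edits no more than `cutoff` apart belong to the same session.
    Each session counts from its first to its last edit, plus `padding` to
    account for work done before the first saved edit.
    """
    if not edit_times:
        return 0.0
    times = sorted(edit_times)
    total = timedelta(0)
    start = prev = times[0]
    for t in times[1:]:
        if t - prev > cutoff:  # long gap: close the current session
            total += (prev - start) + padding
            start = t
        prev = t
    total += (prev - start) + padding  # close the final session
    return total / timedelta(hours=1)


def tokens_per_labor_hour(persisting_tokens: int, hours: float) -> float:
    """Productive efficiency: persisting tokens added per labor hour."""
    return persisting_tokens / hours if hours > 0 else 0.0


day = datetime(2015, 3, 1)
edits = [day.replace(hour=10), day.replace(hour=10, minute=20),
         day.replace(hour=10, minute=50), day.replace(hour=15),
         day + timedelta(days=1, hours=9)]
hours = labor_hours(edits)  # sessions of 60, 10, and 10 minutes
print(round(hours, 3))                              # 1.333
print(round(tokens_per_labor_hour(400, hours), 1))  # 300.0
```

Summing `labor_hours` over all editors in a month gives the denominator for a monthly tokens-per-labor-hour series.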

Discussion

This result is surprising. It implies that as Wikipedia's editor population declined, efficiency increased proportionally, enough to maintain a nearly constant overall level of productivity.
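The arithmetic behind this implication can be written out explicitly. The notation is introduced only for this illustration. P_t is the number of persisting tokens added in month t, H_t is the labor hours in month t, and E_t is efficiency, defined as their ratio.

```latex
E_t = \frac{P_t}{H_t}
\qquad\Longrightarrow\qquad
\frac{E_{t'}}{E_t} = \frac{P_{t'} / P_t}{H_{t'} / H_t}
\qquad\text{so if } P_{t'} = P_t:\quad
\frac{E_{t'}}{E_t} = \frac{H_t}{H_{t'}}
```

Take a hypothetical example. If monthly labor hours fell by 20% (H_t'/H_t = 0.8) while persisting tokens held steady, efficiency would have to rise by a factor of 1/0.8 = 1.25, i.e. by 25%.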

Bots and tools?

Maybe the source of this new efficiency is bots and tools. To check this hypothesis, we brought bots and "tool-assisted" edits back into the analysis and dug into some of the most productive tools. #Monthly persisting tokens by editor type shows that bot and tool-assisted editors contribute a small proportion of overall productive contributions compared to anonymous and registered editors contributing the regular way. #Monthly persisting token proportion shows that the proportion of productive contributions made via tool assistance has been increasing steadily since 2006. #Monthly persisting token proportion highlights the top 5 "tools" used to contribute productive content. Note the fall of reflinks (a closed-source tool) and the rise of refill (an open-source replacement). AutoWikiBrowser, a bot-like force multiplier for mass editing, remains the leader in productive tool-assisted activities.
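The text does not say how tool-assisted edits were identified. One possible approach is to match edit summaries against patterns associated with each tool, as sketched below with that caveat. The patterns, labels, and the `editor_type` function are hypothetical. They would need checking against real summaries, or against change tags where available, before use.

```python
import re
from typing import Dict, Optional

# Hypothetical edit-summary patterns for a few of the tools named above.
TOOL_PATTERNS: Dict[str, re.Pattern] = {
    "awb": re.compile(r"\busing\s+(?:\[\[[^|\]]*\|)?AWB\b", re.IGNORECASE),
    "reflinks": re.compile(r"\breflinks\b", re.IGNORECASE),
    "refill": re.compile(r"\brefill\b", re.IGNORECASE),
}


def editor_type(is_bot: bool, is_anon: bool, summary: Optional[str]) -> str:
    """Classify an edit as bot, tool-assisted, anonymous, or registered."""
    if is_bot:
        return "bot"
    if summary:
        for tool, pattern in TOOL_PATTERNS.items():
            if pattern.search(summary):
                return "tool:" + tool
    return "anon" if is_anon else "registered"


print(editor_type(False, False, "Typo fixing, using [[Project:AWB|AWB]]"))  # tool:awb
print(editor_type(False, True, "Filled in 2 bare references with reFill"))   # tool:refill
print(editor_type(False, False, "copyedit"))                                 # registered
```

Checking the bot flag first keeps bot edits out of the tool categories. A tool-assisted edit by an anonymous editor is counted under its tool rather than as "anon".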

Discussion

Tools and bots don't explain the increasing overall efficiency, but tool-assisted productive contributions are certainly on the rise. Most tools that editors use to contribute lots of new productive content are force multipliers (awb, autoed, ohconfucius) and a couple are reference helpers (refill and reflinks).

Individual contributions

To get a sense of an editor's overall productivity, we also took some measurements of individual contribution efforts. #EpochFail's productivity shows the productive contributions to English Wikipedia by the author of this study. This plot showcases the kinds of contributions this measure does not see. I'm primarily a researcher and tool developer, and this measure does not capture the majority of my contributions to Wikipedia. Similarly, #Guillom's productivity shows only a partial picture. User:Guillom is also active on French Wikipedia, and this measure of English Wikipedia does not capture his contributions there. #Jimbo_Wales' productivity shows the productivity of one of our favorite contributors; note his somewhat sporadic and bursty contribution pattern. #DGG's productivity shows the contribution level of a very prolific long-time contributor to English Wikipedia. When viewing his own productivity graph, DGG reflected that he had intentionally kept his activity at a sustained level as a personal goal. As of the end of this analysis, DGG had added more than 2.5 million persisting tokens to English Wikipedia articles!

Discussion

This measure misses many of the productive activities that Wikipedians engage in. However, it does show some interesting dynamics in individual contribution patterns. Given what is and is not measured, comparing DGG's overall productivity to Guillom's with this strategy might be difficult.

See also

- R:Content persistence

- R:Editor productivity

- github.com/mediawiki-utilities/python-mwpersistence -- Content persistence tracking utilities

References

- [1] Research:VisualEditor's effect on newly registered editors

- [2] Research:Teahouse long term new editor retention

- [3] Halfaker, A., Kittur, A., & Riedl, J. (2011, October). Don't bite the newbies: how reverts affect the quantity and quality of Wikipedia work. In Proceedings of the 7th International Symposium on Wikis and Open Collaboration (pp. 163-172). ACM.

- [4] Priedhorsky, R., Chen, J., Lam, S. T. K., Panciera, K., Terveen, L., & Riedl, J. (2007, November). Creating, destroying, and restoring value in Wikipedia. In Proceedings of the 2007 International ACM Conference on Supporting Group Work (pp. 259-268). ACM.

- [5] Adler, B., de Alfaro, L., & Pye, I. (2010). Detecting Wikipedia vandalism using WikiTrust. Notebook papers of CLEF, 1, 22-23.

- [6] Geiger, R. S., & Ribes, D. (2010, February). The work of sustaining order in Wikipedia: the banning of a vandal. In Proceedings of the 2010 ACM Conference on Computer Supported Cooperative Work (pp. 117-126). ACM.

- [7] Geiger, R. S., & Halfaker, A. (2013, August). When the levee breaks: without bots, what happens to Wikipedia's quality control processes? In Proceedings of the 9th International Symposium on Open Collaboration (p. 6). ACM.

- [8] Biancani, S. (2014, August). Measuring the Quality of Edits to Wikipedia. In Proceedings of The International Symposium on Open Collaboration (p. 33). ACM.

- [9] R:The Rise and Decline

- [10] Geiger, R. S., & Halfaker, A. (2013, February). Using edit sessions to measure participation in Wikipedia. In Proceedings of the 2013 Conference on Computer Supported Cooperative Work (pp. 861-870). ACM.

- [11] Note that pre-2004 data was removed because it was excessively messy.