Grants:Project/Maximilianklein/humaniki/Final

This project is funded by a Project Grant


Welcome to this project's final report! This report shares the outcomes, impact and learnings from the grantee's project.

Part 1: The Project

Summary

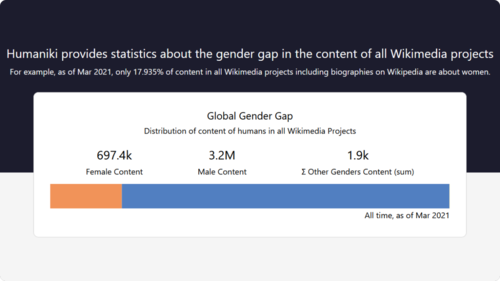

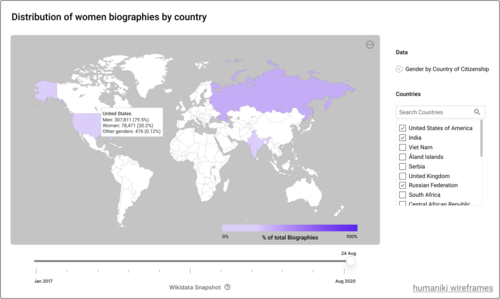

- We created the Humaniki web app, a data dashboard describing the diversity of humans represented in all Wikimedia projects.

- It is the merging and maturing of two previous prototypes, this time co-designed with the editor community.

- The user-led design and renewed developer energy resulted in more detailed statistics, an elegant interface, and a public API.

- Humaniki has already been used over 1000 times by editors and readers, and we already have Wikiproject and community-developer data consumers.

- The best way to understand it is to start digging yourself at [1].

Project Goals

1) Engineer reliable, polished diversity data collection for the future.


ORIGINAL GOALS

Merging two overlapping single-maintainer tools into one comprehensive multi-maintainer tool will mature these tools out of their prototype phases. This will benefit Wikimedia's wikiprojects and communities by solidifying diversity data collection for years to come. In addition, the result will be a more polished, more capable portal for diversity data that will raise the public profile of this corner of feminist data enthusiasts.

FINAL RESULT

We completed our goal of making reliable, polished diversity data collection by successfully merging the two previous projects, WHGI and Denelezh, into humaniki. The new codebase is a superset of the previous features, built by 3 developers in collaboration rather than by one working alone. Humanikidata.org is a new, polished webapp whose UI was created through participatory research with the editor community. In addition, because of the supported launch and the hosting on Wikimedia's Cloud Platform, the new webapp is more stable and expandable. It is currently in use by the Wikimedia editor community.

2) Create more detailed, actionable statistical outputs for editors.


ORIGINAL GOALS

While interesting, and creating the first views into Wikipedia's biography composition, not all the data produced by WHGI and Denelezh is that actionable. Communities have long pointed out that in addition to knowing some of these statistics, the tools aren't delivering on their potential to help users focus their editing. We would like to enable comparison features that can answer questions like: "Which women are represented in French Wikipedia but not English Wikipedia?" "Which occupations have seen the least amount of biographies created in the last 2 years?" "Which biographies are being created in my historical interest areas, so that I can find similar minded editors?" "Have there been any Wikipedias that have seen systematic anti-diversity editing? When was it?" "In what non-gender dimensions are the humans of two wikis similar/different?"

FINAL RESULT

We met our goal of creating more detailed, actionable statistics for editors by implementing ideas that were generated through participatory design. Our design process with 24 community members resulted in the ideation of many features we hadn't thought of ourselves. The shortlisted features that emerged were (along with their status):

- Publication-ready presentation (completed)

- Customizable visualizations (completed)

- Gender gap evolution views (enabled)

- Internationalization (enabled)

- Wikidata human property human coverage data (completed).

3) Enable future community features through an API.


ORIGINAL GOALS

The data that is produced through WHGI and Denelezh is not easily re-usable outside of their own webapps. We want to make sure the data is usable in the full tools/data ecosystem. For instance, we want to:

- Enable data ingest for the Gender Campaigns Tool being built.

- Easily enable bot-updating of stats or lists onto wiki pages, or embeds into any website, e.g. weekly reports delivered to Wikiprojects.

- There are even moon-shot ideas that could be unlocked with an open API, like A/B testing of editing efforts between languages, or AI training data to know what predicts biased editing patterns.

FINAL RESULT

We met our goal of enabling future community features by restructuring our data collection process and creating a public API. After conversations with other developers in the ecosystem, including those behind the proposed Gender Campaigns Tool, we have started keeping more granular historical data that will enable these other tools. Our public API (documentation) is up and running, and is already used by Wikiproject Women in Red to create their own analyses. We have even noticed that our public API was being ingested by a Facebook data collection bot.
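As a rough illustration of the kind of self-serve analysis a public API makes possible, the sketch below builds a request URL and ranks groups by their share of women, similar in spirit to a per-country worklist. Only the base URL comes from this report. The endpoint path, the query parameter, the response shape (`label`, `counts`) and the gender keys are assumptions made up for the example, not the documented humaniki API, so please consult the API documentation for the real routes and fields.

```python
from urllib.parse import urlencode

# Base URL as given in this report; every path and field name below is hypothetical.
API_BASE = "https://humaniki.wmcloud.org/api"


def build_query_url(path, **params):
    """Join the API base, an endpoint path, and optional query parameters."""
    url = f"{API_BASE}/{path.strip('/')}"
    query = urlencode(sorted(params.items()))
    return f"{url}?{query}" if query else url


def rank_by_share_of_women(rows):
    """Return (label, share) pairs, lowest share of women first."""
    ranked = []
    for row in rows:
        total = sum(row["counts"].values())
        if total == 0:
            continue  # nothing to compare for an empty group
        share = row["counts"].get("female", 0) / total
        ranked.append((row["label"], round(share, 3)))
    return sorted(ranked, key=lambda pair: pair[1])


# Made-up sample in the assumed shape; these are not real humaniki figures.
SAMPLE_ROWS = [
    {"label": "Country A", "counts": {"female": 20, "male": 80}},
    {"label": "Country B", "counts": {"female": 10, "male": 89, "nonbinary": 1}},
    {"label": "Country C", "counts": {}},
]

if __name__ == "__main__":
    print(build_query_url("hypothetical/endpoint", project="enwiki"))
    for label, share in rank_by_share_of_women(SAMPLE_ROWS):
        print(f"{label}: {share:.1%} women")
```

In a real consumer, the sample rows would be replaced by the parsed JSON response of an actual endpoint, and the ranking step would stay the same.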

Project Impact

Important: The Wikimedia Foundation is no longer collecting Global Metrics for Project Grants. We are currently updating our pages to remove legacy references, but please ignore any that you encounter until we finish.

Targets

- In the first column of the table below, please copy and paste the measures you selected to help you evaluate your project's success (see the Project Impact section of your proposal). Please use one row for each measure. If you set a numeric target for the measure, please include the number.

- In the second column, describe your project's actual results. If you set a numeric target for the measure, please report numerically in this column. Otherwise, write a brief sentence summarizing your output or outcome for this measure.

- In the third column, you have the option to provide further explanation as needed. You may also add additional explanation below this table.

| Planned measure of success (include numeric target, if applicable) | Actual result | Explanation |
| --- | --- | --- |
| 1 new repository, with 2+ contributors | 1 new repository, with 3 contributors | Our new code repository has been worked on collaboratively by our devs. |
| 1/2 year successful running | 1/2 year successful running | Our webapp has been reliably updating and handling traffic without error since the alpha launch, 6 months ago. |
| 1,000 unique visitors | 1,200 unique visitors | Our visitor tracking shows 1,200 unique visitors in a 6-month timeframe. |
| 5 prominent Wikipedians trained | 6 prominent Wikipedians trained | We conducted a guided walkthrough of humaniki with 6 enthusiastic interview participants. |
| Elicitation outputs | Elicitation outputs | Design recommendations from interviews (13 participants) and surveys (23 participants), on SlideShare. |
| 5+ features delivered | 5 features delivered | Features include: (1) publication-ready presentation, (2) customizable visualizations, (3) data completeness details, (4) nonbinary gender representation, (5) mobile friendly. |
| Software acceptance outputs | Software accepted | We conducted rounds of iterative research with our biggest power users, who started using the new app. |
| 1,000+ clicks | Obsoleted | Initially we thought part of our feature set would include links back to Wikipedias; after UX research, other features were prioritized more highly. |
| 1 new Wikiproject user | 1 new Wikiproject user | Italian Wikiproject:WikiDonne were onboarded after UX research, and now use humaniki to inform their work. |
| 1 new API user | 1 new API user | Community members created an on-wiki worklist derived from the API: Wikipedia:WikiProject_Women_in_Red/Gender_imbalance_per_country |

Story

Looking back over your whole project, what did you achieve? Tell us the story of your achievements, your results, your outcomes. Focus on inspiring moments, tough challenges, interesting anecdotes or anything that highlights the outcomes of your project. Imagine that you are sharing with a friend about the achievements that matter most to you in your project.

- This should not be a list of what you did. You will be asked to provide that later in the Methods and Activities section.

- Consider your original goals as you write your project's story, but don't let them limit you. Your project may have important outcomes you weren't expecting. Please focus on the impact that you believe matters most.

The story of humaniki is one of software maturity. The concept of measuring the Wikipedia biography gender gap had already been proved (twice), but the software that drove it wasn't refined or easy to use, and that's what humaniki accomplished. We convened the developers and community for a participatory redesign. Then we put our heads down to achieve a level of polish that makes the app not only functional but delightful. Less obviously, we laid the foundation for this project to grow in the future, growth which has already begun, as the webapp is in use by the community today.

The first step to creating mature software is not technical but organizational: having the right stakeholders involved in planning. The precursor tools WHGI (Max Klein, 2014) and Denelezh (Envel Le Hir, 2017) were pet projects created in isolation by single developers but, ironically, with the same ideas. Only at Wikimania 2019 were we able to meet for the first time, and we agreed to work together. With grant funding we were able to hire a UI developer (Eugenia Kim) and a UX researcher who was also a community member (Sejal Khatri), who subsequently surveyed and interviewed 24 more Wikimedians. Having everyone converge and seeing the community's interest was synergetic: it sparked our enthusiasm and theirs, and led to co-design and planning that ensured our time and energy were well spent.

As technical founders we had many advanced theoretical ideas about next features; however, interviewing users of our retiring tools showed us many more important areas needing improvement. We had many moments of insight, from the mundane (our domain names were not memorable) to intricate UI details. As one example, an anti-pattern that came up was that many users were screenshotting our webapps to embed in reports or flyers, but the graphs always needed extra annotation and beautifying. Now each visualization and table in our app has been designed to be screenshot-able, containing all the relevant explanation, metadata, and licensing information in a single rectangle. We thought we should invest primarily in more statistics, but the community wanted more frontend. The UI is now a pleasure to use rather than an impediment; our webapp is modern, stable, and mobile friendly.

Behind the scenes, our UI improvements were enabled by our new technology stack, which fixed a lot of development shortcuts that had been taken in the precursor tools. For instance, we can now support all nonbinary genders, add future demographic dimensions, show arbitrary historical comparisons, and deliver our data over an API. Launching our webapp and seeing the community reactions on-wiki and on Twitter was so validating, with users posting discoveries they'd made. We had 500 visitors in our first week, and still sustain 70-100 unique visits per week. Another area of empirical success was the API's serve-yourself data. We experienced a lot of pride and knew the concept was proved when we saw Wikiproject Women in Red building their own worklists from our API without ever having talked to, or been supported by, the developers. We believe the time invested in good practices and maturity like these will serve us well, far beyond the grant timeline.

Survey(s)

If you used surveys to evaluate the success of your project, please provide a link(s) in this section, then briefly summarize your survey results in your own words. Include three interesting outputs or outcomes that the survey revealed.

Other

Is there another way you would prefer to communicate the actual results of your project, as you understand them? You can do that here!

View our webapp at https://humanikidata.org! View our blog posts on Diff, particularly our launch blog post.

Methods and activities

Please provide a list of the main methods and activities through which you completed your project.

User Research and Participatory Design


- Project advertising

- Writing blogs

- Contacting community members on-wiki

- Contacting community members on listservs

- User data gathering

- Lighter-touch user surveys

- Long form user interviews

- Design processes to distill features

- Grounded theory method

- 5 page design method

- How/Wow/Now matrix method

- 4 iterations of prototyping features in figma with community feedback

- Cognitive walkthroughs

- Communicate results to participants

- Launch app

- Engage with response on social media

- Write update blogs

- Create YouTube walkthrough videos

- Report presentation and Q&A with Wikimedia Research

"And look, this tool has just come out to make visible the gender gap across all the projects of the Wikimedia movement. The data is pretty devastating... https://humaniki.wmcloud.org" (PatriHorrillo, tweet, translated from Spanish, https://twitter.com/PatriHorrillo/status/1381580059757150210)

Software Development and Testing


- Architectural planning

- Convening developers for schematic discussions and workshop

- Writing code in multiple disciplines

- Data processing layer (Java/Python/SQL)

- API layer (Python)

- UI layer (JavaScript/React)

- Pair programming for junior-developer education

- Software integration testing

- 2 rounds of agile user-testing

Project resources

Please provide links to all public, online documents and other artifacts that you created during the course of this project. Even if you have linked to them elsewhere in this report, this section serves as a centralized archive for everything you created during your project. Examples include: meeting notes, participant lists, photos or graphics uploaded to Wikimedia Commons, template messages sent to participants, wiki pages, social media (Facebook groups, Twitter accounts), datasets, surveys, questionnaires, code repositories... If possible, include a brief summary with each link.

- Main

- Humaniki Webapp, our main product - http://humanikidata.org/

- Monthly blogs on development at wikimedia diff - https://diff.wikimedia.org/author/sek2016/

- User Experience Research

- UX Research Report Presentation, a complete overview of how we co-designed humaniki - https://www.slideshare.net/SejalKhatri/humaniki-user-research-report

- UX prototypes video, explanations of wireframes and mockups - https://www.youtube.com/watch?v=0cbPWeJ8PiQ

- Code

- Phabricator board https://phabricator.wikimedia.org/project/view/4967/

- Developer's contribution Guide, an entrypoint for understanding how humaniki works technically - https://github.com/TheEugeniaKim/humaniki/blob/master/docs/CONTRIBUTION_GUIDE.md

- Specific repos

- Wikidata dump processing.

- https://github.com/notconfusing/denelezh-import

- This is a Wikidata Toolkit Java program that processes the huge Wikidata dump files and subsets them into CSVs that relate only to humans.

- Metrics generation

- https://github.com/notconfusing/humaniki-schema

- This is a Python/SQL layer that aggregates data into metrics about humans. It is written mainly in SQLAlchemy, which compiles into MySQL-dialect SQL before executing.

- API layer.

- https://github.com/notconfusing/humaniki-backend

- This is a Python Flask HTTP server that provides a public API at humaniki.wmcloud.org/api to serve results from metric generation.

- Web UI.

- https://github.com/theeugeniakim/humaniki

- This is a React dashboard that gets its data from the API layer. You may develop on the dashboard while getting live data by setting the REACT_APP_URL environment variable, thereby removing the other repos as dependencies.

- Social media accounts

- Humanikidata on twitter https://twitter.com/humanikidata

- FAQs

- On-wiki FAQ mw:Humaniki/FAQ
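To make the data flow through the repositories listed under Code more concrete, here is a minimal sketch of the aggregation idea: rows about humans, as a dump-processing step might emit them in CSV form, are counted along one or more demographic dimensions. It uses only the Python standard library rather than SQLAlchemy, and the column names (`id`, `gender`, `birth_decade`) and sample rows are hypothetical, not the actual humaniki schema or data.

```python
import csv
import io
from collections import Counter


def aggregate_by(csv_text, dimensions):
    """Count rows per combination of the given columns (empty values become 'unknown')."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    for row in reader:
        key = tuple(row[dim] or "unknown" for dim in dimensions)
        counts[key] += 1
    return counts


# Hypothetical sample rows; real dump-derived CSVs would be far larger.
SAMPLE_CSV = """id,gender,birth_decade
human_1,female,1950
human_2,male,1950
human_3,female,1960
human_4,,1960
"""

if __name__ == "__main__":
    # One dimension: counts per gender.
    for key, n in sorted(aggregate_by(SAMPLE_CSV, ["gender"]).items()):
        print(key, n)
    # Two dimensions: counts per (gender, birth decade).
    for key, n in sorted(aggregate_by(SAMPLE_CSV, ["gender", "birth_decade"]).items()):
        print(key, n)
```

Passing the dimensions as a list is one simple way to leave room for further demographic dimensions later without changing the counting logic.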

Learning

The best thing about trying something new is that you learn from it. We want to follow in your footsteps and learn along with you, and we want to know that you took enough risks in your project to have learned something really interesting! Think about what recommendations you have for others who may follow in your footsteps, and use the below sections to describe what worked and what didn’t.

What worked well

What did you try that was successful and you'd recommend others do? To help spread successful strategies so that they can be of use to others in the movement, rather than writing lots of text here, we'd like you to share your finding in the form of a link to a learning pattern.

Community Engagement

- Contact community first. Since some of our team members are long term Wikipedians, we were able to follow a defined process to involve the interested community members in the project research and development. This helped us establish effective communication between different stakeholders.

- Learning_patterns/Let_the_community_know. Having access to diff.wikimedia, Wikimedia’s official news channel, helped us put our project in front of a large audience, and in getting exposure to community members.

- Learning_patterns/How_to_conduct_interviews_with_your_project_partners. We received a 54% participation rate for our interview research among survey respondents, which showed the community's high interest in engaging with our project.

Technical

- Build essentials first. Identify what any incarnation of the project would require technically and work on that first, while other details are being identified. For instance, in this case, we designed the core database schema while co-design was in progress.

- Focus on an MVP release. Don't release your project all at once; that doesn't leave enough room for iteration. In our case the MVP was getting the full software stack running end to end on Wikimedia servers before extra features like visualizations were added.

- Organize coding into epics. Split your remaining features into alpha, beta, and v1 releases. We used Wikimedia's Phabricator to keep track of those epics.

Team Support and Workflow


- Sharing Facilitation responsibilities: Collaborating with the team members and sharing meeting facilitation roles kept everyone energized.

- Daily standups. In our remote environment, with timezone differences, we asynchronously posted what we were going to work on that day.

- Learning patterns/Working with developers who are not Wikimedians. Weekly Scrum: synchronously, once or twice a week, we had active discussions about the project workflow and updated the strategy based on the updated workflow.

- Collaborative UX/UI exercises. Working through these with the team and seeing feedback from users helped to nail down a concrete direction, which helped ground me when prioritizing features.

- Learning_patterns/Let_the_community_know - We used the Wikimedia Diff blog to keep community updated on our progress.

- Learning_patterns/How_to_conduct_interviews_with_your_project_partners. We sought to understand what our partners needed in the abstract first, and then later converged onto practical details.

What didn’t work

What did you try that you learned didn't work? What would you think about doing differently in the future? Please list these as short bullet points.

- Learning patterns/Grant projects are not startups. We overpromised slightly on features. One of our goals was to make humaniki feature-complete with respect to duplicating Denelezh and WHGI. We came very close, at least 90%, but some of those items will need to be added in volunteer time after the grant: the internationalization and evolution views.

- No in-person 'offsites'. Our remote team could have used a convening to fraternize at the beginning and to work together on a sprint at the end. However, COVID restrictions made this too hard, and we elected to convert our travel budget to more design budget.

- Managed too much of our own infrastructure. We decided to run our own database on wmcloud for reasons of autonomy and development speed. However, it might make more sense to take the time to integrate with Wikimedia's Spark cluster in the future.

Other recommendations

If you have additional recommendations or reflections that don’t fit into the above sections, please list them here.

Next steps and opportunities

Are there opportunities for future growth of this project, or new areas you have uncovered in the course of this grant that could be fruitful for more exploration (either by yourself, or others)? What ideas or suggestions do you have for future projects based on the work you’ve completed? Please list these as short bullet points.

- Small items from our Phabricator board.

- Occupations metrics

- Backfill metrics with Denelezh and WHGI historical metrics

- Evolution API and visualizations.

- I18n is technically easy now with React, but it's a campaign effort.

- Grand vision ideas.

- Enable the 'Gender campaigns tool' by 'listmaking'.

- Anomaly detection.

Part 2: The Grant

Finances

Actual spending

Please copy and paste the completed table from your project finances page. Check that you’ve listed the actual expenditures compared with what was originally planned. If there are differences between the planned and actual use of funds, please use the column provided to explain them.

| Expense | Approved amount | Actual funds spent | Difference |
| --- | --- | --- | --- |
| Data engineering | $6,000 | $6,000 | $0 |
| Frontend engineering | $13,000 | $13,000 | $0 |
| UX Researcher | $8,000 | $8,000 | $0 |
| Project Management | $3,000 | $3,000 | $0 |
| Community engagement (was Travel pre-COVID) | $5,500 | $5,500 | $0 |
| Total | $35,500 | $35,500 | $0 |

We moved more money into the community and frontend positions, taking money away from the data engineering portion, for which we were able to get donated time.

Remaining funds

Do you have any unspent funds from the grant?

Please answer yes or no. If yes, list the amount you did not use and explain why.

- NO

If you have unspent funds, they must be returned to WMF. Please see the instructions for returning unspent funds and indicate here if this is still in progress, or if this is already completed:

- N/A

Documentation

Did you send documentation of all expenses paid with grant funds to grantsadmin@wikimedia.org, according to the guidelines here?

Please answer yes or no. If no, include an explanation.

- YES

Confirmation of project status

Did you comply with the requirements specified by WMF in the grant agreement?

Please answer yes or no.

- YES

Is your project completed?

Please answer yes or no.

- YES

Grantee reflection

We’d love to hear any thoughts you have on what this project has meant to you, or how the experience of being a grantee has gone overall. Is there something that surprised you, or that you particularly enjoyed, or that you’ll do differently going forward as a result of the Project Grant experience? Please share it here!

At the outset of the grant, one piece of advice we received from the Grants team was that hiring junior developers might allow us to stretch our wage budget and bring new contributors to the Wiki world. Following that advice, we selected a junior-experience candidate who was qualified but less well-versed in our particular technologies. It took us longer, and more pair programming, to accomplish our tasks, but our junior developer benefited, and will hopefully be a long-time dev contributor to the Wikimedia movement.

COVID of course impacted our project. We were grateful to have been able to convert our travel funding. We had still hoped to work in person as a team at the beginning of the grant, but unfortunately we had to convert to fully remote work. The Grants team was thankfully sensitive to this change.