Grants:IEG/Learning/round 1 2013/post-decision

After decisions were announced for round 1 IEGs, we collected feedback from 2 surveys of participants in April 2013. This feedback, combined with ongoing committee discussions and other community input, will be used to iterate on the Individual Engagement Grants program from May-July 2013, before the second round of IEG begins.

Below you'll find a list of recommendations culled from the survey responses, followed by the aggregated survey results.

See the Survey methodology section at the end of this report for details on how the surveys were conducted.

Recommendations

We suggest focusing on the following changes when iterating on the program for round 2:

- Submission

- Build a guidelines page with more concrete proposal examples now that we have them from round 1. We can point to what a really good 6-month project scope looks like, what kinds of projects and expenses are considered favorably, etc.

- Keep the iterative process for proposal development: it was a strength that we need to preserve even as the program inevitably takes on more structure.

- Incorporate endorsement into part 2 of the proposal process rather than separating it in a part 3 workflow, so that it is clear that community notification should happen right away. We'll want to update the application steps to make this clearer as well.

- Build in more/better notifications for participants (can we get Echo on Meta soon?)

- Make the steps and information about the decision-making process clearer on the program pages.

- Continue to focus on person-to-person outreach to spread awareness of the program. Village Pump posting in hundreds of languages is always time-consuming and could be cut from the promotion strategy if needed.

- Review

- Restructure the review process to increase the amount of qualitative feedback from the committee, both from individual members and in the aggregate. The scoring process was useful for committee members and for internal decision-making comparisons, and we should keep that quantitative set of metrics. But we also know that quantitative scores aren't sufficient feedback on project plans for proposers, so we need to increase our focus on the qualitative aspects of review.

- Keep building IdeaLab. Upcoming sprints will focus on surfacing dynamic content and building systems that drive more traffic and activity to the space, both of which will help proposers get more early feedback and discussion on their proposals.

- Use the strength of committee diversity by dividing up proposals by project type and reviewers by expertise, so that reviewers can focus on areas of core interest and competence. Let's see if this helps alleviate potential burn-out concerns from having to carefully review too many proposals, and makes the program more scalable as a whole.

- Engage more community members with expertise in the various types of projects to give feedback on proposals and project ideas.

- Update committee pages to set clear expectations about the workload required, now that we have a better sense of it, to avoid surprise!

- Revisit the scoring rubric to account for level of risk and for the reviewer's degree of confidence in their assessment, and look into the idea that the 5-point scale resulted in too many average scores (let's collect some further data on this).

- Set up a private wiki to improve the collaborative aspects of the committee's formal review, replacing mailing lists for some of the consensus-building discussions.

- Look into scoring tools, potentially a buildout of the system used for scoring Wikimania scholarships.

- Do more to encourage unselected proposers to keep engaging. IEG shouldn't feel like a contest with winners and losers; everyone should feel that they've learned and gained something valuable from the experience. Simple acknowledgements like badges, notifications, and clear feedback can all contribute to a good experience.

Future growth opportunities

- IdeaLab can help scale the potential for individual grantmaking. Further investment here is our first priority this year.

- The grantmaking process may be an opening for new contributors to join the Wikimedia movement. One proposer’s comments remind us that because our target is existing contributors, we aren't taking full advantage of the enthusiasm of newbies: "The IEG program is wonderful, however it seems "inward-facing" to people who have been active editors and know their way around the culture of Wikipedia. I love Wikipedia, and would love to be a contributor, but as someone new to the editing culture, I found the communication system to be somewhat opaque. I'm used to communicating by e-mail." Although our grants are intended for active Wikimedians, we could also choose to use them as a tool for teaching people about the Wikimedia movement. We’ll probably not focus on this kind of expansion for the current year, but should keep it in mind for the future.

- Several participants wish that WMF grantmaking partnered more closely with WMF tech to offer assistance or code review, so that more technical IEG projects can be supported in the future. Organizational priorities remain a dependency here; no work is planned in this regard yet.

IEG Experience

The overall pilot experience appears to have been good! Participants say they are likely to return in future rounds and to tell their friends/colleagues to participate.

- Proposer, will you apply to IEG again in the future?

- Proposer, will you recommend others apply to IEG in the future?

- Committee member, will you serve on IEG Com again in the future?

- Committee member, will you recommend others serve on IEG Com in the future?

- Comments

- "Thanks for a great experience." - committee member

- "I think for a first go-round this was a very well executed process. I valued the committee's role in evaluating projects and thought it dovetailed nicely with the due diligence process lead by WMF folks." - committee member

- "I have a lot to live up to with these grants but feel like I have the support I need to be successful. I appreciate that." - grantee

- "I hope to see the brand IEG as ubiquitous as GSOC." - proposer

- "Keep it going, guys! This is definitely one of the important initiatives to the movement these years! I am having great hopes for the IEGs." - proposer

Publicity

Personal invites appear to be the most effective way to encourage participation, and banners, mailing lists, and social media all seem worth continuing.

- Proposer, how did you find out about IEG?

- Committee member, how did you find out about IEG?

Committee setup

Overall, participants felt the committee setup went well.

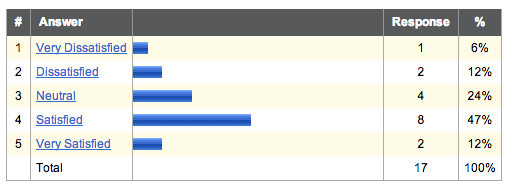

- How satisfied were you with how the IEG Committee was selected and organized?

Workload

Self-assessment of committee members' workload:

- 70% of committee respondents felt the workload was more than they had anticipated

- 30% felt it was as-expected

Those members who had not served on committees in the past rated their workload as greater than expected more often than those who had. We see 3 possible interpretations of this finding:

- We're doing a good job of enticing volunteers beyond the usual suspects to serve on committees

- We should do a better job setting expectations about workload

- We should be careful to avoid committee burnout by keeping the workload manageable

What worked well

Diversity

- "You actively pursued diverse representation from the community, reached out to them, and didn't set too high a bar to entry. Siko was very helpful."

- "Good diversity of skills in the committee."

- "Selecting members from both the community and the WMF staff was something I liked a lot. The participation of the community helped in generating and implementing new ideas from time to time."

- "Proved to be a diverse group of competent people. It's often hard to get both diverse backgrounds/projects/languages and competence at the same time."

Open interaction

- "It was good to see that the preparation was done fairly publicly and widely advertized well before the deadline."

- "I liked interactive internal communication and flexibility of outcomes."

- "It's open ended nature. No bureaucracy."

Organization

- "Giving us a fair amount of time to review proposals was also helpful to ensure that the work suited everyone's lifestyles."

- "I liked the system of vote."

- "I liked how well organized the things were for us - asking grantees to provide relevant information."

Engagement & collaboration

- "I found the fellow committee participants to be engaged and taking a sense of responsibility for their role."

- "The discussions with other people"

- "Collaborating with the committee members"

- "The ability to review ideas and discuss proposals with some people that I would not usually interact with - be it because of the projects they usually edit on, or the time zones that they are in."

- "Collaborative work toward the result. I enjoyed working through the careful selection process."

- "Siko. She was awesome!"

Learning & impact

- "It was intellectually stimulating"

- "Ability to learn about money management and cost-benefit analysis"

- "Learning about interesting projects and people which I otherwise would never know"

- "Ability to approve a bunch of projects that are potentially very important that would otherwise never have happened"

Where we can improve

Mailing list discussions

- "I think that few projects required much more discussions than others. In my opinion we should avoid discussing a lot for few projects."

- "Liked least the long discussions with other people"

- "Too much chatter on the mailing list about procedures. But this will diminish in subsequent years."

- "Bit circular threads in mailing list, not too high participation."

- "The email threads were a little annoying, I might prefer a digest form, although that reduces timely responses. On wiki communication is best where possible."

Review tools

- "Create an easier to navigate scoring interface and next round try using a private wiki instead of email and google docs"

- "Whilst the individual scoring was good, because it was private it mean that I didn't know which one of my fellow committee members had given a certain grant a particular score."

- "If we could somehow score individually then have these scores released between members of the Committee, then we could possibly discuss where we disagree to see why our views differed so radically if that does happen."

- "For new Committee members perhaps when we first start reviewing proposals, we should review one proposal "together" as a Committee so that everyone understands how to appropriately review a proposal."

- "Webex meetings, in person meetings, t-shirts :)"

Expectations

- "The workload was a little higher than expected because it was the first time that we were scoring grants. Therefore, policies and rules had to be formulated. Having set clear rules and policies, I think the workload will decrease in the rounds to come because rules and policies have already been formulated."

- "The gap between the workload I expected and actually spent made me feel underperforming."

- "Defining areas (tech, GLAM, etc) and allowing reviewers work exclusively on some of them might help they work more efficiently and help recruit people with necessary skills for each area."

- "Perhaps we should assign reviewers to a particular group of proposals so that they can spend time on say 10 proposals instead of glancing over 20?"

- "Consider financial support for committee members who spend dozens or hundreds of hours on IEG over several months, otherwise this level of volunteer involvement is not very realistic or advisable."

Proposal submission

Proposers were generally satisfied with the proposal submission process:

- 89% of respondents were satisfied or very satisfied; the remaining 2 respondents were neutral.

What worked well

Design & workflow

- "Good design with clear work flow."

- "I liked the clear instructions"

- "It was fairly straightforward and seemed less formal than standard grant application processes in other contexts, which is totally appropriate for IEG."

- "I thought the template was very useful."

- "Clarity in the exposition of the requirements & templates to fill the submit"

- "I enjoyed learning about the Wikimedia editing process. It made me want to contribute more to the whole project, as I find the whole idea quite inspiring."

- "I like the friendly non-markup-like feel of the various IEG pages. Has its own character. I like that."

Feedback & iteration

- "Continual feedback--from talk page comments and endorsers, to the formal committee review (especially the qualitative comment summaries, more of that please), to the due diligence process which was really a chance to improve the proposals even further. Basically the fact that the proposal was a living document which could grow with the grant was a great sense of flexibility and ongoing improvement."

- "I really appreciated the feedback I got from people within the grant program and from community members."

- "The continued feedback"

- "The fact that I could continuously keep tweaking it was very helpful"

Where we can improve

MediaWiki

- "Initially it was not clear for me that I should to change the status to "PROPOSED""

- "The 3 stage process on the proposal process was slightly clunky, I'd like to see it streamlined. I recognize that markup is not very UI friendly for this kind of preloading templature, but I can still dream."

- "Maybe using Semantic Forms with SMW or something similar to avoid having to edit templates "crude" :-)"

Proposal review

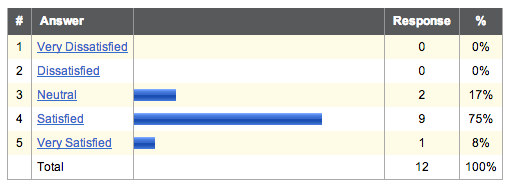

Proposer, satisfaction with the review process and feedback provided:

Committee member, satisfaction with the review process:

What worked well

Assessment & scoring

- Committee perspective

- "There was a clear set of assessment criteria on a simple 1-5 scale that was easy to understand but had sufficient choice to appropriately score the different proposals (we didn't need a 1-3 or 1-7 scale)."

- "Exceedingly well structured - especially for a first time. Lots of backup and support, as well as good documentation for both qualitative and quantitative scoring."

- "Perhaps there were too many links and documents, but this is a symptom of lots of good documentation. Workflow was very clear considering the amount of stuff there was to read."

- "The infoboxes with the summarized proposals were really helpful. Also, the google score form that we had to fill in. Made it easier to do the job."

- "The review criteria covered everything that I'd expect it to."

- "Review criteria were very clear and helpful"

Feedback & input

- Committee perspective

- "It was a team effort, and there was enough discussion on the list that we came to a relative consensus on the proposals we approved."

- "We gave sufficient time for community members to also be able to comment on the proposals, which was particularly helpful when it came to reviewing the proposals."

- "Good job at structuring applications - the applicants provided relevant input"

- "Liked having the opportunity to give my opinion in private"

- Proposers' perspective

- "I liked the iteration process to improve the proposal before review"

- "I liked the continual feedback"

- "Being able to go back and edit parts of my proposal after receiving feedback was very helpful."

- "It was useful to be force to explain things clearer, and for people who were not aware of the issues. The review was specific and clear."

- "I liked the opportunity to explain the things that initially the committee have doubts on"

- "It was a very open process and didn’t feel like the decisions were being made behind closed doors."

Where we can improve

Assessment & scoring

- Committee perspective

- "I think it would have been ideal if the proposals were scored on a 10 point scale. In doing so, proposals getting similar scores could have been avoided."

- "It might be good to have a "confidence" score which shows how much the reviewer is confident about their judgement."

- "Should add a criteria about proposal risk level"

- Proposers' perspective

- "The feedback from the committee was vague, did not align well with the numerical scores..., and did not accurately account for the depth or balance of community feedback for the proposal. It felt like there was behind-the-scenes politics going on that I have no idea how to engage with."

- "I didn’t like the quantitative scores, even reviewing all 20+ proposals didn't give a particularly good sense of what was most liked. It appeared to be mostly 3's and 4's in all cases. I didn't really have a sense from those scores what was actually preferred. I forget if there was an average score for the proposal, but that might be useful to provide a more concrete number, although it risks people focusing on numbers over substance."

Feedback & input

- Committee perspective

- "I see one of the characteristics of IEG in comparison to Grants is that it has deadlines. While reviewers and proposers may work together, the responsibility to prepare a proposal in a good form in time is up to the proposer. This fact should perhaps be more emphasized."

- "The communication between reviewers and proposers to clarify things should have been more encouraged, perhaps with a longer time to do so."

- Proposers' perspective

- "We only got to know the scoring and summary, not all of it. There should be more feedbacks by more members."

- "I wasn't sure when I am supposed to inform the local community about the project so they can comment/show support."

- "Definitely could use some more robust feedback early so that we could address anticipated concerns."

- "Feedback should be given as soon as possible, it takes a lot of time before we know the result that we are disqualified :("

- "It was hard to know how to communicate with the deciders. Actually, it was never clear who the deciders were. Questions would show up in the comment section on my proposal, but I would only see them if I checked; there was no e-mail notification. And then I wasn't sure if the questions could be posed by anyone, of if they were coming from the panelists. There seems to be no system for notifying applicants of feedback, or directions on where to go to find posted feedback." (Note: this is from someone new to the movement, and we might consider it a reminder of how talk pages and notifications feel totally broken to the un-wikified.)

- "I think reviewers should be more involved in the proposal development and curating phase. Idealab makes a lot of sense, and I'd like to see committee members spend some time sharing their insights before the formal review process."

- "maybe the proposals need more better suggestions from more experienced editors to improve the proposals. I think we should list out experienced editors who have done projects and research works previously and request them to give suggestions on current proposals. May be we are already doing this, I don't know but we need to reach out to more such users."

- "If there's time people should be advised on the weaknesses of their proposal before the judging, so they can have time to improve. We do want more projects as a community after all."

- "Allowing a revision period for response to committee thoughts."

- "Have the committee engage with the details of grant proposals during the development period, so that fewer proposals get sideswiped by the particular easily-fixed concerns of individual committee members."

- "Give feedback to participants as soon as a proposal is submitted, so that he can modify plan as per requirement."

Expectations

- Proposers' perspective

- "Allowing to submit technical proposals that require technical mentorship or code reviewing"

- "Well, it would be absolutely awesome to be able to dedicate WMF Tech staff time! :-)"

- "I think it should be clearer what the program will fund or not fund, in particular regarding how much work should be done, as I had to narrow down the scope of the project during the process, which was still not enough."

- "Providing a more reasonable range of funding that can be requested. It seems like the larger grants were all rejected, even though the program stated that grants could request up to $30,000"

- "There should be some guidelines as to the size of the project."

- "More guidelines, more budget estimations for different kind of projects."

Proposal outcomes

Selected

All 5 respondents whose proposals were selected said they generally felt they had all the information needed to set up their grant in a timely fashion. Further data will be collected on the grantee experience in a post-grant survey.

Not selected

Most proposers whose projects were not selected in the pilot round said the feedback provided was useful for making changes to their projects for future endeavors. Some also noted, however (as described above), that the feedback they received was too vague, that they disagreed with the assessment of their proposal, or that they would have preferred more time for discussion.

- Comments

- "it was great getting experience writing such a grant and getting feedback."

- "I understand that my actual idea was misunderstood and I probably need to phrase it better and clearer next time."

IdeaLab

About half of the proposers said they participated in the IdeaLab, and the most common request from IEG proposers is for more feedback on their plans, from more people, as early as possible in the process.

We see this as evidence of the potential for a space where ideas can be collaboratively developed into grant proposals with input from many volunteers. It appears to be worth investing in more work-sprints to fully realize the vision of this space.

- Comments

- "I really like the fact that It exists and want to see more grants go through it, perhaps even as a requirement."

- "I liked that there was some feedback before the formal evaluation."

- "I liked the design very much!"

Survey methodology

To help understand the different user experiences of IEG participants from the pilot round of this program, we ran 2 surveys in April 2013, beginning 2 weeks after round 1 grantees were announced. The surveys were created using Qualtrics, and a message template including a link to the survey was distributed using Global Message Delivery. For proposal submitters, the survey message was delivered to the editor's talk page on their home wiki if the editor linked to that page from their IEG proposal or from their user page on meta.wikimedia.org. If no home wiki was specified, the survey was delivered to the editor's talk page on meta.wikimedia.org. The surveys were closed after two weeks, by which point no new responses had been received for several days.

- The committee survey targeted committee members who reviewed proposals (18 editors).

- The proposer survey targeted anyone who submitted a proposal, and included responses from selected grantees as well as from proposers whose projects were not selected (32 editors).

The survey questions focused primarily on eliciting feedback on the IEG process and suggestions for how the Foundation could improve the experience for both participants and reviewers in future rounds. Respondents were also asked, but not required, to provide information about related experience and basic demographic information.

Committee

- 13 respondents total (72% of those invited to take the survey)

- 12 respondents participated all the way through the review process

- 1 respondent recused themselves when formal review began because they were also a round 1 proposer

- Other Wikimedia committee experiences

- WMF Grants (GAC)

- Wikimania Jury

- Sister projects

- Language com

- Affiliations com

- Mediation com

- None

Proposers

- 16 respondents total (50% of those invited to take the survey)

- 6 (38% of respondents) were selected for an IEGrant

- 10 (63%) did not have their proposal selected in round 1

- Home projects

- EN:WP (x6)

- PT:WP

- OR:WP (x2)

- BG:WP

- IT:Wikisource

- Commons

- 1 avid reader but not an editor of any Wikimedia project

- Countries

- United States (x4)

- India (x3)

- Portugal

- Bulgaria

- Spain (x2)

- Italy

- Gender

- 75% male

- 13% female

- 13% decline to state