Learning and Evaluation/Evaluation reports/2015/Other Photo Events/Limitations

This report systematically measures a specific set of inputs, outputs, and outcomes across Wikimedia programs in order to learn about evaluation and reporting capacity as well as program impact. Importantly, it is not a comprehensive review of all program activity or all potential impact measures, but only of those program events for which data was accessible through voluntary and grants reporting.

Read this page to understand what the data tells you about the program and what it does not.

Response rates and data quality/limitations

In this round of reporting, 19 organizations and individuals reported 103 different photo events in 2013 and 2014. In addition to data reported directly through voluntary reporting, we mined existing reports and filled many data gaps for grantee-reported events using publicly available information on organizer websites and Wikimedia project pages, and by retrieving on-wiki metrics. This information included the number of participants, event start and end times, number of media files uploaded, number of unique media used, and ratings of image quality (Featured, Quality, and Valued). Where start or end times were not reported, we estimated the dates from the first or last day a photo was uploaded to an event category.

Only a minority of events reported key inputs such as budget, staff hours, and volunteer hours, and this information cannot be mined. Thus, while we explore these inputs, we cannot draw many strong conclusions about program scale or how these inputs affect program success.

In addition, the data for other photo events are not normally distributed; the distributions are, for the most part, skewed. This is partly due to small sample size and partly due to natural variation, and it rules out comparisons of means and other analyses that require normal distributions. Instead, we present the median and range of each metric and use the term "average" to refer to the median, since the median is more robust to skew and outliers than the arithmetic mean. To give a complete picture of the distribution of the data, we include the means and standard deviations for reference. For the summary statistics of data reported and mined, including counts, sums, arithmetic means, and standard deviations, see the appendix.
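As an illustration of the approach described above, the following is a minimal sketch of how the median and range can be reported alongside the mean and standard deviation, and how an event's start and end dates can be estimated from upload dates. It is not the code used to produce this report. The function names `summarize` and `estimate_event_window` and all sample values are hypothetical and chosen only to show the effect of a skewed distribution.

```python
from datetime import date
from statistics import mean, median, stdev


def summarize(values):
    """Return the median and range, plus mean and SD for reference."""
    return {
        "median": median(values),
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "sd": stdev(values),  # sample standard deviation
    }


def estimate_event_window(upload_dates):
    """Estimate start and end as the first and last upload date."""
    return min(upload_dates), max(upload_dates)


# Hypothetical upload counts for seven events (not data from this report).
# One very large event skews the distribution to the right.
uploads_per_event = [12, 18, 25, 30, 41, 55, 900]
summary = summarize(uploads_per_event)

# Hypothetical upload dates found in a single event category.
window = estimate_event_window(
    [date(2014, 5, 3), date(2014, 5, 1), date(2014, 5, 9)]
)

print(summary)
print(window)
```

In this made-up sample the single large event pulls the mean far above the median, which is why the median is the more representative "average" for skewed data of this kind.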

Priority goals

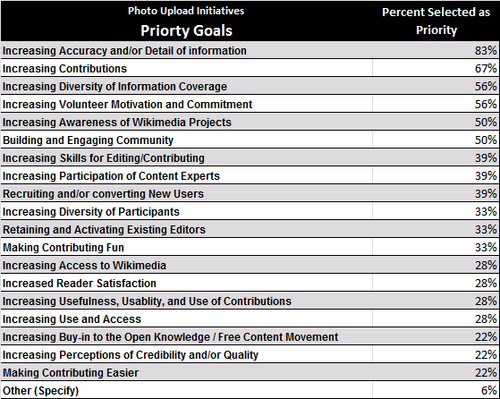

The most popular goals, those noted as a priority by more than half of program leaders, were to increase accuracy and/or detail of information, contributions, diversity of information coverage, and volunteer motivation and commitment. We asked program leaders to share their priority goals for each photo event.[1] Seven event organizers reported priority goals for 18 photo events. The number of priority goals selected ranged from 1 to 18; the average number selected was six.[2] The table below lists program leader goals in order of their prominence, from most often selected to least. The most commonly selected goal was «Increasing accuracy and/or detail of information» (selected 15 times); the second most common goal was «Increasing Contributions» (12 times). Event organizers most commonly selected six priority goals per event.

Percent of goals selected by program leaders as priority goals.
