Talk:Image filter referendum/Archives/2011-04

Please do not post any new comments on this page. This is a discussion archive first created in April 2011, although the comments contained were likely posted before and after this date. See current discussion or the archives index.

User option to display immediately

Is it intended there will be a user option to bypass this feature and display all images immediately always? DGG 13:45, 16 August 2011 (UTC)

- Displaying all images immediately is the default behavior. Images will only be hidden if a reader specifically requests that they be hidden. Cbrown1023 talk 15:11, 16 August 2011 (UTC)

At the moment it's "opt in", but you can already hear the calls for "opt out". --Eingangskontrolle 20:27, 17 August 2011 (UTC)

Committee membership

I'm curious to know the answers to the following questions, if answers are available: How has the membership of this committee been decided? Who has it been decided by (presumably by some group at the Foundation - I'm guessing either the board, or the executive)? What steps are being taken to ensure that the committee is representative of the broad range and diversity of Wiki[p/m]edia's editors and users? Thanks. Mike Peel 22:36, 30 June 2011 (UTC)

- As an add-on comment to my last: I see that there are 2 US, 2 Canadian, 1 UK and 1 Iranian on the committee at present, which is very much skewed to English and the western world. E.g. there's no-one who speaks Japanese, Chinese or Russian, and no-one who is located in the southern hemisphere. If this is a conscious choice, it would be good to know the reason behind it. Thanks. Mike Peel 07:59, 1 July 2011 (UTC)

- In fact this looks pretty much an en.wiki thing. Nemo 10:39, 1 July 2011 (UTC)

- Why does it matter?

- The committee's job is to let you have your say about whether WMF ought to create a software feature that offers individual users the ability to screen out material they personally don't choose to look at.

- The committee's job is not to decide whether Foroa (below) has to look at pictures of abused animals, or which pictures constitute abused animals, or anything like that.

- Unless you think this group of people will do a bad job of letting you express your opinion on the creation of the software feature, then the composition is pretty much irrelevant. WhatamIdoing 20:28, 1 July 2011 (UTC)

- My main worries are the accessibility of committee members from a linguistic point of view (i.e. if non-en/fa/az/es speakers have questions about the process), and the risk of perception of this vote as a US-centric stance (which could lead to voting biases, e.g. "it's already been decided so why should we bother voting", or "let's all vote against the American world-view!"). Mike Peel 21:03, 1 July 2011 (UTC)

- The membership of the committee was largely put together by me, but informed by many other people. It's also not totally done yet, so if someone has interest, I'd love to talk to them. I've added a couple of Germans, and you're right that the southern hemisphere is underrepresented. You are, of course, correct in everything you say there, but we're going to try out the infrastructure that's being built for multilingualism by the fundraising team and hope that helps to cover some of the gaps. We will be calling heavily on the translators, as always. Philippe (WMF) 21:36, 1 July 2011 (UTC)

- Geez, let's put in a token German and a token Iranian. The whole "committee" is a joke. It consists mainly of people of Anglo-Saxon heritage who will decide for the rest of the world what they can see. My oh my. In the last 10 years living outside of my own country I have started to hate native English speakers. They are arrogant and think that simply because they speak English they are more than the rest of the world. And now we get a committee of mostly North Americans deciding for the other 4.5 billion people in the world .... great. Just fucking great. Waerth 02:23, 2 July 2011 (UTC)

- I don't think we're doing a "token" anything. There was a concerted attempt to bring in people of varying backgrounds who also brought skills in helping with the election. I find it unsurprising that some of the people who came to our attention were Germans, given the size of the German language Wikipedia. It's disrespectful to imply that they are tokens: they're not. They're intelligent people who bring a great deal of value. The same with Mardentanha, who - in addition to being Iranian - has been a member of the Board of Trustees steering committee and thus brings practical knowledge to the table. Philippe (WMF) 11:46, 3 July 2011 (UTC)

- Waerth, I don't think you understand the proposal. The committee has one job. The committee's job is "Find out whether people want a user preference so that they can decide what they see on their computer".

- The committee does not "decide for the rest of the world what they can see". They may decide whether we will add one more button to WMF pages, but you would be the only person who decides whether to click that button on your computer. I would be the only person who could decide whether to click that button on my computer. The committee does not decide "for the rest of the whole world what they can see". WhatamIdoing 19:06, 5 July 2011 (UTC)

- You are wrong. Whether or not to do it was already decided. We can only elect other board members for the next term, who promise to revoke this motion. --Eingangskontrolle 20:31, 17 August 2011 (UTC)

As a suggestion: try including an invitation to join the committee within the announcement itself - and particularly the translated versions of it. Saying "if someone has interest, I'd love to talk to them" is fantastic - but not particularly useful for diversifying the languages spoken by the community if it's only said in English. It's also rather hidden if it's just said on this page... Mike Peel 20:26, 4 July 2011 (UTC)

Cost

Will the information provided include also an estimate of the costs the WMF will need to face to implement the filter? Nemo 07:35, 2 July 2011 (UTC)

- A fair question, since one of the tradeoffs may be between a quick inflexible solution that helps some users but frustrates others, vs. a better solution that takes more energy to develop. I'd like to see such an estimate myself (of time involved, if that's easier than cost). –SJ talk | translate 11:42, 3 July 2011 (UTC)

- I'll see if we can get a scope for that, yes. Philippe (WMF) 00:36, 4 July 2011 (UTC)

- Correct me if I'm wrong, but I thought there was a US law that limits publication of prices for services. --95.115.180.18 22:11, 1 August 2011 (UTC)

- No, there's not. It would be a violation of the business' free speech rights. The closest thing we have to that is that the (private) publishers of the phone books usually refuse to print prices, because they're worried about fraud/false advertising. WhatamIdoing 22:43, 14 August 2011 (UTC)

Today we have severe problems with the servers; the money would be better spent there. If someone feels the need for such a tool, he should develop it and offer it as an extension of Firefox. No money from the donations for free content to such an attack against free content. --Eingangskontrolle 15:21, 16 August 2011 (UTC)

- I think every developer and ops person at the WMF (and every volunteer) agrees with you that we would rather be doing other things. However, in practice, the question we need to answer is: is it easier to achieve our goals with an opt-in image filter, or without one? The alternative could be that many libraries and schools would, sooner or later, block Wikipedia and Wikimedia Commons. That said, I think the thread OP is totally right, we should get a work scope here. NeilK 18:01, 16 August 2011 (UTC)

- The proposed solution is not that great for schools and libraries (or indeed anyone) that want to censor. The user can change the settings, which is the whole idea. Rich Farmbrough 22:30 16 August 2011 (GMT).

Voting process

Transparency of voting

Please consider using "open voting" in such a way that we can see/validate the votes, such as in steward elections. I do not trust a third party as currently proposed.

Also, please consider placing a sitenotice. I do not believe that VP posts are enough to inform every member. NonvocalScream 20:47, 4 July 2011 (UTC)

- Open voting works well for voting from Wikimedia user accounts, but I would imagine it won't work as well for votes from readers (assuming that readers will also be eligible to vote - which I would view as a prerequisite). Posting a site notice (along the lines of the fundraising banners) should also be a prerequisite to this referendum in my opinion. Mike Peel 20:54, 4 July 2011 (UTC)

- You're moving too fast. :-) The only thing that's been published so far is the call for referendum, which has been posted to village pumps and mailing lists. That is not the only notice we are going to be doing throughout the process — it's the first of many. We will be using a CentralNotice during the actual vote period, as far as I know. Cbrown1023 talk 01:22, 5 July 2011 (UTC)

- Voting will be verified as usual for the Board of Trustees elections (meaning, the committee will verify them using SecurePoll). The third party will not be responsible for that. The third party, incidentally, is Software in the Public Interest, as usual. Philippe (WMF) 01:23, 5 July 2011 (UTC)

- Ok, I understand Cbrown's remark and I'm comfortable with that response. Could you help me understand the advantage to using the closed voting system, versus the open one? NonvocalScream 04:17, 5 July 2011 (UTC)

- It's the same advantage that convinces us to use secret ballots in the real world: A greater likelihood that people will freely vote for what they individually believe is best for themselves and the group, without fear of public censure or retaliation. WhatamIdoing 19:27, 5 July 2011 (UTC)

- And automatic checking is less time-consuming than hunting for voters with their second edit, etc. Bulwersator 06:49, 7 July 2011 (UTC)

Ability to change our vote

I see no hint in my Special:SecurePoll/vote/230 page that once I have voted I'll be able to come back and change my vote. I'd like to be able to do that in case I hear more discussion which changes my mind. Without that guarantee (which should be stated, since it is not the way most real elections work), folks may delay while they research the vote, and end up forgetting to vote. Can you change the ballot to make it clear one way or the other? Nealmcb 18:01, 17 August 2011 (UTC)

You can come back. You will get a warning message and you can vote again. The final vote will overwrite older ones. --Eingangskontrolle 20:43, 17 August 2011 (UTC)

Status of referendum text

There's less than a month until this vote is supposed to start (August 12). A few people have asked to have some advance notice on the text of the referendum prior to the vote (plus I imagine it needs to be translated). When will the text be available? --MZMcBride 07:00, 19 July 2011 (UTC)

- Hopefully in the next 24 hours, if all goes well. Thanks :) Philippe (WMF) 18:53, 19 July 2011 (UTC)

Vote/Referendum Question

When can we see the proposed wording of the question? Kindly, NonvocalScream 17:43, 23 July 2011 (UTC)

- You've probably already seen it, but: Image filter referendum/Vote interface/en. Cbrown1023 talk 18:56, 15 August 2011 (UTC)

Suggest splitting question

I would suggest splitting "It is important that the feature be usable by both logged-in and logged-out readers." into two questions: one asking about logged-in readers, the other asking about logged-out readers. Otherwise, what would anyone that thinks that the feature should only be enabled for logged-in readers vote? Alternatively, if this question is intended to ask "should this feature be enabled for logged-out as well as logged-in users?", then the phrasing should definitely be improved... Mike Peel 21:10, 23 July 2011 (UTC)

- suggestions welcome :) the latter is what was meant. Basically, you can do a lot with account preferences, as you know; it takes a bit more hackery to do it for anons... but that's 99% of our readers, who are the target audience. -- phoebe | talk 18:45, 31 July 2011 (UTC)

- Actually, it's all of us on occasion, because you can't stay logged in for longer than 30 days. That means that even the most active user is going to have at least one moment every 30 days when s/he is logged out. WhatamIdoing 19:23, 4 August 2011 (UTC)

- I arrived here with the same question that Mike Peel asked. Votes based on the current wording may not produce as clear results as if it were split into two questions. Rivertorch 05:01, 10 August 2011 (UTC)

- I agree with Mike Peel, that question should be split in two. -NaBUru38 18:40, 15 August 2011 (UTC)

- I guess it's too late to implement this change now, which is a shame. Would anyone on the committee be able to explain why this wasn't implemented? Mike Peel 19:11, 16 August 2011 (UTC)

- I note that this has been archived without any response posted to my question. I'm very disappointed by this. Mike Peel 23:28, 10 September 2011 (UTC)

- The committee didn't have control over the instrument... they didn't write it, and didn't feel free to alter it, rightly or wrongly. I think I can safely say that there are a number of things on it that they would have changed if they felt like they could. Philippe (WMF) 01:08, 11 September 2011 (UTC)

Quantification of representation of the world-wide populace

It would be very useful and interesting to quantify what fraction of different countries hold that this is an important (or unimportant) feature, particularly if it turns out that the countries with low representation in this poll and in Wikimedia's readership believe that this is a key issue. It is crucial that this is quantified given Wikimedia's pre-existing biases, which could mean that the poll will simply measure the selection bias present in those questioned.

If e.g. it turned out that American (or British/European) editors thought that this feature was unimportant, but that African/Asian cultures thought it was very important, then it would obviously be very important to implement it for Wikimedia's future growth (even if that implementation happens on a geo-located basis). If the vote came back simply saying that this was not important to Wikimedia's current editing populace, then it could be argued that this just shows a western bias rather than presenting meaningful results. Mike Peel 21:36, 23 July 2011 (UTC)

- I totally agree. I hope we can figure out a way to measure this while keeping anonymity etc. Side note: this is a complex (but hopefully solvable) type of problem -- how to take a global, representative referendum on any question that affects the projects? this is something we need to figure out how to do better, now that we are collectively so big. I'll be happy if this referendum is a test for good process. -- phoebe | talk 18:32, 31 July 2011 (UTC)

- Similarly, although perhaps somewhat less importantly, if the feature is strongly supported by women, then I hope that fact would be considered relevant to Wikipedia's future growth. WhatamIdoing 19:29, 4 August 2011 (UTC)

Transferring the board

Maybe it could be wise to transfer the board to a more neutral country like Switzerland? aleichem 23:45, 24 July 2011 (UTC)

- ha :) which board? The WMF board doesn't actually really have a home (members are from the US, Europe & India at the moment); but we do govern the WMF which remains US-based. At any rate, the board's deliberations are more influenced by wikipedian-ness than they are by nationality :) -- phoebe | talk 18:29, 31 July 2011 (UTC)

- which remains? on what authority? aleichem 01:31, 7 August 2011 (UTC)

- The Wikimedia Foundation is a legal entity located in the USA, moving it to Switzerland would mean moving it to a country with laws much closer to European Union Privacy law. I for one am not convinced that this could be done without deleting a lot of information we hold on living people. The Foundation is ultimately governed by a board and several members of the board are elected by the community, either directly or via the chapters. So I suppose that if the community wanted to move the legal registration from the USA to Switzerland or indeed any other country the first thing to do would be to present a case for this and the second thing would be to elect board members who favoured the idea. At the moment I think that is a long way off, and judging from the recent elections there is little if any support for fundamental change to the WMF. WereSpielChequers 08:07, 9 August 2011 (UTC)

Inadvertent overwrite with telugu translation

Admins: Please restore the English version. Sorry for the inadvertent overwrite. -Arjunaraoc 12:01, 25 July 2011 (UTC)

- You don't need to be an admin to revert changes. ;-) I've reverted the page back to the English version. Mike Peel 13:07, 25 July 2011 (UTC)

What does this mean?

The last question: "It is important that the feature be culturally neutral: as much as possible, it should aim to reflect a global or multi-cultural view of what imagery is potentially controversial."

Does this mean that the categories would be drawn according to some kind of global average? Does it mean more categories would be created otherwise? I don't know what effect a vote either way would have on development. Wnt 19:49, 30 July 2011 (UTC)

- We were thinking, with this and a few other points, of sort of a straw poll on what principles are most important. I'm not yet sure what it would mean specifically for design, but the answer to this would help everyone know what's important to the wikimedia community at large (I can assume that many people agree with this principle, for instance, but not everyone does -- I expect a truly global standard would offend both those who think there is nothing controversial in our image collections, and those who think there is a great deal of controversial material). -- phoebe | talk 18:27, 31 July 2011 (UTC)

- I take a culturally neutral feature as being one that can be tuned to suit people of many diverse cultures: some might choose to filter out images that I'm happy to look at, others might choose to see photos that I'd rather not see. That means a much more sophisticated filter than a simple on/off button, and consequently so many user-chosen lines that there is no need to debate where one draws the line. If instead we were debating a simple NSFW option of excluding material deemed Not Safe For Work, then yes, we would need to work out a global average of prudishness. But we aren't, so we don't need to. WereSpielChequers 07:54, 9 August 2011 (UTC)

- Given the complexities of designing a system to meet fine-grained requirements, the conclusion follows that we should have no such system at all. If anyone wants one, they can design their own at the browser level, and the WMF should take the neutral position of neither helping nor hindering them. Nothing has to be done or developed here. It's the business of other entities entirely. (The only other logically consistent position is to regard freedom of expression as such an absolute good that we should interpret our role as hindering the development of any censoring apparatus. It would still be legal under the CC license, of course, just that we would arrange the system to make it as difficult as possible. This is what I myself would ideally want, but I accept the need to make our content accessible to those with other views than my own, and consequently I accept not actively hindering outside filtering.) DGG 03:33, 17 August 2011 (UTC)

IP editors

The current mock-ups show the edit filter option appearing even if you are logged out. I'm uncomfortable about this as it implies that one person using an IP could censor the viewing of others. I would be much less uncomfortable if this was cookie-based, so I could make decisions as to what appeared on my PC. I don't like the idea that the last person to be assigned my IP address gets to censor my viewing. So my preference is that filters are only available if you create an account and log in, or via a session cookie on that PC, not a permanent cookie. WereSpielChequers 04:49, 9 August 2011 (UTC)

- I believe it would be handled based on cookies, not based on an IP address. You're right that having the settings associated with an IP address just wouldn't make sense. Cbrown1023 talk 21:16, 9 August 2011 (UTC)

- Thanks for the explanation. I can see an advantage that schools will be able to set a filter, but of course anyone will be able to change the filter for the whole school IP. Libraries and internet cafes will be in a similar situation. I foresee this leading to lots of queries. WereSpielChequers 14:58, 10 August 2011 (UTC)

Please be sure to release ballots

The data is invaluable. To 'announce the results', release the full dataset, minus usernames. Subtle trends in the data could affect the implementation. E.g. if target countries such as Brazil or India overwhelmingly want this feature, that alone might be a good reason to build it. --Metametameta 10:03, 10 August 2011 (UTC)

- Well, I'd certainly like to see the (properly anonymised, of course) dataset, if only because I'm a sick person that enjoys analysing stacks of data. Disagree with your example though, the opinion of someone in India or Brazil should not be considered any more important than the opinion of someone in Zimbabwe, Australia, or Bolivia (to give some examples). Craig Franklin 08:03, 16 August 2011 (UTC).

- Some countries will undoubtedly have skewed results because they restrict (or prohibit altogether) visiting Wikipedia. In this case, their sample size will be smaller on average per capita. At the same time, those with the ability to circumvent the government blocking of "sensitive" websites will no doubt vote against this image filter because they will view it as oppressive. OhanaUnitedTalk page 15:30, 16 August 2011 (UTC)

- I would disagree. Yes there will be some who are like that, but there will also be others who realise that if this happens, the government might be nicer and allow it (and just block the images).

Referendum start moved to Monday, August 15

The start of the referendum has been moved to Monday, August 15, and all of the other dates will be bumped by around 3 days. Instead of rushing to put the technical aspects together quickly, we decided it would be better to just push the start date back a little and do things right. Updated schedule:

- 2011-08-15: referendum begins.

- 2011-08-17: spam mail sent.

- 2011-08-30: referendum ends; vote-checking and tallying begins.

- 2011-09-01: results announced.

This gives us more time to finish up the translations and look at the proposals. :-) Cbrown1023 talk 19:01, 12 August 2011 (UTC)

- Hi. I've got some questions in IRC regarding why the referendum has not started today as scheduled and I can't find any information on-wiki regarding this. Any ideas? Best regards, -- Dferg 15:34, 15 August 2011 (UTC)

- Generally we are just waiting on the rollout of notices and making sure the voting software is coordinated... I think we are suffering from having people in lots of different time zones, so eg. while it has been the 15th of August for a while in some places, it is only just still 9:00am in San Francisco :) We are planning to get it out *sometime* today. I don't know if there's been any other technical delays. -- phoebe | talk 16:32, 15 August 2011 (UTC)

- Indeed. It's going to start sometime today. :-) Cbrown1023 talk 16:38, 15 August 2011 (UTC)

Sigh ... I guess I'll be dismissing more global notices over the next two weeks :/. Please no big banners. ←fetchcomms 19:16, 15 August 2011 (UTC)

Self censorship is fine, thus this is a great idea

Hopefully this will decrease attempts by people to censor content seen by others, such as we see here [1] Doc James (talk · contribs · email) 21:32, 14 August 2011 (UTC)

- Precisely. I can't understand why so many people are opposing a plan that will increase users' freedom to avoid content they don't want. Nyttend 04:51, 16 August 2011 (UTC)

- Because it can and will be abused by censors (there is nothing easier than to inject a cookie saying "I don't want to see a specific kind of content" at the firewall level, which will have nothing to do with user preferences). Ipsign 07:13, 16 August 2011 (UTC)

- The so-called 'self-censorship' label is a disingenuous mask since it also involves the completely subjective tagging of images. As noted previously, if the project is implemented, then "....welcome to image tag wars", where dozens or hundreds of editors will argue whether an image is or is not sexually explicit, is or is not violently graphic, is or is not offensive to gays/lesbians/women/Asians/blacks/whites/Jews/Muslims/Christians etc.... And the proposed categories for tagging will naturally morph over time with mission creep driven by fundamentalists who will take it on their own to decide exactly what should be tagged on Wikipedia.

- The simple solution: if you're a fundamentalist who is offended by legal images permitted under Wikipedia's non-censorship policy, then start your own fundamentalist wiki and stay there. Fundamentalist driven tags applied to images to permit 'self-censorship' today are but two steps removed from compulsory censorship implemented by state legislators and tin-pot dictators. Fork off your own encyclopedia and implement all the image tags you'd like over there –just don't call it Wikipedia. Harryzilber 05:02, 18 August 2011 (UTC)

- Well my concern at this proposal is quite simple: how will the filters be implemented? How will images be determined to fall into a given category? Consider Jimmy Wales' misguided attempt to delete "pornographic images" from Commons, which resulted with him selecting several acknowledged works of art -- & amazingly, none of the images of penises. The reason filters haven't been implemented yet, despite on-&-off discussion of the idea for years, is that no one has proposed a workable way to separate out objectionable images -- how does one propose criteria that define something, say, as pornographic without at the same time including non-pornographic images? (Dissemination of information about birth control in the early 20th century was hampered because literature about it was treated like pornography.) Explain how this is going to work & convince me that it is workable -- then I'll support it. Otherwise, this proposal deserves to be filed here & forgotten. -- Llywrch 06:05, 16 August 2011 (UTC)

Self Censorship?

For decades people have fought to free themselves from dictatorial censorship and to be able to offer a complete, secular education to everyone. How can it be considered here that there are "controversial images"? In such a case the article should be revised, provided, of course, that this does not mean manipulating history. I do not consider it acceptable, for example, to censor historical images of war, sex education, photos of native communities, or images of rebellions and torture. In that sense, each person is responsible for what they want to see, and can set up parental controls over images on their own computer or mobile phone. If the problem is children... [parents] will be afraid because they do not feel able to give an explanation appropriate to the children's age, so they should seek a professional to help them talk it through. It is the children who most need to understand the world around them and their origins, in order to build a better future, more united in peace. Do not leave them in ignorance; that is the goal of this project: knowledge within everyone's reach, free of sick censorship.

Waste of time

Here are the comments I made in my vote:

There is very little to be gained if this feature is introduced. The way Wikipedia is written, it would be unusual for offensive images to appear where they are not expected. To quote the example used in this poll, a reader will not get a picture of naked cyclists when viewing the bicycle article. Editors generally use images that are applicable to an article, since they are conscious of the wide range of sensitivities of Wikipedia's readers. Images that are offensive to some may be present in an article about some aspect of sex, for instance, but in these cases the reader may be unwilling to view the article in the first place.

Implementing this proposed feature will place yet another burden on already overworked editors. Rather than placing this burden on editors, the vast majority of whom are volunteers, a better option is to have a clear warning on every page stating that Wikipedia is not censored in any way. This could easily be added to the footer, which already contains other disclaimers and appears on all Wikipedia pages.

If parents or guardians wish to control the browsing habits of children in their care, they can use other means of control such as supervision or content-filtering software.

The internet contains huge amounts of easily accessible material that is considered by some to be offensive. Those who are offended by certain images must take responsibility for how they browse the internet in order to avoid offence. Alan Liefting 07:11, 16 August 2011 (UTC)

- You may think that it makes sense not to put naked cyclists in a bicycle article, but situations like that are not at all uncommon. Did you know that the German Wikipedia once ran w:de:Vulva as its featured article of the day and included a picture of a woman's vulva right there on the Main Page? Any reader who went to dewiki's Main Page saw that image. Just because we both think something like that isn't the best thing to do doesn't mean it won't happen. As for putting burdens on editors, the feature doesn't require doing anything new. It's designed to work with our existing category system, and people already maintain that category system. Cbrown1023 talk 15:21, 16 August 2011 (UTC)

- About w:de:Vulva as the German article of the day: an endless discussion (de) and bad press (de) were the result, which led to interest in this proposed image filter. --Krischan111 23:40, 16 August 2011 (UTC)

What does "culturally neutral" mean?

I'm afraid I don't understand what the questionnaire means by the words "culturally neutral" and "global or multi-cultural view".

Some readers will probably think that "culturally neutral" means that something is applied to all persons in the same way, regardless of their particular culture, so that a "culturally neutral" filter would never filter any image on the grounds that it may offend a reader's culture; e.g. a "culturally neutral" filter would never filter pictures of Mohammad (at least, this is what I thought when I first read the questionnaire today).

However, I now suspect that "culturally neutral" here has exactly the opposite meaning: that a filter takes account of the reader's culture, whatever that culture is, so that a very "culturally neutral" filter would allow people to choose not to see pictures of Mohammad.

The same applies to the meaning of "global" and "multi-cultural". I would think a "global" encyclopaedia provides the same knowledge, content and pictures to all readers, regardless of their particular location, country, or culture. However, in the questionnaire, "global or multi-cultural view" may mean the exact opposite: that a "global" and "multi-cultural" encyclopaedia would provide each reader with a different kind of knowledge, content and pictures, adjusted to their location, country, or culture.

So I think the wording of the questionnaire is highly misleading, if it is comprehensible at all. --Rosenkohl 13:08, 16 August 2011 (UTC)

Tired of censorship

Many are becoming increasingly concerned about the range of unpleasant or explicit material available on the internet. However, many are also becoming rather concerned about the limitations on free speech and the growing acceptance of censorship. How does one reconcile these two? I acknowledge that Wikipedia is a community, but it is also an encyclopaedia.

When I was in school, I remember watching Holocaust documentaries, etc. It was important to have seen these things, however unpleasant. It was important to our education that we could understand. Had these images been censored, we would have felt cheated of a proper history education. If censorship of that kind is not practised in a school, I dread to imagine the day it is accepted in an encyclopaedia.

We must consider the context of these images. They exist in an encyclopaedia (albeit online) and are almost certainly relevant to the subject. Some images may be unpleasant, but we shouldn't simply filter them out because of that. If a child were to look in a printed encyclopaedia, they might find the same material. Equally, there are academic books and journals available in every library containing the most horrific of images, but they are included because of their educational value. Filtering out relevant (or potentially highly relevant) images seems to be more harmful than helpful.

Whilst this would only affect those who choose to censor themselves, we are still permitting censorship and promoting ignorance. If the image is relevant to the subject, then the end user is placing restrictions on their own learning and filtering out content because of personal intolerance. Wikipedia aims to educate people, but it can't do that by filtering out their access to useful information (in the form of images), regardless of whether people make that choice. To paraphrase Franklin, those who would give up essential liberty don't deserve it.

Alan Liefting makes a good point. Why not simply have a content warning? If one watches a documentary, there is often a warning that the piece contains sex, violence, etc. The viewer is advised to use their discretion in watching the documentary, but the content is certainly not censored. Why should an encyclopaedia be any different?

Wikipedia was built on ideas of freedom of speech. Perhaps it's about time we defended it and took a harder line on censorship. If parents or particular institutions wish to block content, they are free to do so through various software, DNS services, etc. If they want to censor content, that's their decision, but we shouldn't be doing it for them. Zachs33 11:15, 16 August 2011 (UTC)

- I agree with you that sometimes people need to see certain things, and that we shouldn't exclude an important image just because it might offend some people. That's why this feature is good: if someone decides at some point that they don't want to see naked images or images of violence, they don't have to. If they then found themselves at "Treatment of concentration camp prisoners during the Holocaust" and noticed that there was a hidden image of some naked prisoners being hosed down, they could make a conscious decision to see that image. They'd read the caption and be like, "Okay, I hid this by default, but I want to see the image in this case." A little bit of a delay removes the surprise and empowers the reader to choose what they want to see. It's not our job to force them to see that, but it is still our job to make sure it's there for them if they're interested. It will always be clear exactly where an image is hidden, and it will always be easy to unhide a specific image. Cbrown1023 talk 15:30, 16 August 2011 (UTC)

- There's an old expression: "freedom to choose". It's often true to say that there is freedom in choice, but it's not always realistic. Should we be encouraging users to censor information from themselves? An image is a form of information. What about textual content that describes unpleasant torture or treatment of Jews? Should users be able to choose to filter out unpleasant paragraphs? Of course, this would not be feasible, but would we support such a change if it were possible? Of course not. The Blindfolds and stupidity? topic (among others) discusses self-censorship and the reality of restraint in such a choice. I find the idea of filtering 'controversial content' rather frightening. I'm sure there are many Holocaust deniers on the internet. The potential for content of any kind (text, image or otherwise) to be flagged as controversial because of such people is far more offensive than any image. Many users have jumped into this discussion out of principle. We're talking about the principle of free speech and the potential problems should we permit such a proposal. Zachs33 16:48, 16 August 2011 (UTC)

- My stance on the issue is to allow the ignorant to remain in ignorance if they so choose. Taking the Jewish torture example, imagine someone wishes to remain ignorant of Jewish torture. There are two possibilities: 1) we leave Wikipedia as it is, and this person avoids Wikipedia because they know it contains content about Jewish torture. Perhaps they use Wikipedia for a while, but then they see something about Jewish torture and are immediately repelled. Or 2) we provide a filtering tool, and the person uses Wikipedia with the tool to filter out Jewish torture content. The person may occasionally see an area that is blocked out and says "this content is hidden by your filtering preferences". They probably know that it is content about Jewish torture, but they are not repelled because the content itself isn't there.

- By providing the filtering tool, we increase the likelihood that this person will continue to use Wikipedia. You might say the ideal situation is to force this person to understand the truth about Jewish torture, and that may be true. But option #1 will not provide that outcome. Option #2 is, imho, the lesser of two evils, and a step closer to the ideal. B Fizz 18:53, 16 August 2011 (UTC)

- You have a point in that we don't want to become too paternalistic, but I'm not sure it's fair to use the word 'force' in this context. A primary aim of Wikipedia is to educate. If someone does not use Wikipedia because, in reading an article, they wish to selectively ignore potentially entire elements of that article, there is perhaps no hope in educating them. In the context of your example, we're talking more about intolerance than ignorance. We should perhaps tolerate intolerance, but we certainly shouldn't encourage it (by providing a mechanism such as the one proposed). If they want to read through an encyclopaedia crossing out the parts they don't like, one wonders why they're reading it in the first place. Zachs33 20:15, 16 August 2011 (UTC)

- Is it so bad to be intolerant of human suffering? The photo at the head of the smallpox article, for example, is probably extremely important for a certain audience (people who have never seen the disease and need to be able to identify it), but I'm guessing a large portion of the readership gains nothing of value from the image and would rather not have the nightmare fuel. I bet many medical images are actually being left out of articles because an easy way to opt out of that kind of content doesn't exist. 68.103.144.192 07:15, 18 August 2011 (UTC)

Translation problems

Although the Ukrainian translation of the questions is ready, this page is not in Ukrainian. How can this be fixed? --A1 11:30, 16 August 2011 (UTC)

- Thanks for translating, A1! The vote interface translations do not show up automatically, unfortunately. They are routinely exported from Meta-Wiki and imported to the voting site. The Ukrainian translation will be included in the next export. Cbrown1023 talk 15:31, 16 August 2011 (UTC)

- Could the same be done for the French translation? It was probably produced with "Google Translate" or a similar tool, and French-speaking sites currently show this insufferable translation. Thanks in advance. Loreleil 10:22, 17 August 2011 (UTC)

Voting instructions

The voting instructions say "Read the questions and decide on your position." But the main questions I see are "Why is this important?", "What will be asked?", and "What will the image hider look like?", and I don't think I need to form a position on these. It's very confusing. Am I voting on how much I support or oppose each of the bullet points in the "What will be asked?" section, or on how much I support or oppose the proposal as a whole? It's rather unclear. Either way, these are not so much "questions" to "read" as proposals (or principles) to support or oppose. Could this be reworded for clarity? Quadell 11:33, 16 August 2011 (UTC)

No, the acclamation has already started. There is no "no" option; you can only express that it is not important. --Eingangskontrolle 15:04, 16 August 2011 (UTC)

- The guiding principles in the What will be asked? section are what you will be commenting on. Cbrown1023 talk 15:32, 16 August 2011 (UTC)

Another blow against freedom

A reason given for this change in your introduction is "the principle of least astonishment". In four years of using Wikipedia several times daily I have never accidentally come across an image that astonished me. That's a pity. Astonishment is a valuable ingredient of learning. I fear that the people who are pressing for this change are not those who are afraid they may see a picture of a nude cyclist (an example that you give), but those who wish to prevent others from this. It won't work. My mother, an astute psychologist, forbade me to read any of the books in my brother's room as they "were too old for me", with the result that I had read them all by the age of ten. Labelling an image as hidden will only entice people to look and find out why. When the people pressing for the change discover that it doesn't work, they will press for stronger controls. And then for even stronger controls. Freedom is surrendered in a series of tiny steps, each of which appears innocuous on its own. No doubt you will assure readers that you won't follow that path. You won't be able to keep that promise. Once on the slippery slope it's very difficult to get off. Apuldram 12:01, 16 August 2011 (UTC)

What does the referendum mean?

The official start of censorship in Wikipedia. --Eingangskontrolle 12:31, 16 August 2011 (UTC)

- Don't be naive. Conservatives won't be offended by evolution enough to opt out of being forced to view illustrations or evidence of it. This is about penises:

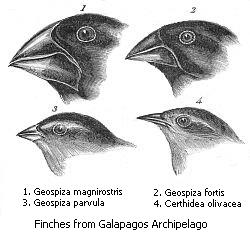

thumb|center|300px|Something children should have the freedom to choose not to view.

- Michaeldsuarez: No doubt you are tempting users to remove the image to prove your point. I don't particularly wish to see the image and am certainly tempted to remove it (although I shall refrain from doing so), but that's because I don't wish to view the kind of content to which it belongs. If I were looking at articles on masturbation techniques, to which the image belongs, I would have no problem with the image. A child will only come across the above image if viewing a relevant article, which will be of an explicit nature, both in images and in text. My only problem with the image is that it does not belong on this talk page as it lacks relevance. I understand your point, but it's rather weak. Zachs33 13:44, 16 August 2011 (UTC)

- This is a free image, not a fair use image. If I wanted to, I could post 50 of them on my userpage. It's more relevant than the finch image inserted earlier. There are ways for children to wander onto enwiki's masturbation article. There are links to that article from the "marriage" article, the "Jock" disambiguation page, the "List of British words not widely used in the United States" article, etc. The only image I've ever removed from an article was a drawing of a little girl by a non-notable pedophilic DeviantART user. I'm not against images such as the one I've embedded above. I won't be censoring myself. I'm an Encyclopedia Dramatica sysop. What I'm against is imposing my will and beliefs on others. Users ought to have a choice. --Michaeldsuarez 14:00, 16 August 2011 (UTC)

- I've seen much tamer images deleted from Wikipedia articles; when people don't like an image, they start with the whole "what does it add to the article" line. Heck, an image of a brown smear was removed from en:santorum (neologism), though the mastergamers there managed it by repeatedly extending article protection until it was deleted as an unused Fair Use image. I've also seen much tamer images deleted from userpages (e.g. Commons:User:Max Rebo Band). So this is pretty much a strawman as I see it. Wnt 14:32, 16 August 2011 (UTC)

Hello, I've changed one file in this section to an internal link. I don't believe this file is offensive or off-topic, but I think it is distracting for the purposes of this talk page. Since I started two new sections further up this talk page today (before this file was added), I hope there will be a better chance of useful responses if the file is not displayed directly. Greetings, --Rosenkohl 14:37, 16 August 2011 (UTC)

- Sigh. Actually I thought it was pretty good bait. And there are people who thought it was unacceptably indecent for Elvis to waggle his hips. There is a time coming when Adam and Eve will be able to dance through the Garden of Eden unclad and unashamed. Wnt 14:43, 16 August 2011 (UTC)

- A clear and concise image about what images this proposal is really trying to prevent from unwanted child viewage is censored, while the deceptive, scare-tactic "OMG. They're going to censor Darwin" image is to be kept? --Michaeldsuarez 14:52, 16 August 2011 (UTC)

- I chose this image because it is not obvious that it will be endangered. But it is. First we will filter the images with 99% disagreement, then 90%... then your example... and then we will mark only the images that find acceptance among the remaining board members (there will be no users left in this project). --Eingangskontrolle 21:04, 17 August 2011 (UTC)

- Well, what undermines your point is that you're using an image which is hard to use in an encyclopedia article; if you were sampling our fine (but too small) collection on Category:Gonorrhea infection in humans it would be easier to see this - indeed, in the U.S. such images are shown to young children by the government as sex education. So if your image isn't encyclopedic why is it on Commons? Because it's useful for a German Wikibooks page.[2] Now someone viewing that page probably wants to see the pictures.

- In any case, bear in mind that our opposition to this image hiding proposal doesn't mean opposition to all image hiding - I have, several times for each, proposed a simple setting to allow a user to thumbnail all images at a user-defined resolution (can 30 pixels really be all that indecent?), and an alternate image hiding proposal where WMF does nothing to set categories but allows users to define their own personal categories and lists and share them on their own, so there is no need for WMF to define or enforce categories. Also note that there are many private image censorship, ehm, "services", which a person can subscribe to, and which are in fact, alas, taxpayer-subsidized in schools and libraries. Wnt 15:11, 16 August 2011 (UTC)

Don't be naive. They (the creationists) control school districts, and they try to control the media by removing books from public libraries. And they will tag images they don't want shown. It's a shame that there was not a single "no" option in this proposal. --Eingangskontrolle 15:00, 16 August 2011 (UTC)

- Have you read any of the comments on this talk page? The opposition is extremely vocal. There are plenty of no's here. It's religion that's constantly censored. You can't have rosary beads: [3], [4]. You can't even voluntarily pray in school. I could understand forbidding mandatory school prayers, but if an individual makes a personal choice to pray for himself or herself, why should we stop them? Couldn't they give students the choice to use their recess time to either pray or play? Wouldn't that be an acceptable compromise? In addition, Creationists are far from having the ability to take over the wiki. --Michaeldsuarez 15:45, 16 August 2011 (UTC)

- Stop the madness. Let's not drag the creationism battle here. It's off topic. Philippe (WMF) 18:36, 16 August 2011 (UTC)

- Apart from objections to some medieval paintings of Muhammad, I haven't seen much effort to censor religion here. Certainly I agree that students should be able to dress as they wish, and say what they will, but what can I do about that on Wikipedia? Wnt 19:40, 16 August 2011 (UTC)

When did personal preference become synonymous with censorship?

I'm going to go against the grain here and suggest that the implementation laid out here is inadequate and wrong-headed. The principle of "least surprise" doesn't suggest that people should be given the chance to block an offensive image after it has been seen, it suggests that people not be surprised by such images. This suggests an opt-out (opting out of filtering) system, rather than an opt-in system. Anything less will not satisfy the critics of Wikipedia's use of images (who are acknowledged to have a valid point by the very development of this system).

Since images will necessarily have to be sorted into categories of offensiveness for any such system to work, it is simple to restrict the display of all possibly offensive images unless the user takes deliberate action to view them. This would be a preference for logged-in users and a dialog for non-registered readers, just as in the current proposal. An inadequacy of the current proposal is that it does not tell the user why a particular image has been hidden. I suggest that this is necessary for a reader to make the choice of viewing or not viewing a hidden image. As a quick example, suppose that an image is both "sexual" (i.e., contains genitals) and medical. As a non-registered user, I may have no problem with sexual images but may be squeamish about medical images. Although it may be apparent from context, there is no reason to deprive the user of information that helps them make an informed choice.

I understand that if the WMF were to implement an opt-out system there would be a very loud hue and cry from the same people who are here crying "censorship". Giving someone the ability to choose not to see things that may offend them is not censorship. Certain comments here read to me as though the people making the comments feel that they know better and everyone should accept their particular worldview. Clearly people are upset that others do not wish to see images of Mohammed and feel that allowing users to block those images is some form of personal defeat. The WMF will never convince the hardcore "anti-censorship" crowd and any opt-in or opt-out system will be met with equally strident reaction. The WMF needs to look past the comments of resident ideologues and examine the concerns raised by its critics and casual users. Opt-in filtering does not answer their concerns nearly as well as opt-out filtering would. Growing the userbase means attracting new users, not placating those who have become entrenched in positions that no longer serve the project.

Be brave. Implement opt-out filtering. Delicious carbuncle 14:17, 16 August 2011 (UTC)

- Personal choice is a good thing, yes. The problem with this proposal is that there are two things which are not personal choice.

- Categorization of images. The poll asks about the importance of flagging images, talks about 5-10 categories of images. But who decides whether an image is in or out of a given category, and how do they decide? The problem is, you're going to have people arguing about, say, whether a woman breastfeeding a baby, where you see a few square inches of non-nipple breast skin, is indecent or not. When these disputes escalate - or as the offense-takers get bolder and start hunting for scalps - this is going to lead to user blocks and bans. Both the arguments and the editor losses are significant costs to the project.

- Speaking of costs. We still have a pretty rudimentary diff generator that can't recognize when a section has been added, doesn't mark moved but unaltered text in some other color, etc. We still have no way to search the article history and find out who added or deleted a specific source or phrase, and when. WMF has things to spend its money on that don't require a site wide referendum to see if they're a good idea or not. There are probably hundreds of listed bugs that any idiot can see we should have devs working on right now. Wnt 14:37, 16 August 2011 (UTC)

- Wnt, I know you aren't going to block any images, so why do you care if people choose to spend their time arguing about what is or isn't too much skin? As for cost, you and I both know that this site-wide referendum is a done deal. Image filtering is going to be implemented. I am suggesting what is really a minor change in terms of implementation. I do not pretend to know what the WMF should be working on, but it has asked for opinions in this case, so I have offered mine. Delicious carbuncle 15:41, 16 August 2011 (UTC)

- As I just explained, the reason why I care is that (a) developers are probably going to waste months fiddling around with this image hiding toy, when there are more pressing tasks for them to do, and (b) soon ArbCom cases like the one you're involved in and which I commented about during its initial consideration (w:Wikipedia:Arbitration/Requests/Case/Manipulation of BLPs) will include a whole new field of controversy with people saying that so-and-so has too loose or too tight a standard when tagging images. Especially if there are 10 categories - I can't even imagine what they all would be. So we won't just be ignoring the image blocking, and you know it, because it's going to be this huge new battlefield for inclusion/deletion. This is a big step in the wrong direction, and we already have too much trouble. Wnt 19:48, 16 August 2011 (UTC)

- Oh, yes, the ArbCom case that I'm involved in. Thanks for bringing that up here, even though it isn't related in any way but might make me look bad. Especially if people thought I was the one manipulating those BLPs, which I wasn't, or that my role was anything other than whistleblower and finger-pointer, which it wasn't. I'll be sure to remember your thoughtfulness in this matter. Delicious carbuncle 20:26, 16 August 2011 (UTC)

@Delicious carbuncle: "hardcore 'anti-censorship' crowd"? Do you know what you are writing? Freedom of speech is regarded as a human right, and it is the reason a project like Wikipedia is possible. I don't want anyone, behind the scenes of the flagging process, choosing which images will be seen and which will not. If somebody has a legal problem with certain images, they should come forward and start a deletion discussion. --Eingangskontrolle 14:53, 16 August 2011 (UTC)

- Thank you for demonstrating my point. How is the tagging of an image as offensive (even if incorrectly tagged) in any way interfering with your freedom of speech? Delicious carbuncle 15:21, 16 August 2011 (UTC)

- It's not directly interfering with freedom of speech; it's interfering with knowledge and neutrality. Neutrality is the sum of all knowledge. Selective knowledge is censorship and leads to misunderstanding, prejudice and conflict. Anyone who lives in Germany knows this as a fact, any American who thinks about terrorists should also know it as a fact, and anyone living under a modern dictatorship will also know it, but has no choice other than to stay quiet for their own safety. That's how you loop back to freedom of speech, which will also suffer from blindfolds given to the speaker's audience. --Niabot 16:20, 16 August 2011 (UTC)

- The selective knowledge approach is being used extensively on Wikipedia already. Wikipedia doesn't have a "Teach the Controversy" approach. If Wikipedia teaches the controversy, then perhaps evolutionists would stop buying into the idea that Creationists and Intelligent Designers are uneducated hillbillies who rape and murder people in Hollywood horror and post-apocalyptic action films. Wikipedia is already censored, and it's affecting how people think of people they're not truly familiar with. --Michaeldsuarez 16:37, 16 August 2011 (UTC)

- If the situation is already this bad, why are we even thinking about this bullshit that would make it even worse? What's next? A Wiki for nationalists, a Wiki for terrorists, and then a Wiki for the guys who don't want to see any of the previous? Technically, we would be creating such internal divisions, and we should not be surprised if the climate deteriorates further when we draw such borders. A free mind can look at anything without being offended. It stands above that. --Niabot 16:53, 16 August 2011 (UTC)

- A lot of talk about "freedom" on Wikipedia seems to involve depriving others of their ability to choose for themselves. Delicious carbuncle 17:01, 16 August 2011 (UTC)

- If more viewpoints are taught, then people will become less arrogant about what people of other viewpoints think. The more people understand about each other, the better. Wikipedia needs to be more inclusive when considering which viewpoints to include in articles. Wouldn't it be advantageous for us to document how Creationists, nationalists, and even terrorists think and what they believe in separate sections, instead of allowing stereotypes to fill in the blanks? --Michaeldsuarez 17:09, 16 August 2011 (UTC)

- Ignorance is a choice?! In relation to creationism, the "teach the controversy" approach was refused by many science teachers in schools, on the grounds that there was no scientific evidence upon which to base creationism. I could be wrong (not really), but I believe encyclopaedias rely on verifiable sources and scientific evidence. Creationism does not fit the criteria, it's that simple. Encyclopaedias must settle on what is provable and generally accepted. If there is controversy for which reliable evidence exists, it should be covered. Wikipedia is not a platform for terrorists, creationists, holocaust deniers, liberals, conservatives or any particular group. It's an encyclopaedia and should remain as neutral as possible. Zachs33 17:25, 16 August 2011 (UTC)

- Why are you arguing about Creationism here? This is a discussion about opt-in versus opt-out for image blocking. Delicious carbuncle 17:53, 16 August 2011 (UTC)

- Sorry, I was just expanding on Niabot's idea of "Selective knowledge is censorship and leads to misunderstanding, prejudice and conflict." --Michaeldsuarez 18:57, 16 August 2011 (UTC)

I agree with Delicious carbuncle. Opt-in filtering will please no-one, and hardly seems worth the massive effort of creating and maintaining the system. It has to be opt-out, and by virtue of being opt-out, very simple. For example, if the system doesn't know the user's preferences on what to see (e.g. an anonymous user), it doesn't show images classed as problematic (with various categories of problematic so users can fine-tune preferences, but in the first instance the system needs to hide everything problematic). Instead it provides a placeholder with a textual description (probably the alt text, which we should have for visually impaired users anyway), along with a "click here to see the image" button. Simple, and without a trace of censorship: censorship takes power away from the reader, whereas this gives power to the reader. Rd232 17:59, 16 August 2011 (UTC)

- That's exactly what I don't want: a Wikipedia censored by default, with an option to enable the uncensored content. This is total bullshit and should burn in hell. --Niabot 18:22, 16 August 2011 (UTC)

- Luckily, that's exactly what's... NOT proposed. Don't conflate the two. Philippe (WMF) 18:35, 16 August 2011 (UTC)

- I'm arguing that opt-in is fairly useless, except for the sort of power users who might be involved in this discussion. WMF should commission some research (a poll?) on opt-in/opt-out, because the people involved in these discussions are entirely unrepresentative of those most affected by the choice. Rd232 18:38, 16 August 2011 (UTC)

- See discussion below on what censorship is. Hiding something a click away is not "censorship", and never will be no matter how often the claim is made. Rd232 18:38, 16 August 2011 (UTC)

- In this case it's an inconvenience. Making something less accessible than something else is like saying: this party can start showing advertisements a year before the election, and that party only one week before the election. Of course you could go to cinema XYZ and watch it there at any time. Do you now understand that it would violate any principle of neutrality? --Niabot 18:57, 16 August 2011 (UTC)

- It's every bit as accessible. The feature is off by default... you have to choose to turn it on. Philippe (WMF) 20:12, 16 August 2011 (UTC)

- And I am proposing that it is on by default. 20:19, 16 August 2011 (UTC)

- An opt-in system is as much use as chocolate dildo in the Sahara. John lilburne 20:42, 17 August 2011 (UTC)

- Niabot, your analogy is, unsurprisingly, unrelated nonsense. If an IP user wants to see images that have been blocked, all they need to do is bring up the dialog as illustrated in the mocked-up screenshots the first time they encounter a blocked image. After that, they will see the images as they choose. This is exactly the same amount of inconvenience that users face in the current proposal, except the onus has been switched from users who do not wish to see potentially offensive images to users who do wish to see those images. Delicious carbuncle 20:19, 16 August 2011 (UTC)

I am not strongly opposed to the idea of an opt-in filtering system. (Please note: my views on this issue have not solidified yet.) However, an opt-out filter strikes me as unworkable.

Delicious carbuncle writes: "it is simple to restrict display of all possibly offensive images unless the user takes deliberate action to view them". Yes, but how do we decide what images are "possibly offensive"? Images of gore, explicit sex, and the prophet Muhammad seem to clearly qualify, but beyond that it gets fuzzy. For example, should pictures of spiders be tagged as "potentially offensive" given the existence of arachnophobes?

Of course, you might argue that we can limit the opt-out filter to the "obvious" cases. But that would not work for two reasons:

- Some of the opt-in supporters will inevitably complain that such a filter does not allow them to filter everything that they'd like to filter.

- No one will agree on exactly where to draw the line with regard to "obvious" cases. Above, I listed images of Muhammad as an obvious example of "possibly offensive" material, and it does seem obvious to me. But, at least in the Western world, there are many on both the right and the non-PC left who will probably object to tagging a mere picture of Muhammad as "possibly offensive" -- not because they don't recognize that such images do in fact offend some people, but because they view the sensitivities of the people in question as an insufficient reason to have the pictures hidden by default. Likewise, many would probably object to tagging images related to evolution as "possibly offensive" -- again, not because they don't recognize that the images do in fact offend some people, but because they view the sensitivities of the people in question as an insufficient reason to have the pictures hidden by default. There are countless other cases where some images do in fact offend a subset of the population but where there will be resistance to having the images hidden by default. There seems to be no way to draw the line.

So, to sum up, I'm not dead-set against any kind of filter. But I object to an opt-out filter on the grounds that it is simply not feasible. --Phatius McBluff 20:48, 16 August 2011 (UTC)

- My statements are based on the assumption that there will be an image filter implemented and there will be tagging of images by some group (as yet unknown) using some schema (as yet unknown). There will, of course, be many arguments caused by this. My suggestion is simply that any such filter is on by default. The points you raise apply equally to an opt-in filter. Delicious carbuncle 20:59, 16 August 2011 (UTC)

- "The points you raise apply equally to an opt-in filter."

- Only somewhat. You're right that there will be a lot of arguments over what images get tagged for what category even if we go with the opt-in suggestion. However, your suggestion, if implemented, would require a whole second round of arguments about which categories (or combinations of categories, or degrees of membership in a category such as nudity, etc.) qualify as "possibly offensive" to, say, a "reasonable person". I submit that this is not the sort of value judgment that Wikimedia projects should be making, given our value of neutrality.

- However, since I am unlikely to persuade you on the above points, I will offer the following constructive suggestion: if we do implement an opt-out filter, then we sure as heck better implement an optional opt-in filter as well. Or, to put it differently, users should be able to customize their filters by adding additional categories to be blocked. How else will we avoid charges of favoritism? --Phatius McBluff 21:15, 16 August 2011 (UTC)

- Just to clarify: my understanding of the opt-in proposal was that, under that proposal, we would tag images as belonging to various (relatively value-neutral) categories (e.g. nudity, spiders, firearms, explosives, trees) and allow people to choose which categories to filter. My point is that, if we are going to have some of those categories filtered by default, then we had better allow people the option of adding additional categories to their filters. --Phatius McBluff 21:18, 16 August 2011 (UTC)

Ambiguous questions

The questions are ambiguous and must be changed; For example "It is important for the Wikimedia projects to offer this feature to readers." should become "It is important that the Wikimedia projects offer this feature to readers.". i.e. "for" has a double meaning and is thus ambiguous. AzaToth 15:18, 16 August 2011 (UTC)

- Thanks AzaToth. I don't quite follow the confusion though -- explain further? -- phoebe | talk 15:49, 16 August 2011 (UTC)

- The ambiguity here is whether it means what is important for the projects as a whole, i.e. their self-interest, or what is important for the readers. AzaToth 16:04, 16 August 2011 (UTC)

- Got it. Thanks. I'll see if we can change it. (There's a heap of translations that might be affected). -- phoebe | talk 16:13, 16 August 2011 (UTC)

- That's fine, change the question while it is being answered. And then add the option: "Don't spend any further effort on this idea." --Eingangskontrolle 16:39, 16 August 2011 (UTC)

- For the record, we are not changing the question in mid-stream. :) Philippe (WMF) 18:19, 16 August 2011 (UTC)

Blindfolds and stupidity?

I can't imagine why it should have any positive effect on mankind to declare knowledge, or visualizations of knowledge, nonexistent. I'm strongly against such a proposal, since selective or preselected knowledge is the worst kind of knowledge. Preselected knowledge is the same as censorship, even if you censor yourself. It allows human beings to build their own reality, which is very different from actual reality. On top of that, we encourage cultural misunderstanding and discrepancy. Frankly, I'm already offended by the fact that we are even talking about such idiocy. -- Niabot 15:52, 16 August 2011 (UTC)