Grants:Evaluation/Evaluation reports/2013/Editing workshops

This is the Program Evaluation page for editing workshops. It currently contains information based on data collected in late 2013 and will be updated regularly. For additional information about this first round of evaluation, see the overview page.

This report covers data from eight program leaders, for a total of 16 editing workshops. Six of these workshops came from a single program leader and allowed us to collect more data on participation and retention. The program leaders who responded to the workshop questionnaire include individuals with no chapter affiliation as well as program leaders from chapters or user groups.

Key takeaways include:

- Workshops have six priority goals centered on educating the public about Wikimedia and on recruiting and retaining diverse new contributors.

- Only five workshops (31%) reported a budget, three of those (19% of all workshops) reported a budget of zero dollars, and the majority of program leaders reported receiving donations of meeting space, equipment, materials, food, or other items. Program leaders need to improve their tracking of this information, as well as of preparation time, usernames, and event dates and times, so that their workshops can be properly evaluated.

- The data collected show that workshops with more participants tend to have a lower percentage of new users. However, we need more data on new workshops to explore this further: not all small events retain new users, but larger events appear to be less effective at retention. Experimenting with small workshops focused on a specific group could help determine this.

- As of reporting, workshops are not the most effective programs at recruiting new editors. Out of 87 identified new editors reported in this data, only two were retained after the workshops, with the average for the reported workshops being zero. While workshops are a popular way to try to engage new volunteer editors, without further data and/or evaluation along other potential metrics, it is unclear what, if any, return on investment workshops may deliver in terms of retention of active new editors. Experimenting with workshop types (series of workshops, hands-on workshops where participants actually edit, targeted group workshops), gathering qualitative data from participants (e.g., through surveys), and developing better tracking tools for program leaders could help us learn more about potential retention opportunities and attendee needs.

Program basics and history

Wikipedia editing workshops are events that focus on educating the public about how Wikipedia works and how to contribute to it.

Unlike edit-a-thons, workshops don't always have a hands-on editing component. Workshops, which can last from 1–2 hours to an entire afternoon, are generally hosted in public venues such as universities, libraries, or community centers. Workshops can be held for broad groups, such as academics and students, or for more specific audiences, such as women or the elderly. They might take place at major conferences, or even online through webinars and online conferences.

Workshops are often facilitated by experienced Wikipedians, who present the history of Wikipedia and its mission, and then cover the basics of how to edit, what the policies are, and perhaps additional information about the culture and community. Pamphlets might be given away to serve as references for participants, and some workshops even give certificates to participants.

Editing workshops are one of the oldest outreach events in the Wikimedia community. In 2004, Italian community members were hosting their first workshops to educate the public and inspire people to participate in Wikipedia and related projects. After 2004, editing workshops quickly expanded to other countries. Today, Wikipedians around the world invite the public each month to learn why contributing to Wikipedia is important and how to do it.

Data report

Response rates and data quality/limitations

- Eight program leaders submitted data, and we mined data for six additional workshops.

Program leaders voluntarily submitted data for ten workshops. One program leader sent us cohorts, dates, and details about six additional workshops.

We were able to use that information to mine additional data. As with all the program report data reviewed here, data was often partial and incomplete. Please refer to the notes, if any, in the bottom left corner of each graph.

Report on the submitted and mined data

Priority goals

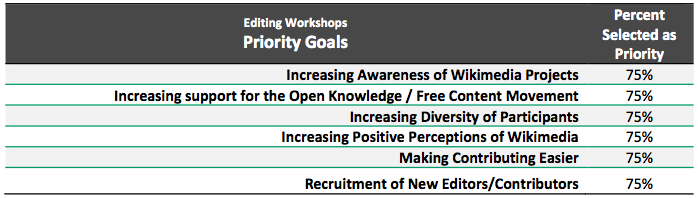

- According to respondents, workshops have six priority goals.

We asked program leaders to select their priority goals for workshops. We provided 18 priority goals with an additional 19th option to report on "other" goals. They could select as many or as few as they saw fit.

All eight of the program leaders who reported on workshops selected priority goals for their workshops. Those eight selected between five and 16 priority goals for each of their events.[1]

Our team noted six standout goals that appeared as priorities among the reporting program leaders (see table below):

Inputs

- In order to learn more about the inputs that went into planning workshops, we asked program leaders to report on:

- The budget that went into planning and implementing the workshop.

- The hours that went into planning and implementing the workshop.

- Any donations that they might have received for the event: a venue, equipment, food, drink, giveaways, etc.

Budget

- Only five of the 16 reported workshops included a budget.

Out of 16 reported workshops, five (31%) reported a budget, and three of those (19% of all workshops) reported a budget of zero dollars.

The budgets reported ranged from $0.00 US to $750.00 US.

Donated resources

- Donated resources are important for workshops, with meeting space being the most commonly donated resource.

Several program leaders reported that they received donations to help implement their workshops.

The most commonly reported donation was meeting space (70%), followed by materials/equipment, reported by 50% of respondents. Food and prizes/giveaways were each reported for only one event (see Graph 1).

Meeting space and materials/equipment were the most commonly reported donated resources for workshops. Reported for 50 to 70 percent of workshop implementations, these donated resources may be key to keeping programming costs low.

Outputs

- We also asked about three outputs in this section:

- How many people participated in the workshop?

- How many people made new user accounts at the workshop?

We used these outputs, and budget and hour inputs, to ask:

- How many participants are gained for each dollar or hour invested?

Participation

- The average workshop, based on the data reported, has 12 attendees, with a little over half being new potential editors.

The number of people who attended workshops varied. Some workshops consisted of 3–4 new potential editors. Others were larger, with 10–40 participants, both new potential and experienced editors.

The average participation for workshops was 12 participants.[2]

Some events had no new editors, while others consisted entirely of new potential editors. The average workshop was made up of 52% new editors.[3]

In all, the proportion of participants who were new users ranged from 0 to 100 percent, with an average of 52%.[4]
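The participation figures above can be re-derived from the per-workshop counts listed in the appendix. The following is a minimal Python sketch, assuming the counts are transcribed correctly from the appendix tables; the variable names are illustrative and are not part of the original analysis. Workshops with a missing count are skipped rather than treated as zero.

```python
from statistics import mean, median, stdev

# (participants, new accounts) per report number, transcribed from the
# appendix; None marks a value that was not reported.
workshops = {
    16: (10, 5), 21: (40, 10), 27: (3, 3), 29: (12, 0), 34: (3, 3),
    41: (3, 2), 42: (3, 2), 60: (17, 14), 61: (17, None), 98: (15, 8),
    99: (22, 11), 100: (12, 4), 101: (30, 3), 102: (4, 4), 103: (39, 18),
}

participants = [p for p, _ in workshops.values()]

# Share of participants who created a new account, computed only where
# both counts were reported.
new_share = [n / p for p, n in workshops.values() if n is not None and p > 0]

print(f"participants: median={median(participants)}, "
      f"mean={mean(participants):.0f}, stdev={stdev(participants):.0f}")
print(f"new-user share: median={median(new_share):.0%}, "
      f"mean={mean(new_share):.0%}")
```

Under these assumptions the sketch yields a median of 12 participants and a median new-user share of about 52%, in line with the figures reported in the notes.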

- Workshops seem to cost little to nothing per participant to implement. However, we need more program leaders to report budgets in order to learn more.

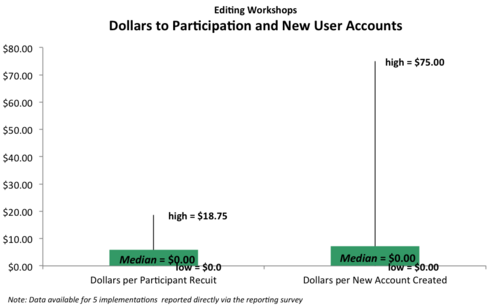

Five of the ten workshops that were reported directly by program leaders included a budget, and those five also had participant counts. We used that data to evaluate how much workshops cost to implement per participant and per new user account created (see Graph 2 and Graph 3). However, the lack of budget reporting makes it hard to determine exact costs.

Graph 2. Dollars to New Accounts Created. As illustrated in the graph, participation ranged from groups of 3 to 40 participants, which led to the recruitment of 0 to 14 new users from inputs ranging from $0.00 to $750.00. In the graph, the number of new users recruited from each workshop is illustrated by bubble size and label. The larger the workshop, the higher the number of new user accounts created; however, the proportion of new user accounts created declines. No conclusions can be drawn from the dollars spent without additional observations.

Graph 3. Dollars to New Accounts. As illustrated in the box plot, editing workshops were for the most part implemented with little or no budget. The median cost reported was zero dollars per participant and per new account created. The middle half of the reports ranged from $0.00 to $5.88 per workshop participant and from $0.00 to $7.14 per new account created.
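The per-participant and per-account costs behind Graph 3 can be sketched from the five budgeted workshops listed in the appendix. This is a minimal sketch, not the method used in the original analysis: the `summarize` helper is hypothetical, and the "inclusive" quartile method is an assumption chosen because it matches the ranges quoted in the caption.

```python
from statistics import median, quantiles

# (budget in USD, participants, new accounts) for the five workshops that
# reported a budget, transcribed from the appendix table.
budgeted = {
    16: (0.0, 10, 5),
    21: (750.0, 40, 10),
    41: (0.0, 3, 2),
    42: (0.0, 3, 2),
    60: (100.0, 17, 14),
}

def summarize(values):
    """Return (first quartile, median, third quartile) for a list of ratios."""
    # method="inclusive" is an assumption; other quartile conventions
    # would give slightly different bounds on such a small sample.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q1, median(values), q3

per_participant = [budget / p for budget, p, _ in budgeted.values()]
per_new_account = [budget / n for budget, _, n in budgeted.values()]

for label, values in [("participant", per_participant),
                      ("new account", per_new_account)]:
    q1, med, q3 = summarize(values)
    print(f"USD per {label}: Q1={q1:.2f}, median={med:.2f}, Q3={q3:.2f}")
```

With only five observations, the quartiles are dominated by the three zero-budget workshops, which is why the report cautions against drawing conclusions from the dollars spent.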

- Workshops take a lot of time to implement. However, we need more data to know the effect input hours have on recruitment and retention.

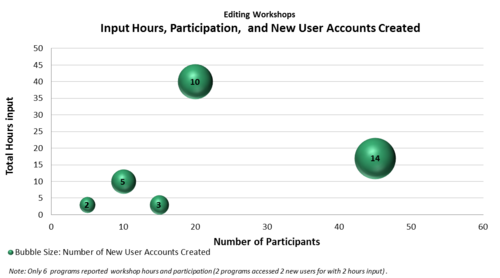

Seven workshops (70% of those reported directly) included both the input of how many hours it took to implement the event and output participant counts.

We learned that workshops take a lot of time to prepare and implement. However, we can't make a solid determination that added preparation leads to higher rates of new user activation without collecting more workshop data. Using the data from those seven workshops, which included input hours, we evaluated how the amount of input hours affected the primary goals of recruitment (see Graphs 4 and 5).

Graph 4: Hours to new accounts created. As illustrated in the graph, participation ranged from groups of 3 to 40 and recruited from 0 to 14 new users after inputs ranging from 5 to 45 hours. This graph features data for 7 workshops. One workshop reported zero, so its bubble isn't visible on the chart, and two events reported the same data, so they appear together. In the graph, the number of new users recruited for each workshop is illustrated by bubble size and label. It appears that the larger the workshop, the lower the proportion of new user accounts, and that the more hours invested, the more new user accounts were created. However, no conclusion can be drawn without additional observations.

Graph 5: Hours to new accounts. As illustrated in the box plot, editing workshops took a fair amount of preparation and implementation time for program leaders and volunteers. The median hours reported were 1.7 hours per participant and 2.5 hours per new user account created. The middle half of the reports ranged from 1.2 to 2.4 hours per workshop participant and from 2.1 to 3 hours per new account created.
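The hour-based ratios in Graph 5 can be approximated the same way from the seven workshops with reported hours. The sketch below is illustrative only: the `ratios` helper is hypothetical, the "inclusive" quartile method is an assumption, and the workshop that reported zero participants is skipped because a per-participant ratio is undefined for it.

```python
from statistics import median, quantiles

# (total hours, participants, new accounts) per report number, transcribed
# from the appendix table.
hours_data = {
    16: (10, 10, 5), 21: (20, 40, 10), 34: (15, 3, 3), 41: (5, 3, 2),
    42: (5, 3, 2), 45: (13, 0, 0), 60: (45, 17, 14),
}

def ratios(rows, denominator_index):
    """Hours divided by the chosen count, skipping zero denominators."""
    return [row[0] / row[denominator_index]
            for row in rows if row[denominator_index] > 0]

rows = list(hours_data.values())
for label, index in [("participant", 1), ("new account", 2)]:
    values = ratios(rows, index)
    # method="inclusive" is an assumption about how the quartiles were computed.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    print(f"hours per {label}: Q1={q1:.1f}, "
          f"median={median(values):.1f}, Q3={q3:.1f}")
```

Skipping the zero-participant workshop leaves six usable observations, which is consistent with the 38% reporting share shown for hours per participant in the summary table.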

Outcomes

Recruitment and retention of new editors

- The majority of workshops reported user retention rates after the event. The data shows that very few new active editors are retained after the event.

- 75% of reported workshops included 3-month retention data for new editors. These reports ranged from 0% to 33% retention. The average number of active editors reported 3 months after the event was zero.[5]

- 56% of reported workshops included a 6-month retention rate. These reports ranged from 0% to 6% retention. The average number of active editors reported 6 months after the event was also zero. Data couldn't be reported for two of the reported workshops because 6 months had not yet passed since their completion.[6]

With this data, we were able to learn that the retention of new editors was minimal (See Graph 6).

New editors seem more likely to be retained when workshops are smaller in size. Because we lack additional data to confirm this observation, program leaders who want to continue running workshops should test different workshop sizes and formats.

Graph 6. Recruitment and Retention. For all editing workshops reported, the total number of participants was 230, of whom 87 (38%) created new user accounts. At 3 months, 5 new editors (6%) were still actively editing (i.e., 5+ edits per month) and, at 6 months, at least 2 were still active. (Note: 3 new users who were retained at 3 months had not yet reached the 6-month follow-up window.)
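The percentages in this recruitment and retention funnel follow directly from the four totals in the caption. A minimal sketch of the arithmetic, with illustrative variable names and a hypothetical `pct` helper:

```python
# Totals quoted in the Graph 6 caption.
participants = 230
new_accounts = 87
active_3_months = 5
# Lower bound: three users retained at 3 months had not yet reached
# the 6-month follow-up window.
active_6_months = 2

def pct(part, whole):
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole

print(f"new accounts / participants: {pct(new_accounts, participants):.0f}%")
print(f"active at 3 months / new accounts: {pct(active_3_months, new_accounts):.0f}%")
print(f"active at 6 months / new accounts: {pct(active_6_months, new_accounts):.1f}%")
```

Note that the retention percentages use new accounts, not all participants, as the denominator.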

Replication and shared learning

- While the majority of program leaders who implement workshops consider themselves experienced enough to assist others to do the same, very few have created blogs or other online material about their workshops. A small percentage also reported providing handout materials for their events.

Out of the 10 programs reported directly by program leaders:

- 64% were implemented by an experienced program leader who could help others conduct their workshops.

- 19% had developed brochures and printed materials.

- 19% had created blogs or other online information for others to learn from.

- 0% had produced guides or instructions to help others to implement workshops.

While the majority of program leaders identified themselves as experienced in producing workshops, only a few reported producing materials to be shared during the event or blogs or online information about the workshop.

Unlike edit-a-thons, in which program leaders reported having online documentation on how to implement them, none of the program leaders reported having a guide or instructions on how to implement workshops (see Graph 7).

Graph 7. Replication and Sharing. The biggest strengths that workshops demonstrated in terms of potential replication and shared learning were that most of the events were run by an experienced program leader who could help to guide others, and that a number of them had blogs, online posts, brochures, or printed materials documenting them.

Summary, suggestions, and open questions

How does the program deliver against its own goals?

Wikipedia editing workshops have been around for a long time. Initially intended to recruit new contributors for Wikipedia, they seem to be used today for a wider variety of purposes. Program leaders who reported back to us indicated that they used the workshop format not only to attract new contributors, but also to increase positive perception of and support for Wikipedia and free knowledge, as well as to increase people's awareness of Wikimedia projects.

Finding out whether this type of event delivered results against its goal of encouraging participants to become active Wikipedia editors turned out to be difficult. A comparatively high number of the usernames included in the reported cohort data were invalid.

This was caused by some workshop organizers who either collected usernames on paper or asked workshop participants to fill out an online form through Google Docs, resulting in a large number of participants remembering their usernames incorrectly.

Although this diminishes the validity of our analysis, the results indicate that editing workshops are not the most effective way of turning newcomers into editors. Out of a total of 230 workshop participants (with some organizers also counting existing editors as participants), only 87 people (38%) created new user accounts. Out of those 87, only 2 (2.3%) qualified as "active editors" half a year after the event.[7]

In order to confirm these results, we will need a better dataset, which might require the Wikimedia Foundation to provide volunteers with a software extension that facilitates the collection of usernames that come in through this and comparable event types (e.g., edit-a-thons).

We would like to see program leaders investigate further whether workshop series might achieve better results when it comes to long-term retention than one-off events.

Our analysis of edit-a-thons indicates that participants need more time to become acquainted with Wikipedia's many rules and guidelines. We think that it is very likely that the same applies to editing workshops, so perhaps having a series of workshops during a set period of time (weeks, months) might encourage new user account creation and retention.

From what we know, it seems that editing workshops don't always have a hands-on component where participants can practice their new skills while being assisted and supported by experienced Wikipedians. We think that having hands-on experience and in-person support might make a significant difference in whether workshop participants succeed in learning how to edit.

Finally, in order to achieve a higher retention rate, it is crucial to think about how to narrow down the target group. In theory, only a very small portion of people will ever be interested in becoming long-term Wikipedians. General criteria based on age, gender, and occupation are not sufficient when it comes to predicting who will show a continued interest in Wikipedia. It seems more likely that a combination of criteria (e.g., strong special interest + high computer literacy + research skills and interest + good writing abilities) will come closer to narrowing the target group down so that editing workshops can achieve higher editor retention rates. We would like to see entities in the movement experiment with different criteria which, combined with more hands-on components, might achieve the goal of turning workshop participants into long-term Wikipedians.

Although we don't have any data on whether Wikipedia editing workshops lead to an increased positive perception of Wikipedia and free knowledge in general,[8] it seems to be questionable that workshops —given their limited reach— are the most efficient way of increasing the public's awareness and support for our cause. We've seen in the early days of Wikipedia that newspaper and magazine articles can have a significant impact on the influx of new editors.[9] Although it remains an open question of whether this effect could be replicated today (given that expectations of web users have changed since then), effects of positive mass media coverage on Wikipedia's user base have never been analysed in-depth. It would be interesting to hear, at least anecdotally, from the community in different countries whether they perceived a change in attitude towards Wikipedia through favorable news coverage.

How do the program's costs compare to its outcomes?

Although, at this point, no conclusions can be drawn from the dollars spent without additional observations, it became clear in this analysis that Wikipedia editing workshops —as they've been reported in our first round of data collection— don't meet their goals of recruiting and retaining active Wikipedia editors.

That doesn't mean that workshops in general are the wrong tool to attract new editors. Every day, workshops (as a teaching model) on a wide variety of topics other than Wikipedia are being used as a tool for teaching specific sets of skills in different parts of the world.

With that said, we believe that editing workshops can deliver results if program leaders combine further experimentation with a thorough evaluation of the results against goals. The high number of donations (meeting space, equipment, etc.) indicates that third parties are willing to support this model of educating groups of people on how to edit Wikipedia. This could make workshops a tool that can be used at relatively low cost.

How easily can the program be replicated?

As of now, there seems to be no documentation available that offers people who intend to run Wikipedia editing workshops clear instructions on how to run this kind of event effectively. Based on the results of our analysis, this is not surprising. Workshops don't deliver on what they're expected to do: recruit new editors and retain them for a sufficient amount of time.

What's needed first and foremost is a proven model for workshops that succeed in delivering against their goals. We encourage workshop leaders to further experiment with this model and report learning patterns in cases where results are satisfactory enough to inform others about the methods that have been applied to make workshops achieve their intended outcomes.

Next steps

- Next steps in brief

- Increased tracking of detailed budgets and hour inputs by program leaders

- Improved ways of tracking usernames and event dates/times for program leaders to make it easier and faster to gather such data. We hope to support the community in creating these methods.

- Program leaders experimenting with targeting workshops toward specific groups and evaluating those workshops. We would like to work with program leaders who are interested in testing this program design, in which workshops are targeted toward people who are more likely to edit, a trait that isn't necessarily connected to demographics (gender, age).

- Experimenting with hands-on workshops and workshop series to see if these types of workshops can have an impact on the outcomes and priority goals of recruitment and retention.

- An evaluation of recruitment strategies being used by program leaders due to concerns with workshops not meeting their intended priority goals. Can recruitment and promotion of workshops be implemented differently?

- Pre- and post-workshop surveys to understand more about what participants know going into, and leaving, the workshop. This allows program leaders to see if their event is meeting priority goals about Wikimedia movement education and skill improvement.

- Exploring opportunities to determine the value of workshops through Return on Investment analysis

- Next steps in detail

As with all of the programs reviewed in this round of reporting and analysis, it is key that efforts are made toward properly tracking and valuing programming inputs in terms of budgets and hours invested, as well as tracking usernames and event dates for proper monitoring of user behaviors. It seems especially important that workshop implementers also focus energy on better targeting their intended audience: new users.

Many of the participants, especially in larger workshop groups, were not new users but existing users (who also demonstrated very low levels of activity and/or basic retention at 3 and 6 months following). If the workshops are intended for new users and are attracting the wrong participants, the participants may not get what they need out of the workshop to motivate and help them along a path to becoming more active editors, and new users may also not get the attention and support they need as beginners. It may be key that program leaders review their current recruitment strategies and how they target potential new editors in order to increase their effectiveness in meeting the intended goal (and all the outcomes that possibly ensue).

It is also unclear whether the workshops meet their core goal of making contributing easier, a key stepping stone for recruitment and retention.

A survey or other feedback from participants may help to answer whether the workshops accomplish this step, and to what extent, to help understand the lack of new editor recruitment and retention observed for these programming efforts. It will be important to identify core skills and gather new user feedback to assess the extent to which they are gaining the knowledge and skills they need to become an editor.

It will be important for program leaders also to consider how these events may be getting at their other goal priorities (i.e., increasing awareness, positive perceptions, and support for open knowledge and Wikimedia projects).

Lastly, we must work toward strategies for both measuring potential impacts as well as making efforts to value these impacts for future Return on Investment analysis.

External resources

Appendix

Summary statistics table: editing workshops (raw data)

| Reported data | Percent reporting | Minimum | Maximum | Mean | Median | Mode | Standard deviation |
|---|---|---|---|---|---|---|---|
| Non-zero budgets | 13% | $100.00 | $750.00 | $425.00 | $425.00 | none (other than zero) | $459.62 |
| Staff hours | 38% | 0.00 | 15.00 | 5.17 | 0.50 | 0.00 | 7.63 |
| Volunteer hours | 44% | 5.00 | 30.00 | 11.71 | 10.00 | 5.00 | 8.98 |
| Total hours | 44% | 5.00 | 45.00 | 16.14 | 13.00 | 5.00 | 13.81 |
| Donated meeting space | 70%[10] | Not applicable: frequency of selection only | | | | | |
| Donated materials/equipment | 50%[11] | Not applicable: frequency of selection only | | | | | |
| Donated food | 10%[12] | Not applicable: frequency of selection only | | | | | |
| Donated prizes/giveaways | 10%[13] | Not applicable: frequency of selection only | | | | | |
| Participants | 94% | 3 | 40 | 15 | 12 | 3 | 13 |
| Dollars spent per participant | 31% | $0.00 | $18.75 | $4.93 | $0.00 | $0.00 | $8.14 |
| Input hours per participant | 38% | 0.50 | 5.00 | 2.08 | 1.67 | 1.67 | 1.60 |
| Bytes added | Not applicable: content production is not the goal of editing workshops | | | | | | |
| Dollars spent per text page (measured in bytes) | | | | | | | |
| Input hours per text page (measured in bytes) | | | | | | | |
| Photos added | | | | | | | |
| Dollars spent per photo | | | | | | | |
| Input hours per photo | | | | | | | |
| Pages created or improved | | | | | | | |
| Dollars spent per page created or improved | | | | | | | |
| Input hours per page created or improved | | | | | | | |
| UNIQUE photos used[14] | | | | | | | |
| Dollars spent per photo USED (non-duplicated count) | | | | | | | |
| Input hours per photo USED (non-duplicated count) | | | | | | | |
| Number of Good Articles | | | | | | | |
| Number of Featured Articles | | | | | | | |
| Number of Quality Images | | | | | | | |
| Number of Valued Images | | | | | | | |
| Number of Featured Pictures | | | | | | | |
| 3-month retention rate | 75% | 0% | 33% | 9% | 0% | 0% | 15% |
| 6-month retention rate | 56% | 0% | 6% | 1% | 0% | 0% | 2% |
| Share with an experienced program leader | 63%[15] | Not applicable: frequency of selection only | | | | | |
| Share that developed brochures and printed materials | 19%[16] | Not applicable: frequency of selection only | | | | | |
| Share with blogs or online sharing | 19%[17] | Not applicable: frequency of selection only | | | | | |
| Share with a program guide or instructions | 0%[18] | Not applicable: frequency of selection only | | | | | |

Bubble graph data

| Report no. | Budget | Number of participants | Bubble size: number of new user accounts created |
|---|---|---|---|
| 16 | $0.00 | 10 | 5 |
| 21 | $750.00 | 40 | 10 |
| 41 | $0.00 | 3 | 2 |
| 42 | $0.00 | 3 | 2 |
| 60 | $100.00 | 17 | 14 |

| Report no. | Total hours | Number of participants | Bubble size: number of new user accounts created |
|---|---|---|---|
| 16 | 10 | 10 | 5 |
| 21 | 20 | 40 | 10 |
| 34 | 15 | 3 | 3 |
| 41 | 5 | 3 | 2 |
| 42 | 5 | 3 | 2 |
| 45 | 13 | 0 | 0 |
| 60 | 45 | 17 | 14 |

More data

| Report no. | Donated meeting space | Donated equipment | Donated food | Donated prizes/giveaways | Budget | Staff hours | Volunteer hours | Total hours |
|---|---|---|---|---|---|---|---|---|
| 16 | | | | | $0.00 | 0 | 10.00 | 10 |
| 21 | | | | | $750.00 | 15 | 5.00 | 20 |
| 27 | | | | | | | | |
| 29 | | | | | | | | |
| 34 | | | | | | | 15.00 | 15 |
| 41 | | | | | $0.00 | 0 | 5.00 | 5 |
| 42 | | | | | $0.00 | 0 | 5.00 | 5 |
| 45 | | | | | | 1 | 12.00 | 13 |
| 60 | | | | | $100.00 | 15 | 30.00 | 45 |
| 61 | | | | | | | | |
| 98 | | | | | | | | |
| 99 | | | | | | | | |
| 100 | | | | | | | | |
| 101 | | | | | | | | |
| 102 | | | | | | | | |
| 103 | | | | | | | | |

| Report no. | Number of participants | Number of NEW user accounts created | Active editor rate at 3 months | Active editor rate at 6 months | 6-month retention rate (new contributors ONLY) |
|---|---|---|---|---|---|
| 16 | 10 | 5 | 0% | 0% | 0% |
| 21 | 40 | 10 | | | |
| 27 | 3 | 3 | 0% | 0% | 0% |
| 29 | 12 | 0 | | | |
| 34 | 3 | 3 | 33% | | |
| 41 | 3 | 2 | 33% | | |
| 42 | 3 | 2 | 33% | | |
| 45 | | | | | |
| 60 | 17 | 14 | 6% | 6% | 6% |
| 61 | 17 | | | | |
| 98 | 15 | 8 | 0% | 0% | 0% |
| 99 | 22 | 11 | 0% | 0% | 0% |
| 100 | 12 | 4 | 0% | 0% | 0% |
| 101 | 30 | 3 | 0% | 0% | 0% |
| 102 | 4 | 4 | 0% | 0% | 0% |
| 103 | 39 | 18 | 3% | 3% | 3% |

Notes

- ↑ (Mean = 10, StdDev = 4)

- ↑ Averages reported refer to the median response (Median = 12, Mean = 15, StdDev = 13).

- ↑ (Median = 52%, Mean = 56%, StdDev = 33%)

- ↑ (Median = 52%, Mean = 56%, StdDev = 33%)

- ↑ (Median = 0, Mean = 9%, StdDev = 15%)

- ↑ (Median = 0, Mean = 1%, StdDev = 2%)

- ↑ Retention data for new users was only available for 12 of the programs for 3-month follow-up (two programs were too recent), and nine programs for 6-month follow-up (five programs were too recent).

- ↑ This data could be generated through pre- and post-event surveys.

- ↑ An article published in the German print magazine Der Spiegel in October 2004 led to a significant jump in new users on the German Wikipedia in subsequent months.

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ References to photos used count the number of unique, non-duplicated photos in use as of November 4, 2013.

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly

- ↑ Percentages out of the 10 workshops that were reported directly
