Learning and Evaluation/Global metrics

- Number of active editors involved

- Number of new registered users

- Number of individuals involved

- Number of new images/media added to Wikimedia article pages

- Number of articles added or improved on Wikimedia projects

- Number of bytes added to and/or deleted from Wikimedia projects

- Learning question: Did your work increase the motivation of contributors, and how do you know?

"Global metrics" are designed to provide a standardized way of tracking a few key measures of progress towards our strategic goals for content and participation. After two years of observing the goals and measures of various grants projects, a few core metrics rose to the surface as commonly used indicators, though we found these measures were calculated inconsistently across projects/grants.

The implementation of global metrics provides a way for all of us – grantees, grantmaking committees, WMF grantmaking staff – to make a few summative statements about the work being done through this subset of donor funds. For example, for the first time, we will be able to say that the $6M in grants spent annually helped facilitate x number of articles, and y new users.

Global metrics are the starting point for asking ourselves: "How are we doing? How are we using donor funds?" They are not an end in themselves. There are no good or bad "results" with these metrics; they are meant to be a starting point in assessing impact, and will hopefully spark conversation about what we can do better and how.

Reporting global metrics takes the form of a small template table with six required quantitative measures and one required qualitative learning question. This table will be included on every IEG, PEG, and APG grants reporting form, regardless of project.

What they are

- Anchors aligned with strategic goals:

The global metrics are based on two of the primary strategic goals set out in the Wikimedia strategic planning process: participation (editors) and content (articles). The majority of WMF grantmaking funding goes to supporting these initiatives, and they should be kept in mind when planning and evaluating projects.[1]

- A way to gauge overall participation and content for grants:

Given the breadth of reporting detail of grants from 2012-14, it has been impossible to make aggregate statements about the work being done by the individuals and communities around the world which are supported by grants. For example, just how many articles have been improved which are connected to initiatives supported through the $8M in WMF grantmaking? How many new editors have been engaged through funded outreach initiatives globally? The Global Metrics will give us a better estimate of these.[2]

- Consistent measures:

The Global Metrics provide a uniform set of measures that we can aggregate across all the primary grantmaking we do. They give us all a way of making some collective statements about the work being accomplished, and ensure that these basics are collected in a uniform fashion: that is, the metrics should all be calculated in the same way, through the standardized use of tools such as Wikimetrics.
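To make the idea of aggregation concrete, here is a minimal Python sketch that sums uniformly reported metrics across several grant reports. The report structure, the key names, and the sample numbers are invented for illustration; they do not reflect an actual reporting form or real grant data. A metric that a report omits is treated as 0.

```python
# Hypothetical metric keys; the real reporting form may name these differently.
METRICS = (
    "active_editors",
    "new_users",
    "individuals",
    "media_in_use",
    "articles",
    "bytes_changed",
)


def aggregate(reports):
    """Sum each global metric across reports, treating missing values as 0."""
    totals = {metric: 0 for metric in METRICS}
    for report in reports:
        for metric in METRICS:
            totals[metric] += report.get(metric, 0)
    return totals


# Invented sample reports: the second one has no content metrics at all.
reports = [
    {"active_editors": 12, "new_users": 40, "individuals": 60,
     "media_in_use": 5, "articles": 30, "bytes_changed": 1800},
    {"active_editors": 3, "individuals": 25},
]

totals = aggregate(reports)
print(totals["articles"], totals["individuals"])
```

Simple sums like this can double-count people who take part in more than one grant, so the totals are best read as estimates and alongside each grant's context.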

What they are not

Do you want more detailed measurement help? See additional evaluation measures organized by project objective in "Measures for Evaluation".

- The only numbers to be reported:

Reports will still require tracking of grants against their proposed outcomes. The global metrics are an additional requirement (though overlap may occur, as some grants have goals around number of participants or amount of content). For guidance on more appropriate measures to evaluate, see "Measures for Evaluation".

- The best measures of success for most projects:

For most grants, these metrics may just be the tip of the iceberg in terms of describing the whole story of impact. For a few grants, these metrics will be hardly relevant at all! For example, an IEG research project such as the Women in Wikipedia will not be focused on any content creation or new user recruitment, though it will have the involvement of some active editors. Each grantee should consider these required metrics when designing their evaluation strategy, but should more importantly focus on evaluating their specific program goals.

- To be considered in isolation:

Per the above, these metrics will not paint the full picture of a grantee's progress, and committees, WMF staff, and the community should be careful not to apply them as such. They should also be considered in association with the baseline (e.g., 10 new articles in some languages would be a huge feat compared to others), as well as in conjunction with the grant's proposed goals. The Global Metrics are most informative when they are considered in context.

Key cautions!

- For grantees

- Don't necessarily abandon your project just because it doesn't fit into the global metrics framework! You may have a program strategy that makes a lot of sense, and if you have a good rationale and metrics to show your progress against it, that is fine! Just be sure to include your rationale for why your project doesn't effect change at the participation and content levels.

- Don't ONLY report on this handful of metrics. You should be sure to create an evaluation strategy for your project that will capture whether or not you are meeting your project's objectives, and you should use your grant report to communicate whether your experiment worked and how you know (the measures of success). Some of these measures may be included in the global metrics, but likely not all of them.

- Don't think that every global metric has to be an answer greater than 0: We expect that most projects will not see results across all six quantitative dimensions. This is definitely OK.

- For evaluators

- CONTEXT is the key! Direct comparison is not ideal, given differences in baselines, potential market size, etc. Do report on global metrics with as much context as possible; the more context they carry, the more useful they become.

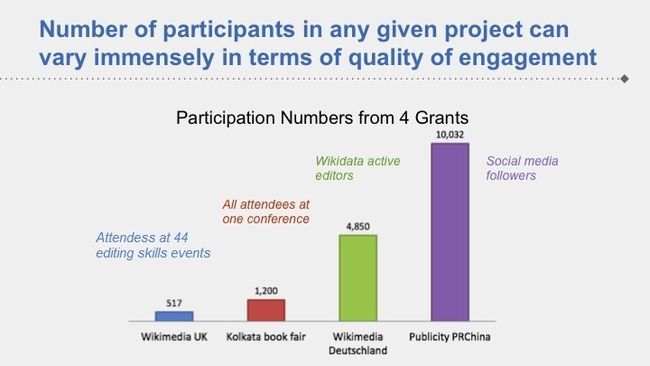

- "Participants involved" is a problematic metric. Of all the metrics, this is the least comparable across grantees. Be aware of the quality of interaction with the people involved, and again, keep context in mind. For example, a program with massive outreach but low levels of engagement in an area that has little awareness of Wikipedia might be OK as an investment, while similar outreach in parts of the world with high levels of awareness of Wikipedia might need to focus on high-engagement participation strategies. See the accompanying graph for an example.

The metrics

The metrics are listed below; each metric links to a learning pattern describing how to calculate that metric. Please take a look!

| Metric | Definition | Example |
|---|---|---|
| Calculating global metrics | General information on collecting and reporting global metrics | |
| PARTICIPATION (COMMUNITY) | | |
| 1. Number of active editors involved | Existing active editors (5+ edits per month) involved in any capacity (planning, executing, participating) during the project duration | E.g., Number of active editors using The Wikipedia Library; Number of active editors involved in mentoring an education program. |
| 2. Number of newly registered users | Newly registered users as a result of the project, using a two-week window for specific events | E.g., Number of new editing accounts created through outreach campaigns to a university throughout a school year |
| 3. Number of individuals involved | All people participating in the project (organizers, users, attendees, etc.) | E.g., Number of attendees at Wikimania; Number of students + teachers + ambassadors in an education program |
| CONTENT | | |
| 4a. Number of new images/media added to Wikimedia article pages | Number of images uploaded to Commons which have been added to Wikipedia articles or other Wikiproject pages | E.g., Number of images added to Wikipedia articles from WLM |
| 4b. Number of new images/media uploaded to Wikimedia Commons (Optional) | Number of images uploaded to Commons, whether or not they have been added to Wikipedia articles or other Wikiproject pages | E.g., Number of images added to Wikimedia Commons from WLM |
| 5. Number of articles added or improved on Wikimedia projects | By project (Wikipedia, Wikisource, etc.), the number of articles that were created or improved as a result of the program efforts | E.g., Number of articles improved on Arabic Wikipedia during a semester-long education program |
| 6. Number of bytes added to and/or deleted from Wikimedia projects (absolute value) | By project, total number of bytes contributed to or deleted from the Wikimedia projects through programs. This metric should not include photos or other media. | E.g., Number of bytes added + deleted on Wikisource after an editing campaign: (1500 added) + (300 removed) = 1800 total bytes |
| LEARNING QUESTION | | |
| 7. Learning question: Did your work increase the motivation of contributors, and how do you know? | | |
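As a rough illustration of how metrics 1 and 6 might be computed from raw edit records, here is a minimal Python sketch. The `Edit` record, its field names, the function names, and the sample data are all hypothetical; in practice these figures would come from a tool such as Wikimetrics. The "active editor" check is a simplification that looks at a single calendar month.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Edit:
    # Hypothetical record layout, not a Wikimetrics schema.
    user: str
    day: date
    byte_delta: int  # > 0 means bytes added, < 0 means bytes removed


def active_editors(edits, year, month, threshold=5):
    """Return users with at least `threshold` edits in the given month."""
    counts = Counter(
        e.user for e in edits if (e.day.year, e.day.month) == (year, month)
    )
    return {user for user, n in counts.items() if n >= threshold}


def absolute_bytes(edits):
    """Metric 6: bytes added plus bytes removed, both counted as positive."""
    return sum(abs(e.byte_delta) for e in edits)


# Invented sample: Alice adds 300 bytes on five days, Bob removes 300 once.
sample = [Edit("Alice", date(2015, 3, d), 300) for d in range(1, 6)]
sample.append(Edit("Bob", date(2015, 3, 10), -300))

print(active_editors(sample, 2015, 3))  # {'Alice'}
print(absolute_bytes(sample))           # 1800
```

The sample reproduces the arithmetic in the table: 1500 bytes added plus 300 bytes removed gives 1800 total bytes, rather than a net change of 1200.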

Background

Problem: Designing WMF grantmaking around complete self-evaluation limited our ability to consolidate/sum the inputs, outputs, and outcomes of grant-funded projects.

Solution: Standardize a small subset of metrics from all grantees, while still asking them to conduct their own detailed self-evaluation of their desired project.

History

In fiscal year 2012–13, the Wikimedia Foundation identified grantmaking as one of its key focus areas and created a revised grantmaking suite that consisted of four primary grants avenues (see Grants:Start). While reporting was absolutely required and certain types of metrics were encouraged in these reports, by and large the consensus was to keep the grantmaking reporting process more open-ended, allowing for complete self-evaluation.

Two fiscal years and ~$15M in grants later, the grantmaking community ("we") is now in a space where it can see what progress has been made through grants and how. While we have seen significant improvements in how grantees are planning and evaluating their initiatives, there are marked inconsistencies in the types of information reported. We are seeing that the more clearly linked to strategic goals grants are, the more likely they are to achieve impact.[3]

While metrics can only ever be taken in context and with careful consideration, the global metrics are an attempt to concretely anchor the grantmaking initiatives in the strategic priorities of the movement, and also to provide consistency in compilation so that we have a baseline for aggregation.

Key questions

The following questions were taken into consideration when determining an appropriate shortlist of metrics:

- How easy is a metric to gather/measure?

- Are tools available to calculate this metric?

- To what proportion of funded projects is the metric relevant?

- How can the metric be interpreted across types of projects funded, such as events, conferences, programs, annual plans, and research?

- Do the metrics encourage specific outcome goals? How can we appropriately span the inputs, outputs, and outcomes?

- Can we embed both qualitative and quantitative measures?

Known gaps and limitations

- Does not account for quality, retention, or readership; to be included once better methods for tracking exist

- Will not cover the right metrics for all types of grants; grantees still need to report more fully based on their grant type!

- The learning question will likely change from year to year as the areas we need to understand shift, and the quantitative measures may also change based on new ways of measuring or new strategic priorities.

Resources

Presentation

This presentation from Wikimedia Conference 2015 details the global metrics, as well as some of the ways to get the data using various tools.

Learning Patterns

We have developed a set of learning patterns to support understanding and application of the global metrics. These patterns provide useful guidance to grant recipients, committee members, program staff, and community members involved in future grant-funded projects and mission-aligned programs.

Engaging and retaining productive Wikimedia contributors is as important as recruiting new ones.

You need to represent the potential impact of your outreach project on the growth of the Wikimedia movement.

It's easier to demonstrate impact if you can show who participated in or benefited from your work.

Media-file contributions are most valuable when they're integrated into Wikimedia content.

Edits, files, and bytes contributed and other metrics may not totally represent the significance of content contributions that involve creating or improving many pages.

The amount of content that a project has contributed to a Wikimedia project can be hard to measure.

Did your work increase the motivation of contributors? How do you know?

You need to measure your project's output according to the global metrics specified by the Wikimedia Foundation.